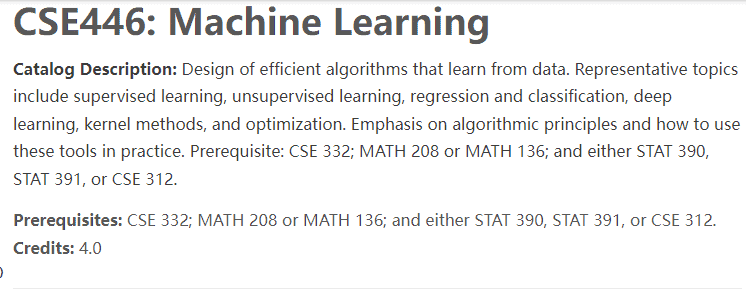

MY-ASSIGNMENTEXPERT™ offers assignment writing, exam-taking, and tutoring services for the washington.edu CSE446 Machine Learning course!

CSE446 Course Overview

Catalog Description: Design of efficient algorithms that learn from data. Representative topics include supervised learning, unsupervised learning, regression and classification, deep learning, kernel methods, and optimization. Emphasis on algorithmic principles and how to use these tools in practice. Prerequisites: CSE 332; MATH 208 or MATH 136; and either STAT 390, STAT 391, or CSE 312.

Credits: 4.0

Prerequisites

Programming in Python

We will use Python for the programming portions of the assignments. Python is a powerful general-purpose programming language with excellent libraries for statistical computations and visualizations. During the first week of the quarter, we will provide a tutorial to jump-start your transition into working in Python.

Here are some Python related resources:

www.learnpython.org: “Whether you are an experienced programmer or not, this website is intended for everyone who wishes to learn the Python programming language.”

CSE446 Machine Learning HELP (EXAM HELP, ONLINE TUTOR)

Let $X$ and $Y$ be independent random variables with PDFs given by $f$ and $g$, respectively. Let $h$ be the PDF of the random variable $Z=X+Y$.

a. Show that $h(z)=\int_{-\infty}^{\infty} f(x) g(z-x) d x$. If you are more comfortable with discrete probabilities, you can instead derive an analogous expression for the discrete case, and then give a one-sentence explanation of why your expression is analogous to the continuous case.

To derive the PDF of $Z=X+Y$, start from its CDF $H(z)=\mathbb{P}(Z\le z)$. Because $X$ and $Y$ are independent, their joint density factors as $f(x)g(y)$, so

$$H(z) = \iint_{x+y\le z} f(x) g(y)\, dy\, dx = \int_{-\infty}^{\infty} f(x) \left( \int_{-\infty}^{z-x} g(y)\, dy \right) dx.$$

Substituting $y = t - x$ in the inner integral (so the condition $y \le z-x$ becomes $t \le z$) and then interchanging the order of integration, which is allowed because the integrand is nonnegative (Tonelli's theorem), gives:

\begin{align*} H(z) &= \int_{-\infty}^{\infty} f(x) \int_{-\infty}^{z} g(t-x)\, dt\, dx \\ &= \int_{-\infty}^{z} \left( \int_{-\infty}^{\infty} f(x) g(t-x)\, dx \right) dt \end{align*}

So $H(z)$ is the integral from $-\infty$ to $z$ of the function $t \mapsto \int_{-\infty}^{\infty} f(x) g(t-x)\, dx$, which means that function is a density of $Z$:

$$h(z) = \int_{-\infty}^{\infty} f(x) g(z-x)\, dx$$

Note that independence is used only to factor the joint density as $f(x)g(y)$; the interchange of the integrals is justified by the nonnegativity of $f$ and $g$, not by independence.
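The problem also allows the discrete analogue: for independent integer-valued $X$ and $Y$ with PMFs $p$ and $q$, $\mathbb{P}(Z=z)=\sum_x p(x)\,q(z-x)$, the same formula with the integral replaced by a sum over every way of splitting $z$ into $x + (z-x)$. The Python sketch below evaluates that sum for two fair six-sided dice; the dice example and the helper name `pmf_of_sum` are illustrative assumptions, not part of the assignment.

```python
from collections import defaultdict
from fractions import Fraction


def pmf_of_sum(p, q):
    """Discrete convolution: PMF of Z = X + Y for independent X ~ p, Y ~ q.

    p and q map integer values to probabilities. By independence the pair
    (x, y) has probability p[x] * q[y]; crediting it to z = x + y and
    grouping by z gives P(Z = z) = sum over x of p(x) * q(z - x).
    """
    h = defaultdict(Fraction)  # Fraction() == 0, so missing z start at 0
    for x, px in p.items():
        for y, qy in q.items():
            h[x + y] += px * qy
    return dict(sorted(h.items()))


# Hypothetical example: two independent fair six-sided dice.
die = {k: Fraction(1, 6) for k in range(1, 7)}
h = pmf_of_sum(die, die)

print(h[7])             # 1/6  (six of the 36 equally likely outcomes sum to 7)
print(sum(h.values()))  # 1
```

Exact `Fraction` arithmetic is used so that the resulting PMF sums to exactly 1 rather than approximately 1.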

b. If $X$ and $Y$ are both independent and uniformly distributed on $[0,1]$ (i.e., $f(x)=g(x)=1$ for $x \in[0,1]$ and 0 otherwise), what is $h$, the PDF of $Z=X+Y$?

If $X$ and $Y$ are both independent and uniformly distributed on $[0,1]$, then their PDFs are given by:

$$f(x) = g(x) = \begin{cases} 1 & \text{if } x\in [0,1]\\ 0 & \text{otherwise} \end{cases}$$

To find the PDF of $Z=X+Y$, we use the formula derived in part (a). Since $f$ vanishes outside $[0,1]$,

$$h(z) = \int_{-\infty}^{\infty} f(x) g(z-x)\, dx = \int_{0}^{1} \mathbb{I}_{[0,1]}(z-x)\, dx$$

where $\mathbb{I}_{[0,1]}$ is the indicator function, which is 1 if its argument is in the interval $[0,1]$ and 0 otherwise. The integrand equals 1 exactly when $0\le x\le 1$ and $z-1\le x\le z$, that is, when $x\in[\max(0,z-1),\min(1,z)]$. Hence $h(z)$ is the length of this interval when it is nonempty, and 0 otherwise:

$$h(z) = \begin{cases} z & \text{if } z\in [0,1]\\ 2-z & \text{if } z\in (1,2]\\ 0 & \text{otherwise} \end{cases}$$

Therefore, $Z=X+Y$ has a triangular distribution on $[0,2]$ with its peak at $z=1$.
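As a sanity check on the piecewise answer ($h(z)=z$ on $[0,1]$, $2-z$ on $(1,2]$, 0 elsewhere), the Python sketch below approximates the convolution integral from part (a) with a midpoint Riemann sum over $x\in[0,1]$ (where $f$ is nonzero) and compares it with the closed form. It also estimates the density near one point from Monte Carlo samples of $X+Y$. The grid size, sample count, seed, bin width, and helper names are arbitrary assumptions chosen for illustration.

```python
import random


def f_uniform(x):
    """PDF of Uniform[0, 1]; used for both f and g."""
    return 1.0 if 0.0 <= x <= 1.0 else 0.0


def h_closed_form(z):
    """Piecewise density of Z = X + Y derived above."""
    if 0.0 <= z <= 1.0:
        return z
    if 1.0 < z <= 2.0:
        return 2.0 - z
    return 0.0


def h_numeric(z, n=10_000):
    """Midpoint-rule approximation of h(z) = integral of f(x) g(z - x) dx.

    f vanishes outside [0, 1], so integrating over [0, 1] loses nothing.
    """
    dx = 1.0 / n
    return sum(f_uniform((i + 0.5) * dx) * f_uniform(z - (i + 0.5) * dx)
               for i in range(n)) * dx


for z in (-0.5, 0.25, 0.5, 1.0, 1.5, 1.9, 2.5):
    print(f"z={z:5.2f}  numeric={h_numeric(z):.4f}  closed form={h_closed_form(z):.4f}")

# Monte Carlo: the fraction of samples of X + Y falling in a small bin
# around z0, divided by the bin width, approximates h(z0).
random.seed(0)
samples = [random.random() + random.random() for _ in range(200_000)]
z0, width = 0.5, 0.05
frac = sum(abs(s - z0) < width / 2 for s in samples) / len(samples)
print(f"Monte Carlo density near z={z0}: {frac / width:.3f}")
```

The numeric column should agree with the closed form to within the grid resolution, and the Monte Carlo estimate near $z=0.5$ should be close to $h(0.5)=0.5$.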
