MY-ASSIGNMENTEXPERT™ can provide assignment writing, exam assistance, and tutoring services for the handbook NEUR30006 Neural Networks course!

This is a successful assignment-help case for the University of Melbourne's complex networks course.

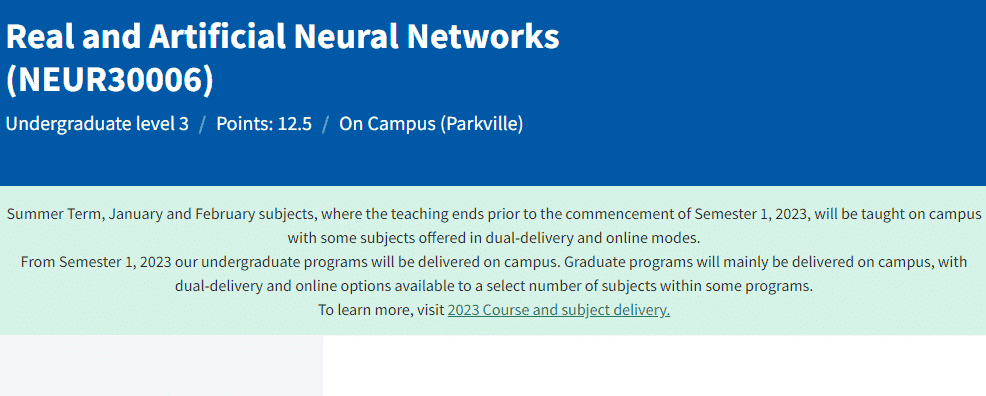

NEUR30006 Course Overview

Availability

Semester 2


The analysis of real neural networks and the construction of artificial neural networks afford mutually synergistic technologies with broad application within and beyond neuroscience. Artificial neural networks, and other machine learning methods, have found numerous applications in analysis and modelling, and have produced insights into numerous complex phenomena (and generated huge economic value). Such technologies can also be used to gain insights into the biological systems that inspired their creation: we will explore how learning is instantiated in artificial and biological neural networks.

Prerequisites

The subject aims to provide foundation skills for those who may wish to pursue neuroscience – or any research or work environment that involves the creation or capture, and analysis, of complex data. Students will gain experience with digital signals and digital signal processing (whether those signals are related to images, molecular data, connectomes, or electrophysiological recordings), and will learn how to conceptualise and implement approaches to modelling data by constructing an artificial neural network using the Python programming language.

NEUR30006 Neural Networks HELP (EXAM HELP, ONLINE TUTOR)

Course Policies

Go to the course website and read the course policies carefully. Leave a followup in the Homework 0, Question 2 thread on Ed if you have any questions. Are the following situations violations of course policy? Write “Yes” or “No”, and a short explanation for each.

(a) Alice and Bob work on a problem in a study group. They write up a solution together and submit it, noting on their submissions that they wrote up their homework answers together.

(b) Carol goes to a homework party and listens to Dan describe his approach to a problem on the board, taking notes in the process. She writes up her homework submission from her notes, crediting Dan.

(c) Erin gets frustrated by the fact that a homework problem given seems to have nothing in the lecture, notes, or discussion that is parallel to it. So, she starts searching for the problem online. She finds a solution to the homework problem on a website. She reads it and then, after she has understood it, writes her own solution using the same approach. She submits the homework with a citation to the website.

(d) Frank is having trouble with his homework and asks Grace for help. Grace lets Frank look at her written solution. Frank copies it into his notebook and uses the copy to write and submit his homework, crediting Grace.

(e) Heidi has completed her homework. Her friend Irene has been working on a homework problem for hours, and asks Heidi for help. Heidi sends Irene photos of her solution through an instant messaging service, and Irene uses them to write her own solution with a citation to Heidi.

Gradient Descent Doesn’t Go Nuts with Ill-Conditioning

Consider a linear regression problem with $n$ training points and $d$ features. When $n=d$, the feature matrix $F \in \mathbb{R}^{n \times n}$ has some maximum singular value $\alpha$ and an extremely tiny minimum singular value. We have noisy observations $\mathbf{y}=F \mathbf{w}^*+\epsilon$. If we compute $\hat{\mathbf{w}}_{inv}=F^{-1} \mathbf{y}$, then due to the tiny singular value of $F$ and the presence of noise we observe that $\left\|\hat{\mathbf{w}}_{inv}-\mathbf{w}^*\right\|_2=10^{10}$.

Suppose that instead of inverting the matrix we decide to use gradient descent. We run $k$ iterations of gradient descent to minimize the loss $\ell(\mathbf{w})=\frac{1}{2}\|\mathbf{y}-F \mathbf{w}\|_2^2$ starting from $\mathbf{w}_0=\mathbf{0}$. We use a learning rate $\eta$ which is small enough that gradient descent cannot possibly diverge for the given problem. (This is important. You will need to use this.)

The gradient-descent update for $t>0$ is:

$$
\mathbf{w}_t=\mathbf{w}_{t-1}-\eta\left(F^{\top}\left(F \mathbf{w}_{t-1}-\mathbf{y}\right)\right)
$$

We are interested in the error $\left\|\mathbf{w}_k-\mathbf{w}^*\right\|_2$. We want to show that in the worst case, this error can grow at most linearly with the number of iterations $k$, and in particular that $\left\|\mathbf{w}_k-\mathbf{w}^*\right\|_2 \leq k \eta \alpha\|\mathbf{y}\|_2+\left\|\mathbf{w}^*\right\|_2$.

In other words, the error cannot go “nuts,” at least not very fast.

For the purposes of the homework, you only have to prove the key idea, since the rest follows by applying induction and the triangle inequality.

Show that for $t>0$, $\left\|\mathbf{w}_t\right\|_2 \leq\left\|\mathbf{w}_{t-1}\right\|_2+\eta \alpha\|\mathbf{y}\|_2$.

(HINT: What do you know about $\left(I-\eta F^{\top} F\right)$ if gradient descent cannot diverge? What are its eigenvalues like? Use this fact.)
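The claimed bounds can be checked numerically before attempting the proof. The following is a minimal NumPy sketch, not part of the original problem: the matrix size, singular values, noise level, step size $\eta = 1/\alpha^2$, and the helper name `gradient_descent_norms` are all illustrative assumptions. It builds an ill-conditioned $F$, runs the update above from $\mathbf{w}_0=\mathbf{0}$, and compares the per-step and overall bounds against what is observed.

```python
import numpy as np

def gradient_descent_norms(F, y, eta, k):
    """Run k gradient-descent steps on 0.5 * ||y - F w||^2 from w_0 = 0.

    Returns the final iterate and the norms ||w_t||_2 for t = 0..k.
    (Hypothetical helper, named here for illustration only.)
    """
    w = np.zeros(F.shape[1])
    norms = [0.0]
    for _ in range(k):
        w = w - eta * (F.T @ (F @ w - y))
        norms.append(np.linalg.norm(w))
    return w, norms

rng = np.random.default_rng(0)
n = 5

# Build F = U diag(s) V^T with one tiny singular value (assumed values).
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
V, _ = np.linalg.qr(rng.standard_normal((n, n)))
s = np.array([2.0, 1.0, 0.5, 0.1, 1e-8])
F = U @ np.diag(s) @ V.T
alpha = s.max()  # maximum singular value

w_star = rng.standard_normal(n)
y = F @ w_star + 1e-3 * rng.standard_normal(n)  # noisy observations

# With eta = 1 / alpha^2, the eigenvalues of I - eta F^T F lie in [0, 1],
# so gradient descent cannot diverge.
eta = 1.0 / alpha**2
k = 200
w_k, norms = gradient_descent_norms(F, y, eta, k)

step = eta * alpha * np.linalg.norm(y)
# Per-step claim: ||w_t|| <= ||w_{t-1}|| + eta * alpha * ||y||
per_step_ok = all(norms[t] <= norms[t - 1] + step + 1e-12 for t in range(1, k + 1))

err_gd = np.linalg.norm(w_k - w_star)
bound = k * step + np.linalg.norm(w_star)
err_inv = np.linalg.norm(np.linalg.solve(F, y) - w_star)

print("per-step bound holds:", per_step_ok)
print("gradient-descent error:", err_gd, "bound:", bound)
print("direct-inverse error:", err_inv)
```

A numerical check like this does not replace the proof, but it makes the contrast concrete: the direct inverse amplifies the noise by the reciprocal of the smallest singular value, while each gradient-descent step can add at most $\eta \alpha\|\mathbf{y}\|_2$ to the norm of the iterate.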

Regularization from the Augmentation Perspective

Assume $\mathbf{w}$ is a $d$-dimensional Gaussian random vector $\mathbf{w} \sim \mathcal{N}(\mathbf{0}, \Sigma)$ and $\Sigma$ is symmetric positive-definite. Our model for how the $\left\{y_i\right\}$ training data is generated is

$$
y=\mathbf{w}^{\top} \mathbf{x}+Z, \quad Z \sim \mathcal{N}(0,1)
$$

where the noise variables $Z$ are independent of $\mathbf{w}$ and iid across training samples. Notice that all the training $\left\{y_i\right\}$ and the parameters $\mathbf{w}$ are jointly normal/Gaussian random variables conditioned on the training inputs $\left\{\mathbf{x}_i\right\}$. Let us define the standard data matrix and measurement vector:

$$
X=\left[\begin{array}{c}
\mathbf{x}_1^{\top} \\
\mathbf{x}_2^{\top} \\
\vdots \\
\mathbf{x}_n^{\top}
\end{array}\right], \quad \mathbf{y}=\left[\begin{array}{c}
y_1 \\
y_2 \\
\vdots \\
y_n
\end{array}\right]
$$

In this model, the MAP estimate of $\mathbf{w}$ is given by the Tikhonov regularization counterpart of ridge regression:

$$
\widehat{\mathbf{w}}=\left(X^{\top} X+\Sigma^{-1}\right)^{-1} X^{\top} \mathbf{y} \tag{2}
$$
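To see why this estimate is described as a counterpart of ridge regression, note that choosing an isotropic prior $\Sigma=\frac{1}{\lambda} I$ gives $\Sigma^{-1}=\lambda I$, which is the usual ridge penalty. The sketch below checks this numerically with NumPy. The function names `tikhonov_map` and `ridge`, the random data, and the value of `lam` are assumptions made for illustration and do not come from the original problem.

```python
import numpy as np

def tikhonov_map(X, y, Sigma):
    """MAP estimate (X^T X + Sigma^{-1})^{-1} X^T y for the prior w ~ N(0, Sigma)."""
    A = X.T @ X + np.linalg.inv(Sigma)
    return np.linalg.solve(A, X.T @ y)

def ridge(X, y, lam):
    """Standard ridge solution (X^T X + lam * I)^{-1} X^T y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

rng = np.random.default_rng(1)
n, d, lam = 20, 4, 0.5          # illustrative sizes and penalty
X = rng.standard_normal((n, d))
y = rng.standard_normal(n)

# Isotropic prior Sigma = I / lam, so Sigma^{-1} = lam * I.
w_map = tikhonov_map(X, y, np.eye(d) / lam)
w_ridge = ridge(X, y, lam)
same = np.allclose(w_map, w_ridge)
print("Tikhonov with Sigma = I/lam matches ridge:", same)
```

A general positive-definite $\Sigma$ simply penalizes different directions of $\mathbf{w}$ by different amounts.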

In this question, we explore Tikhonov regularization from the data augmentation perspective.

Define the matrix $\Gamma$ as a $d \times d$ matrix that satisfies $\Gamma^{\top} \Gamma=\Sigma^{-1}$. Consider the following augmented design matrix (data) $\hat{X}$ and augmented measurement vector $\hat{\mathbf{y}}$:

$$
\hat{X}=\left[\begin{array}{c}
X \\
\Gamma
\end{array}\right] \in \mathbb{R}^{(n+d) \times d}, \quad \text { and } \quad \hat{\mathbf{y}}=\left[\begin{array}{c}
\mathbf{y} \\
\mathbf{0}_d
\end{array}\right] \in \mathbb{R}^{n+d}
$$

where $\mathbf{0}_d$ is the zero vector in $\mathbb{R}^d$. Show that the ordinary least squares problem

$$
\underset{\mathbf{w}}{\operatorname{argmin}}\|\hat{\mathbf{y}}-\hat{X} \mathbf{w}\|_2^2
$$

has the same solution as (2).

HINT: Feel free to just use the formula you know for the OLS solution. You don’t have to rederive that. This problem is not intended to be hard or time consuming.
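The equivalence can also be sanity-checked numerically before writing the algebra. The following NumPy sketch is only an illustration under stated assumptions: the random data, the particular $\Sigma$, and the choice of $\Gamma$ as the transposed Cholesky factor of $\Sigma^{-1}$ are not part of the original problem (any $\Gamma$ with $\Gamma^{\top}\Gamma=\Sigma^{-1}$ would do).

```python
import numpy as np

rng = np.random.default_rng(2)
n, d = 30, 3                     # illustrative sizes
X = rng.standard_normal((n, d))
y = rng.standard_normal(n)

# A random symmetric positive-definite prior covariance (assumed for the demo).
B = rng.standard_normal((d, d))
Sigma = B @ B.T + d * np.eye(d)
Sigma_inv = np.linalg.inv(Sigma)

# Cholesky gives Sigma_inv = L L^T, so Gamma = L^T satisfies Gamma^T Gamma = Sigma_inv.
L = np.linalg.cholesky(Sigma_inv)
Gamma = L.T

# Augmented design matrix and measurement vector.
X_hat = np.vstack([X, Gamma])
y_hat = np.concatenate([y, np.zeros(d)])

# Ordinary least squares on the augmented data.
w_aug, *_ = np.linalg.lstsq(X_hat, y_hat, rcond=None)

# Tikhonov / MAP solution computed directly.
w_tik = np.linalg.solve(X.T @ X + Sigma_inv, X.T @ y)

match = np.allclose(w_aug, w_tik)
print("augmented OLS matches Tikhonov solution:", match)
```

The $d$ extra rows act like fictitious data points whose targets are zero, which is how the augmentation pulls $\mathbf{w}$ toward the origin.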
