MY-ASSIGNMENTEXPERT™ can provide ghostwriting, exam-taking, and tutoring services for the math.tamu.edu MATH609 Numerical Analysis course!

MATH609 Course Introduction

Next week (the week starting Oct. 5) is the middle of the semester and, as promised in the syllabus, we shall have a midterm. This will be a take-home exam with a fairly restrictive timeline: the exam will be sent to you on Wednesday, Oct. 7 at 9:00 AM and must be completed and emailed back to me ([email protected]) by 3:00 PM that day. The six-hour window should suffice, as I expect that most of you will be able to essentially finish in under two hours. In light of this, there will be no new homework assigned the week before and only one lecture during the week of the exam.

Prerequisites

I am teaching two versions of 609 this semester. 609-700 is for students in the math department’s distance masters program, while this course, 609-600, is for students in residence at TAMU, College Station. The class homepage for this course is located at: http://www.math.tamu.edu/~pasciak/classes/609-Local

MATH609 Numerical analysis HELP (EXAM HELP, ONLINE TUTOR)

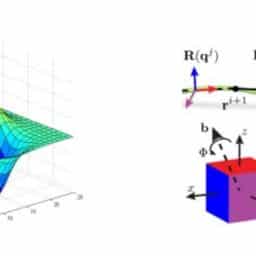

(2) $\left(4+h^2\right) x_{i, j}-x_{i-1, j}-x_{i+1, j}-x_{i, j-1}-x_{i, j+1}=h^2, x_{0, j}=x_{n+1, j}=x_{i, 0}=x_{i, n+1}=0$.

Here $h=1 /(n+1)$ so that the system represents a finite difference approximation of the boundary value problem $-\Delta u+u=1$ in $\Omega=(0,1) \times(0,1)$ and $u=0$ on the boundary of $\Omega$. Take $n=8,16,32$.

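To solve the system $\left(4+h^2\right) x_{i, j}-x_{i-1, j}-x_{i+1, j}-x_{i, j-1}-x_{i, j+1}=h^2$ with $x_{0, j}=x_{n+1, j}=x_{i, 0}=x_{i, n+1}=0$, we can use the Jacobi method, a simple iterative method that converges to the solution of a linear system under certain conditions. Here the coefficient matrix is strictly diagonally dominant, since each diagonal entry $4+h^2$ exceeds the sum of the absolute values of the at most four off-diagonal entries $-1$ in its row, and this is sufficient for the Jacobi method to converge.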

The Jacobi method involves updating each element of the solution vector $x$ based on the current values of its neighbors. Specifically, for each $(i, j)$, we compute:

$$
x_{i, j}^{(k+1)}=\frac{1}{4+h^2}\left(x_{i-1, j}^{(k)}+x_{i+1, j}^{(k)}+x_{i, j-1}^{(k)}+x_{i, j+1}^{(k)}+h^2\right),
$$

where $x_{i, j}^{(k)}$ denotes the $(i, j)$-th element of the solution vector after $k$ iterations.

We can apply this formula repeatedly until the iterates converge to a sufficiently accurate approximation of the true solution. One way to check for convergence is to monitor the size of the residual $r=A x-b$, where $A$ is the coefficient matrix of the system, $x$ is the current approximation of the solution, and $b$ is the right-hand side vector. Once a norm of the residual, for example $\|r\|_2$, falls below a chosen tolerance, we can stop iterating and output the current approximation as the solution.
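The update formula and the residual test can be sketched in plain Python as follows. This is a minimal illustration and not the course's reference solution: the function names `jacobi_sweep`, `residual_norm`, and `jacobi_solve`, the Euclidean norm, and the tolerance `1e-8` are all assumptions made for the example. The unknowns are stored on an $(n+2) \times(n+2)$ grid whose outer ring holds the zero boundary values.

```python
import math

def jacobi_sweep(x, n):
    """Return the next Jacobi iterate for system (2).

    x is an (n+2) x (n+2) list of lists; the outer ring holds the zero
    boundary values and is copied unchanged.
    """
    h2 = (1.0 / (n + 1)) ** 2
    new = [row[:] for row in x]
    for i in range(1, n + 1):
        for j in range(1, n + 1):
            neighbors = x[i - 1][j] + x[i + 1][j] + x[i][j - 1] + x[i][j + 1]
            new[i][j] = (neighbors + h2) / (4.0 + h2)
    return new

def residual_norm(x, n):
    """Euclidean norm of r = A x - b over the n*n interior unknowns."""
    h2 = (1.0 / (n + 1)) ** 2
    total = 0.0
    for i in range(1, n + 1):
        for j in range(1, n + 1):
            neighbors = x[i - 1][j] + x[i + 1][j] + x[i][j - 1] + x[i][j + 1]
            r = (4.0 + h2) * x[i][j] - neighbors - h2
            total += r * r
    return math.sqrt(total)

def jacobi_solve(n, tol=1e-8, max_iter=100000):
    """Iterate from x^0 = 0 until ||A x - b||_2 < tol.

    Returns (x, number of sweeps used). The tolerance and the iteration
    cap are illustrative choices, not values given in the problem.
    """
    x = [[0.0] * (n + 2) for _ in range(n + 2)]
    for k in range(max_iter):
        if residual_norm(x, n) < tol:
            return x, k
        x = jacobi_sweep(x, n)
    return x, max_iter

x, sweeps = jacobi_solve(8)
print("n = 8: sweeps =", sweeps, " residual =", residual_norm(x, 8))
```

Calling `jacobi_solve(16)` or `jacobi_solve(32)` covers the other grid sizes in the problem. Pure-Python loops become slow as $n$ grows, so an array library would be the natural next step for larger grids.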

In all problems take the right-hand side $b=h^2(1,1, \ldots, 1)^t$ and the initial guess $x^0=(0,0, \ldots, 0)^t$ or $x^0=b$.

In the context of iterative methods for linear systems, the choice of initial guess affects how quickly the method reaches an acceptable accuracy: if the initial guess is far from the true solution, more iterations are needed. For a stationary method such as Jacobi, however, whether the iteration converges at all is determined by the spectral radius of the iteration matrix, not by the initial guess.
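For the Jacobi iteration applied to system (2), the convergence rate can be written down explicitly. The following is a standard result for the five-point stencil, not something stated in the problem, and the symbols $G$, $N$, and $\mu_{p, q}$ are notation introduced here. Let $N$ be the neighbor-sum operator, $(N x)_{i, j}=x_{i-1, j}+x_{i+1, j}+x_{i, j-1}+x_{i, j+1}$, so that the Jacobi iteration matrix is $G=N /\left(4+h^2\right)$ and the error $e^{(k)}=x-x^{(k)}$ satisfies $e^{(k+1)}=G e^{(k)}$. The eigenvectors of $N$ are $\sin (p \pi i h) \sin (q \pi j h)$, which gives

$$
\mu_{p, q}=\frac{2 \cos (p \pi h)+2 \cos (q \pi h)}{4+h^2}, \quad p, q=1, \ldots, n, \qquad \rho(G)=\max _{p, q}\left|\mu_{p, q}\right|=\frac{4 \cos (\pi h)}{4+h^2}<1 .
$$

Since $\rho(G)<1$, the iteration converges from any $x^0$. Numerically, $\rho(G) \approx 0.937$ for $n=8$, $\approx 0.982$ for $n=16$, and $\approx 0.995$ for $n=32$. Because $\rho(G)=1-O\left(h^2\right)$, the number of iterations needed for a fixed error reduction grows roughly like $(n+1)^2$.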

In the case of the systems given in this problem, we are told to use $b=h^2(1,1, \ldots, 1)^t$ as the right-hand side vector and $x^0=(0,0, \ldots, 0)^t$ or $x^0=b$ as the initial guess for the solution vector. The choice of $b$ is not particularly important for convergence, as long as it is a valid right-hand side vector for the given system. However, the choice of $x^0$ can have a significant impact on convergence.

If we choose $x^0=(0,0, \ldots, 0)^t$, the initial error equals the true solution itself. If we choose $x^0=b$, every entry of the initial guess is $h^2$, which has the same sign as the solution but is small (at most $1 / 81$ for $n \geq 8$), so it differs only slightly from the zero vector. In some cases this may lead to somewhat faster convergence and fewer iterations, but the difference should not be expected to be large.
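One way to check the effect of the two starting vectors is to count Jacobi sweeps for each. The sketch below does this for $n=8$. The function name `jacobi_iterations`, the stopping rule $\|A x-b\|_2<10^{-8}$, and the iteration cap are assumptions made for illustration and are not part of the problem statement.

```python
import math

def jacobi_iterations(n, x0_value, tol=1e-8, max_iter=100000):
    """Count Jacobi sweeps for system (2) until ||A x - b||_2 < tol.

    Every interior unknown starts at x0_value; the boundary ring stays zero.
    The tolerance and iteration cap are illustrative choices.
    """
    h2 = (1.0 / (n + 1)) ** 2
    x = [[0.0] * (n + 2) for _ in range(n + 2)]
    for i in range(1, n + 1):
        for j in range(1, n + 1):
            x[i][j] = x0_value
    for k in range(max_iter):
        new = [row[:] for row in x]
        res2 = 0.0
        for i in range(1, n + 1):
            for j in range(1, n + 1):
                s = x[i - 1][j] + x[i + 1][j] + x[i][j - 1] + x[i][j + 1]
                r = (4.0 + h2) * x[i][j] - s - h2   # residual of the current iterate
                res2 += r * r
                new[i][j] = (s + h2) / (4.0 + h2)   # Jacobi update
        if math.sqrt(res2) < tol:
            return k                                # x already met the tolerance
        x = new
    return max_iter

n = 8
h2 = (1.0 / (n + 1)) ** 2
iters_zero = jacobi_iterations(n, 0.0)  # x^0 = (0, ..., 0)^t
iters_b = jacobi_iterations(n, h2)      # x^0 = b, whose entries all equal h^2
print("x0 = 0:", iters_zero, "sweeps;  x0 = b:", iters_b, "sweeps")
```

Repeating the run with `n = 16` and `n = 32` shows how the two counts scale with the grid size.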

However, the choice of initial guess also depends on the specific iterative method being used, as well as the properties of the coefficient matrix of the system. For example, some iterative methods may be less sensitive to the choice of initial guess, while others may require a good initial guess to converge at all. Similarly, the properties of the coefficient matrix (such as its condition number) can affect the convergence behavior of the method, and may require specific techniques (such as preconditioning) to improve convergence.

In summary, while the choice of initial guess can have a significant impact on convergence, it is not always straightforward to determine the best choice without additional information about the system and the iterative method being used.
