Is convex optimization hard to learn?

Let a top student and seasoned pro get you up to speed!

How can you learn stochastic processes quickly, and how do you get started?

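The first step is to understand what a stochastic process is and what its key parameters are, and to know that stochastic processes fall mainly into the following classes:

1. Gaussian processes (the main tool is correlation)

2. Independent-increment processes (the Poisson process, Brownian motion)

3. Markov chains (the Markov property)

4. Martingales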

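If you want to work in finance on Wall Street, SDEs/SPDEs (built on PDEs, probability theory, and stochastic analysis) are the first hurdle in interviews, even though you may well never use them on the job. Much of the research on optimal pricing is based on finding martingales. Martingales also matter a great deal in theoretical research: anyone comfortable with martingale techniques will never run short of papers. The renowned Jean Bourgain was a master of martingale methods and used them to settle several open problems.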

In operations research and optimization, queueing theory and Markov chains are enormously useful in OR/OM.

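In information theory, NLP applications (such as machine translation and handwriting recognition) can be built on hidden Markov models and Bayesian models.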

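To do well in stochastic processes, a good textbook and a good advisor are essential. A good textbook reads like a story, explaining deep ideas in plain terms. For an introduction, we recommend Sheldon Ross's classic *Stochastic Processes*, which many universities use as their course text and which requires only elementary probability and calculus.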

The key ideas are conditional expectation and Bayes' formula; the whole course keeps returning to this core technique.

Stochastic process ghostwriting | random process ghostwriting | Markov chain ghostwriting | Martingale ghostwriting

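UpriviateTA ghostwriting: we work in the fields we are best at! UpriviateTA's experts are well versed in the classic papers and equation models and have worked through complete reading lists. For example, ghostwriting in Matrix theory, which is closely related to linear algebra, and in matrix inequalities of all kinds, such as the Weyl inequality, the Min-Max principle, the polynomial method, and the rank trick, is no problem at all. Computations involving singular value decomposition, LLS, the Jordan normal form, QR decomposition, optimization problems based on advanced LLS, and even linear programming methods (the key to the solution of sphere packing in dimensions 8 and 24) are second nature to us. As some experts put it, many of the methods used in linear algebra assignments and papers grew out of the ongoing effort to solve a few central problems of linear algebra, such as GIT, the problem of geometric invariants studied since Klein's time, and the optimization problems studied since Newton's time (the cycloid). We also strongly recommend UpriviateTA's linear algebra ghostwriting: since 2017 we have completed nearly a thousand similar assignments.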

Below are the answers to a typical set of stochastic process problems.


Consider a random process $X(t)$ defined by

$$

X(t)=\sin \left(2 \pi f_{c} t\right)

$$

in which the frequency $f_{c}$ is a random variable uniformly distributed over the range $[0, W]$. Show that $X(t)$ is nonstationary. Hint: Examine specific sample functions of the random process $X(t)$ for the frequencies $f_{c}=W / 4$, $W / 2$, and $W$, say.

An easy way to solve this problem is to find the mean of the random process $X(t)$:

$$

E[X(t)]=\frac{1}{W} \int_{0}^{W} \sin (2 \pi f t) d f=\frac{1}{2 \pi W t}[1-\cos (2 \pi W t)], \quad t \neq 0

$$

Clearly $E[X(t)]$ is a function of time (for example, it equals $2 / \pi$ at $t=1 /(2 W)$ but $0$ at $t=1 / W$), and hence the process $X(t)$ is not stationary.
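As a quick numerical sanity check (a sketch, not part of the original solution), the code below compares a Monte Carlo average of $\sin(2\pi f_c t)$, with $f_c$ drawn uniformly from $[0, W]$, against the closed form $(1-\cos 2\pi W t)/(2\pi W t)$ obtained by carrying out the integral. The value $W = 1$ and the function names are illustrative assumptions.

```python
import math
import random


def mean_closed_form(t: float, W: float) -> float:
    """E[X(t)] = (1 - cos(2*pi*W*t)) / (2*pi*W*t); the t -> 0 limit is 0."""
    if t == 0.0:
        return 0.0
    x = 2.0 * math.pi * W * t
    return (1.0 - math.cos(x)) / x


def mean_monte_carlo(t: float, W: float, n: int = 100_000, seed: int = 0) -> float:
    """Sample average of sin(2*pi*f_c*t) with f_c drawn uniformly from [0, W]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        fc = rng.uniform(0.0, W)
        total += math.sin(2.0 * math.pi * fc * t)
    return total / n


if __name__ == "__main__":
    W = 1.0  # illustrative bandwidth (assumption)
    for t in (0.0, 0.1, 0.5, 1.0):
        print(f"t={t:.2f}  closed form={mean_closed_form(t, W):+.4f}  "
              f"Monte Carlo={mean_monte_carlo(t, W):+.4f}")
```

The two columns should agree to within Monte Carlo error, and both change with $t$, which is exactly the nonstationarity argued above.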

A stationary Gaussian process $X(t)$ has zero mean and power spectral density $S_{X}(f)$. Determine the probability density function of a random variable obtained by observing the process $X(t)$ at some time $t_{k}$.

SOLUTION: Let $\mu_{x}$ be the mean and $\sigma_{x}^{2}$ be the variance of the random variable $X_{k}$ obtained by observing the random process at time $t_{k}$. Then,

$$

\begin{array}{c}

\mu_{x}=0 \\

\sigma_{x}^{2}=E\left[X_{k}^{2}\right]-\mu_{x}^{2}=E\left[X_{k}^{2}\right]

\end{array}

$$

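Because the process is stationary, $E\left[X_{k}^{2}\right]=R_{X}(0)$, and by the Wiener-Khinchin relation $R_{X}(0)$ is the total area under the power spectral density:

$$

\sigma_{x}^{2}=E\left[X_{k}^{2}\right]=R_{X}(0)=\int_{-\infty}^{\infty} S_{X}(f) d f

$$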

Since $X_{k}$ is Gaussian with zero mean and variance $\sigma_{x}^{2}$, its PDF is

$$

f_{X_{k}}(x)=\frac{1}{\sqrt{2 \pi \sigma_{x}^{2}}} \exp \left(\frac{-x^{2}}{2 \sigma_{x}^{2}}\right)

$$
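A minimal sketch of the recipe above: integrate a power spectral density numerically to get $\sigma_x^2 = R_X(0)$, then plug it into the Gaussian PDF. The problem leaves $S_X(f)$ general, so the Gaussian-shaped PSD $e^{-f^2/(2b^2)}$ with $b = 2$ used here is a hypothetical choice, picked because its exact area $b\sqrt{2\pi}$ is known and can be used as a check.

```python
import math
from typing import Callable


def trapezoid(g: Callable[[float], float], lo: float, hi: float, n: int = 4001) -> float:
    """Composite trapezoidal rule for the integral of g over [lo, hi] using n grid points."""
    h = (hi - lo) / (n - 1)
    total = 0.5 * (g(lo) + g(hi))
    for i in range(1, n - 1):
        total += g(lo + i * h)
    return total * h


def variance_from_psd(psd: Callable[[float], float], f_max: float) -> float:
    """sigma_x^2 = R_X(0) = integral of S_X(f) df, truncated to [-f_max, f_max]."""
    return trapezoid(psd, -f_max, f_max)


def gaussian_pdf(x: float, var: float) -> float:
    """Zero-mean Gaussian density with variance var."""
    return math.exp(-x * x / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def example_psd(f: float, b: float = 2.0) -> float:
    """Hypothetical Gaussian-shaped PSD; its exact area is b * sqrt(2*pi)."""
    return math.exp(-f * f / (2.0 * b * b))


if __name__ == "__main__":
    var = variance_from_psd(example_psd, f_max=20.0)
    print("sigma_x^2 (numerical):", var)
    print("sigma_x^2 (exact):    ", 2.0 * math.sqrt(2.0 * math.pi))
    print("f_Xk(0):", gaussian_pdf(0.0, var))
```

Truncating at $\pm 10b$ is an arbitrary but safe cutoff for this PSD; a PSD with heavier tails would need a wider range.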

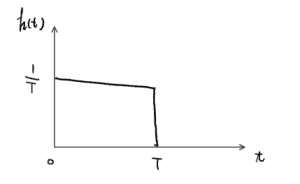

A stationary Gaussian process $X(t)$ with zero mean and power spectral density $S_{X}(f)$ is applied to a linear filter whose impulse response $h(t)$ is shown in the figure above: a rectangular pulse of amplitude $1 / T$ for $0 \leq t \leq T$ and zero elsewhere. A sample $Y$ is taken of the random process at the filter output at time $T$.

(a) Determine the mean and variance of $Y$.

The filter output is

$$

\begin{aligned}

Y(t) &=\int_{-\infty}^{\infty} h(\tau) X(t-\tau) d \tau \\

&=\frac{1}{T} \int_{0}^{T} X(t-\tau) d \tau

\end{aligned}

$$

Setting $t=T$ and substituting $u=T-\tau$, the sample value $Y=Y(T)$ equals

$$

Y=\frac{1}{T} \int_{0}^{T} X(u) d u

$$

The mean of $Y$ is therefore

$$

\begin{aligned}

E[Y] &=E\left[\frac{1}{T} \int_{0}^{T} X(u) d u\right] \\

&=\frac{1}{T} \int_{0}^{T} E[X(u)] d u \\

&=0

\end{aligned}

$$

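Since $E[Y]=0$, the variance of $Y$ equals $R_{Y}(0)$, the total area under the output power spectral density $S_{Y}(f)=S_{X}(f)|H(f)|^{2}$:

$$

\begin{aligned}

\sigma_{Y}^{2} &=E\left[Y^{2}\right]-E[Y]^{2} \\

&=R_{Y}(0) \\

&=\int_{-\infty}^{\infty} S_{Y}(f) d f \\

&=\int_{-\infty}^{\infty} S_{X}(f)|H(f)|^{2} d f

\end{aligned}

$$

where $H(f)$ is the transfer function of the filter, that is, the Fourier transform of $h(t)$:

$$

\begin{aligned}

H(f) &=\int_{-\infty}^{\infty} h(t) \exp (-j 2 \pi f t) d t \\

&=\frac{1}{T} \int_{0}^{T} \exp (-j 2 \pi f t) d t \\

&=\operatorname{sinc}(f T) \exp (-j \pi f T)

\end{aligned}

$$

with $\operatorname{sinc}(x)=\sin (\pi x) /(\pi x)$.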

Therefore, since $|H(f)|^{2}=\operatorname{sinc}^{2}(f T)$,

$$

\sigma_{Y}^{2}=\int_{-\infty}^{\infty} S_{X}(f) \operatorname{sinc}^{2}(f T) d f

$$
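A sketch that checks two pieces of part (a) numerically. It compares a trapezoidal-rule evaluation of $H(f)=\frac{1}{T}\int_0^T e^{-j2\pi f t}\,dt$ with the closed form $\operatorname{sinc}(fT)e^{-j\pi fT}$, then evaluates $\sigma_Y^2$ for the special case of a white input $S_X(f)=N_0/2$, which is an assumption the problem does not make. In that case $\int \operatorname{sinc}^2(fT)\,df = 1/T$ (equivalently, by Parseval, $\int h^2(t)\,dt = 1/T$), so $\sigma_Y^2 = N_0/(2T)$. The value $T = 2$ and the function names are illustrative.

```python
import cmath
import math


def sinc(x: float) -> float:
    """Normalized sinc: sin(pi*x) / (pi*x), with sinc(0) = 1."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def H_numeric(f: float, T: float, n: int = 2001) -> complex:
    """Trapezoidal approximation of H(f) = (1/T) * integral_0^T exp(-j*2*pi*f*t) dt."""
    h = T / (n - 1)
    total = 0.5 * (1.0 + cmath.exp(-2j * math.pi * f * T))
    for i in range(1, n - 1):
        total += cmath.exp(-2j * math.pi * f * i * h)
    return total * h / T


def H_closed(f: float, T: float) -> complex:
    """Closed form H(f) = sinc(f*T) * exp(-j*pi*f*T)."""
    return sinc(f * T) * cmath.exp(-1j * math.pi * f * T)


def sigma_Y2_white(N0: float, T: float, x_max: float = 500.0, n: int = 50_001) -> float:
    """sigma_Y^2 = integral of (N0/2) * sinc^2(f*T) df for an assumed white input.

    Substituting x = f*T (df = dx/T) and using the evenness of sinc^2 gives
    (N0/2) * (2/T) * integral_0^inf sinc^2(x) dx, truncated here at x_max.
    """
    h = x_max / (n - 1)
    total = 0.5 * (sinc(0.0) ** 2 + sinc(x_max) ** 2)
    for i in range(1, n - 1):
        total += sinc(i * h) ** 2
    return (N0 / 2.0) * (2.0 / T) * total * h


if __name__ == "__main__":
    T = 2.0  # illustrative averaging time (assumption)
    for f in (0.0, 0.25, 1.3):
        print(f, H_numeric(f, T), H_closed(f, T))
    print("sigma_Y^2 numeric:", sigma_Y2_white(1.0, T), " N0/(2T):", 1.0 / (2.0 * T))
```

The truncation at `x_max` leaves out a tail of roughly $1/(\pi^2 x_{\max})$ of the sinc-squared area, so the numerical variance sits slightly below $N_0/(2T)$.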

(b) What is the probability density function of $Y$?

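Since the filter is linear and its input is Gaussian, the filter output is a Gaussian process, so the sample $Y$ is a zero-mean Gaussian random variable with the variance $\sigma_{Y}^{2}$ found in part (a). Hence the PDF of $Y$ is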

$$

f_{Y}(y)=\frac{1}{\sqrt{2 \pi \sigma_{Y}^{2}}} \exp \left(\frac{-y^{2}}{2 \sigma_{Y}^{2}}\right)

$$

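Let $X_{n}$ be WSS and $X_{n}=Y_{n+n_{o}}$ for some fixed $n_{o} \geq 0$, where $Y_{n}$ has power spectral density

$$

S_{Y}(z)=\frac{1-a^{2}}{\left(1-a z^{-1}\right)(1-a z)}

$$

for some $|a|<1$. Find the Wiener filter for $\hat{X}_{n}=L\left[X_{n} \mid Y^{n}\right]$, the linear least-squares estimate of $X_{n}$ given the observations $Y^{n}$ up to time $n$, and compute the minimum error.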

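The key quantities are the causal spectral factor $H(z)$, which satisfies $S_{Y}(z)=H(z) H\left(z^{-1}\right)$, and the cross spectrum, which follows from $R_{x y}(k)=E\left[X_{n+k} Y_{n}\right]=R_{y}\left(k+n_{o}\right)$: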

$$

\begin{array}{l}

H(z)=\frac{\sqrt{1-a^{2}}}{1-a z^{-1}} \\

S_{x y}(z)=z^{n_{o}} S_{y}(z)

\end{array}

$$
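A sketch that verifies the spectral factorization numerically on the unit circle $z=e^{j\omega}$, using the illustrative value $a=0.8$: $|H(e^{j\omega})|^2$ should equal $S_Y(e^{j\omega})$, and the inverse DTFT of $S_Y$ should give $R_Y(k)=a^{|k|}$, in particular $R_Y(0)=1$. The inverse transform is approximated with an equispaced rectangle rule, which is very accurate for smooth periodic integrands; the function names are hypothetical.

```python
import cmath
import math


def psd_Y(omega: float, a: float) -> float:
    """S_Y(z) = (1 - a^2) / ((1 - a z^{-1})(1 - a z)) evaluated at z = e^{j*omega}."""
    z = cmath.exp(1j * omega)
    return ((1.0 - a * a) / ((1.0 - a / z) * (1.0 - a * z))).real


def spectral_factor(omega: float, a: float) -> complex:
    """Causal factor H(z) = sqrt(1 - a^2) / (1 - a z^{-1}) at z = e^{j*omega}."""
    z = cmath.exp(1j * omega)
    return math.sqrt(1.0 - a * a) / (1.0 - a / z)


def autocorr_from_psd(k: int, a: float, n: int = 256) -> float:
    """R_Y(k) = (1/2pi) * integral over one period of S_Y(e^{jw}) e^{jwk} dw.

    S_Y is real and even in w, so only the cosine part of e^{jwk} survives.
    """
    total = 0.0
    for m in range(n):
        w = 2.0 * math.pi * m / n
        total += psd_Y(w, a) * math.cos(w * k)
    return total / n


if __name__ == "__main__":
    a = 0.8  # illustrative value with |a| < 1
    for k in range(4):
        print(k, autocorr_from_psd(k, a), a ** k)
```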

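Here $[\cdot]_{+}$ denotes the causal part, that is, the terms with nonpositive powers of $z$. Expanding $z^{n_{o}} H(z)=\sqrt{1-a^{2}} \sum_{k \geq 0} a^{k} z^{n_{o}-k}$ and keeping only the terms with $k \geq n_{o}$ gives $\left[z^{n_{o}} H(z)\right]_{+}=\sqrt{1-a^{2}}\, a^{n_{o}} /\left(1-a z^{-1}\right)$. Thus the causal Wiener filter is given by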

$$

\begin{aligned}

W(z) &=H^{-1}(z)\left[H(z) S_{x y}(z) S_{y}^{-1}(z)\right]_{+} \\

&=H^{-1}(z)\left[z^{n_{o}} H(z)\right]_{+} \\

&=\frac{1-a z^{-1}}{\sqrt{1-a^{2}}}\left[z^{n_{o}} \frac{\sqrt{1-a^{2}}}{1-a z^{-1}}\right]_{+} \\

&=\left(1-a z^{-1}\right) a^{n_{o}} \frac{1}{1-a z^{-1}} \\

&=a^{n_{o}}

\end{aligned}

$$
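The causal-part step can also be carried out in the time domain, as a sketch: the impulse response of $z^{n_o}H(z)$ is $h(k+n_o)$ with $h(k)=\sqrt{1-a^2}\,a^k$, taking $[\cdot]_+$ keeps the samples with $k \ge 0$, and $H^{-1}(z)=(1-az^{-1})/\sqrt{1-a^2}$ is a two-tap FIR filter. The resulting taps should be $a^{n_o}$ at lag 0 and zero elsewhere, consistent with $W(z)=a^{n_o}$. The function name and the truncation length are illustrative choices.

```python
import math


def wiener_predictor_taps(a: float, n0: int, length: int = 20) -> list[float]:
    """Taps w(k), k = 0..length-1, of W(z) = H^{-1}(z) [z^{n0} H(z)]_+.

    Assumes H(z) = sqrt(1 - a^2) / (1 - a z^{-1}) with |a| < 1.
    """
    c = math.sqrt(1.0 - a * a)
    # Causal part of z^{n0} H(z): the impulse response advanced by n0 samples, indices k >= 0 only.
    g_plus = [c * a ** (k + n0) for k in range(length)]
    # H^{-1}(z) = (1 - a z^{-1}) / c is a two-tap FIR filter; apply it by direct convolution.
    w = []
    for k in range(length):
        prev = g_plus[k - 1] if k >= 1 else 0.0
        w.append((g_plus[k] - a * prev) / c)
    return w


if __name__ == "__main__":
    print(wiener_predictor_taps(0.7, 3)[:5])
```

For example, `wiener_predictor_taps(0.7, 3)` should return approximately $0.343 = 0.7^3$ followed by zeros (up to floating-point rounding).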

Thus

$$

w(n)=a^{n_{o}} \delta(n) \quad \text { and } \quad \hat{x}(n)=a^{n_{o}} y(n)=a^{n_{o}} x\left(n-n_{o}\right)

$$

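By the projection principle, the error $x(n)-\hat{x}(n)$ is orthogonal to $\hat{x}(n)$. Moreover, $R_{x}(k)=R_{y}(k)=a^{|k|}$ (the inverse transform of $S_{Y}(z)$), so $R_{x}(0)=1$ and $R_{x}\left(n_{o}\right)=a^{n_{o}}$. The minimum error is therefore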

$$

\begin{aligned}

\epsilon_{\min }(n)=E\left[(x(n)-\hat{x}(n))^{2}\right] &=E\left[(x(n)-\hat{x}(n))(x(n)-\hat{x}(n))^{*}\right] \\

&=E\left[(x(n)-\hat{x}(n)) x(n)^{*}\right] \qquad (\text{projection principle}) \\

&=E\left[x(n) x^{*}(n)\right]-E\left[x(n) \hat{x}^{*}(n)\right] \\

&=R_{x}(0)-E\left[x(n) a^{n_{o}} x\left(n-n_{o}\right)\right] \\

&=R_{x}(0)-a^{n_{o}} R_{x}\left(n_{o}\right) \\

&=1-a^{2 n_{o}}

\end{aligned}

$$
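A Monte Carlo sketch of the minimum error. It assumes, consistently with $S_Y(z)=H(z)H(z^{-1})$, that $Y_n$ is generated as the AR(1) process $Y_n=aY_{n-1}+\sqrt{1-a^2}\,e_n$ driven by unit-variance white Gaussian noise $e_n$ and started in its stationary distribution. The empirical mean-squared error of $\hat{X}_n=a^{n_o}Y_n$ should be close to $1-a^{2n_o}$, and any other gain should do worse. The parameter values and function names are illustrative.

```python
import math
import random
from typing import Optional


def simulate_ar1(a: float, n: int, seed: int = 1) -> list[float]:
    """Y_k = a*Y_{k-1} + sqrt(1-a^2)*e_k with e_k ~ N(0,1); Y_0 ~ N(0,1) (stationary start)."""
    rng = random.Random(seed)
    c = math.sqrt(1.0 - a * a)
    y = [rng.gauss(0.0, 1.0)]
    for _ in range(n - 1):
        y.append(a * y[-1] + c * rng.gauss(0.0, 1.0))
    return y


def empirical_prediction_mse(a: float, n0: int, n: int = 200_000, seed: int = 1,
                             gain: Optional[float] = None) -> float:
    """Average of (X_k - g*Y_k)^2 with X_k = Y_{k+n0}; g defaults to the Wiener gain a^n0."""
    g = a ** n0 if gain is None else gain
    y = simulate_ar1(a, n + n0, seed)
    return sum((y[k + n0] - g * y[k]) ** 2 for k in range(n)) / n


if __name__ == "__main__":
    a, n0 = 0.8, 2  # illustrative parameters
    print("empirical MSE:", empirical_prediction_mse(a, n0))
    print("1 - a^(2 n0): ", 1.0 - a ** (2 * n0))
```

For a general gain $g$ the error is $1-2ga^{n_o}+g^2$, which is minimized at $g=a^{n_o}$; the simulation only illustrates this and does not replace the derivation.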

For more Stochastic Process ghostwriting examples, see here.

E-mail: [email protected] WeChat: shuxuejun

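uprivate™ is a professional ghostwriting company serving Chinese international students around the world

Providing stable, reliable ghostwriting services for North America, Australia, and the UK

Specializing in assignment ghostwriting for mathematics, statistics, finance, economics, computer science, and physics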