Module 1

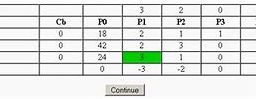

1. Examine the following output from the summary(lm()) (similar to Lecture 02):

        ##
        ## Call:
        ## lm(formula = Mobility ~ Income + Gini + Middle_class + Violent_crime,
        ##     data = mob)
        ##
        ## Residuals:
        ##       Min        1Q    Median        3Q       Max
        ## -0.082921 -0.025877 -0.009061  0.017791  0.211153
        ##
        ## Coefficients:
        ##                 Estimate Std. Error t value Pr(>|t|)
        ## (Intercept)    2.019e-02  2.568e-02   0.786  0.43202
        ## Income         1.029e-07  2.747e-07   0.375  0.70813
        ## Gini          -1.282e-01  2.845e-02  -4.505 7.82e-06 ***
        ## Middle_class   2.406e-01  2.928e-02   8.218 1.05e-15 ***
        ## Violent_crime -3.123e+00  1.070e+00  -2.920  0.00362 **
        ## ---
        ## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
        ##
        ## Residual standard error: 0.03886 on 677 degrees of freedom
        ##   (59 observations deleted due to missingness)
        ## Multiple R-squared:  0.3631, Adjusted R-squared:  0.3593
        ## F-statistic: 96.49 on 4 and 677 DF,  p-value: < 2.2e-16

For each statement, indicate whether it is true or false.

a. Based only on the $p$-value, we should remove Income to improve expected test MSE.

b. Based only on the $p$-value, we should include Gini to improve expected test MSE.

c. Adding another variable will definitely increase $R^{2}$.

d. Adding another variable will definitely increase AIC.

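FALSE/FALSE/TRUE/FALSE. One should not use $p$-values to select predictive models. Adding a covariate never decreases $R^{2}$, and it strictly increases $R^{2}$ unless the new variable is exactly uncorrelated with the current residuals, which essentially never happens with real data. AIC may increase or decrease (can’t tell without more information).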
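The claims about $R^{2}$ and AIC can be checked on a toy example. The sketch below is only an illustration under stated assumptions: the data are made up, the helper `simple_fit_stats` is hypothetical, and AIC is taken to be the Gaussian form $n \log(\mathrm{RSS}/n) + 2k$, which drops an additive constant. It compares an intercept-only model with two models that each add one variable.

```python
import math

def simple_fit_stats(x, y):
    """Return (R^2, AIC up to a constant) for y ~ 1 + x, or y ~ 1 if x is None."""
    n = len(y)
    y_bar = sum(y) / n
    tss = sum((yi - y_bar) ** 2 for yi in y)
    if x is None:
        rss, k = tss, 1  # intercept only
    else:
        x_bar = sum(x) / n
        sxx = sum((xi - x_bar) ** 2 for xi in x)
        sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
        rss, k = tss - sxy ** 2 / sxx, 2  # intercept plus one slope
    r2 = 1 - rss / tss
    aic = n * math.log(rss / n) + 2 * k  # Gaussian AIC, constant dropped
    return r2, aic

# Made-up data: x_good tracks y closely, x_noise barely does.
y = [1.0, 2.1, 2.9, 4.2, 5.1, 5.8]
x_good = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x_noise = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]

r2_null, aic_null = simple_fit_stats(None, y)
r2_good, aic_good = simple_fit_stats(x_good, y)
r2_noise, aic_noise = simple_fit_stats(x_noise, y)

print(f"null : R2={r2_null:.3f}  AIC={aic_null:.2f}")
print(f"good : R2={r2_good:.3f}  AIC={aic_good:.2f}")
print(f"noise: R2={r2_noise:.3f}  AIC={aic_noise:.2f}")
```

In both cases $R^{2}$ goes up, but AIC drops only for the useful variable; for the nearly irrelevant one the penalty term outweighs the small reduction in RSS.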

Suppose I want to estimate $E[Y \mid X]$ (the regression function). Is it possible to make the prediction risk (expected squared error) arbitrarily small by allowing our estimate $\hat{f}(X)$ to be more complex?

No. While we can make the training error arbitrarily small, making $\hat{f}$ more complex may reduce bias at the expense of variance, and it does not affect the irreducible (intrinsic) error.

State two advantages of using leave-one-out cross-validation (LOOCV) rather than K-fold CV.

- LOOCV has lower bias as an estimate of prediction risk.

- LOOCV may be easier to compute (closed-form if a linear smoother).

- If there are categorical predictors, K-fold may “drop levels”. This is super weird/annoying. LOOCV is less likely to do this.
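The closed-form point can be illustrated with simple linear regression, which is a linear smoother. For such a fit, the LOOCV score equals $\frac{1}{n} \sum_{i}\left(\frac{y_{i}-\hat{y}_{i}}{1-S_{ii}}\right)^{2}$, where $S_{ii}=\frac{1}{n}+\frac{(x_{i}-\bar{x})^{2}}{\sum_{j}(x_{j}-\bar{x})^{2}}$ is the leverage. The sketch below uses made-up data and hypothetical helper names, and compares the shortcut with refitting $n$ times.

```python
def fit_line(x, y):
    """Least-squares intercept and slope for y ~ 1 + x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    return y_bar - slope * x_bar, slope

def loocv_brute_force(x, y):
    """Refit n times, each time leaving out one observation."""
    n = len(x)
    total = 0.0
    for i in range(n):
        b0, b1 = fit_line(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
        total += (y[i] - (b0 + b1 * x[i])) ** 2
    return total / n

def loocv_shortcut(x, y):
    """Single fit; rescale each residual by 1 / (1 - leverage)."""
    n = len(x)
    x_bar = sum(x) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b0, b1 = fit_line(x, y)
    total = 0.0
    for xi, yi in zip(x, y):
        leverage = 1 / n + (xi - x_bar) ** 2 / sxx
        total += ((yi - (b0 + b1 * xi)) / (1 - leverage)) ** 2
    return total / n

# Made-up data for illustration only.
x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
y = [1.2, 1.9, 3.2, 3.8, 5.1, 6.3, 6.8, 8.4]

cv_brute = loocv_brute_force(x, y)
cv_short = loocv_shortcut(x, y)
print(cv_brute, cv_short)  # the two values agree up to rounding error
```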

Given a data set $y_{1}, \ldots, y_{n}$, the function $f: \mathbb{R} \rightarrow \mathbb{R}$ given by $f(a)=\frac{1}{n} \sum_{i=1}^{n}\left(y_{i}-a\right)^{2}$ is minimized at $a_{0}=\overline{y_{n}}$. The last sentence is:

a. always true

b. true only if $\overline{y_{n}} \geq 0$

c. true only if $\overline{y_{n}} \leq 0$

d. true only if $\sum_{i=1}^{n} y_{i}^{2} \leq \sum_{i=1}^{n}\left(y_{i}-\overline{y_{n}}\right)^{2}$

This is always true: $f$ is convex, so taking the derivative, setting it to 0, and solving gives the minimizer.
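Written out, the derivative step is:

$$

f^{\prime}(a)=-\frac{2}{n} \sum_{i=1}^{n}\left(y_{i}-a\right)=-2\left(\overline{y_{n}}-a\right), \qquad f^{\prime \prime}(a)=2>0

$$

so $f^{\prime}\left(a_{0}\right)=0$ exactly when $a_{0}=\overline{y_{n}}$, and the positive second derivative confirms that this is the global minimum, whatever the sign of $\overline{y_{n}}$.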

(Challenging) Suppose I have 100 observations from the linear model

$$

Y_{i}=\beta_{0}+\sum_{j=1}^{p} x_{i j} \beta_{j}+\epsilon_{i}

$$

where the $\epsilon_{i}$ are independent and identically distributed Gaussian with mean 0 and variance $\sigma^{2}$. I want to predict a new observation $Y_{101}$. I predict with $\widehat{Y}_{101}=0$. What is my expected test MSE?

$$

\operatorname{MSE}(0)=E\left[\left(Y_{101}-0\right)^{2}\right]=\operatorname{bias}^{2}(0)+\operatorname{Var}(0)+\text{irreducible error}=\left(-\beta_{0}-\sum_{j=1}^{p} x_{101, j} \beta_{j}\right)^{2}+0+\sigma^{2}

$$

Alternatively, one could use:

$$

E\left[\left(Y_{101}-0\right)^{2}\right]=E\left[Y_{101}^{2}\right]=E\left[Y_{101}\right]^{2}+\operatorname{Var}\left(Y_{101}\right)=\left(\beta_{0}+\sum_{j=1}^{p} x_{101, j} \beta_{j}\right)^{2}+\sigma^{2}

$$

to get the same answer.

This question combined two concepts: the bias/variance/irreducible error decomposition with the definitions of bias and variance. Students last year found it very challenging.
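A quick Monte Carlo check of this result is sketched below. All numbers (`beta0`, `beta`, `x_new`, `sigma`) are hypothetical values chosen for illustration; the problem itself leaves them unspecified.

```python
import random

random.seed(0)

# Hypothetical parameter values; the problem does not specify them.
beta0 = 0.5
beta = [1.0, -2.0]
x_new = [1.5, 0.5]   # predictors for observation 101
sigma = 1.0

# E[Y_101] = beta0 + sum_j x_{101,j} * beta_j
mean_y = beta0 + sum(b * x for b, x in zip(beta, x_new))

# Theoretical MSE of always predicting 0: squared bias + 0 variance + sigma^2
theory = mean_y ** 2 + sigma ** 2

# Simulate many new observations and average the squared error of predicting 0.
n_draws = 200_000
mc = sum((random.gauss(mean_y, sigma) - 0.0) ** 2 for _ in range(n_draws)) / n_draws

print(f"theory = {theory:.3f}, simulated = {mc:.3f}")
```

The simulated value should land close to the theoretical one, and the 100 training observations never enter the calculation because the prediction ignores them.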

Module 2

Define what it means for a regression method to be a “linear smoother”.

A “linear smoother” is a method for which there exists some matrix $\mathbf{S}$, which may depend on the predictors but not on $\mathbf{y}$, such that the fitted values (in-sample predictions) $\widehat{\mathbf{y}}$ can be produced by the formula $\widehat{\mathbf{y}}=\mathbf{S y}$.
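As a small illustration of the definition, the sketch below builds $\mathbf{S}$ for a running-mean smoother (each fitted value is the average of an observation and its immediate neighbours). This smoother and the helper names are chosen here for illustration and are not taken from the course materials.

```python
def running_mean_matrix(n, half_width=1):
    """Smoother matrix S for a running mean over indices i-half_width..i+half_width."""
    S = []
    for i in range(n):
        lo, hi = max(0, i - half_width), min(n - 1, i + half_width)
        weight = 1.0 / (hi - lo + 1)  # equal weight on each neighbour in the window
        S.append([weight if lo <= j <= hi else 0.0 for j in range(n)])
    return S

def smooth(S, y):
    """Fitted values y_hat = S y."""
    return [sum(s_ij * y_j for s_ij, y_j in zip(row, y)) for row in S]

y = [1.0, 3.0, 2.0, 5.0, 4.0]
S = running_mean_matrix(len(y))   # built without looking at y
y_hat = smooth(S, y)
print(y_hat)

# Linearity: smoothing 2*y gives 2*y_hat, because S does not depend on y.
y_hat_doubled = smooth(S, [2 * yi for yi in y])
```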

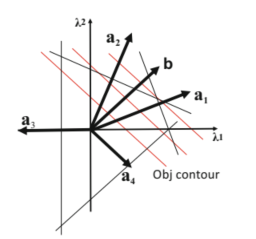

Consider a classification problem with 2 classes and a 2-dimensional vector of predictor variables. Assume that the class conditional densities are Gaussian with mean vectors $\mu_{1}=(1,1)^{\top}$ and $\mu_{0}=(-0.5,-0.5)^{\top}$ and common covariance matrix $\Sigma=\left[\begin{array}{cc}1 / 2 & 0 \\ 0 & 1 / 4\end{array}\right]$. Recall that the density of a multivariate Gaussian random variable $W$ in $p$ dimensions with mean $\mu$ and covariance $A$ is given by

$$

f(w)=\frac{1}{(2 \pi)^{p / 2}|A|^{1 / 2}} \exp \left(-\frac{1}{2}(w-\mu)^{\top} A^{-1}(w-\mu)\right) .

$$

Suppose $\operatorname{Pr}(Y=1)=0.75$. To which class would the Bayes Classifier assign the point $x=(0.5, 0.5)^{\top}$?

a. Class 1

b. Class 0

c. We do not have enough information to answer.

d. $\operatorname{Pr}(Y=1 \mid X=x)=\operatorname{Pr}(Y=0 \mid X=x)=1 / 2$ (we’re indifferent, so flip a coin)

Class 1 (a) is correct. We have $f_{1}(x)>f_{0}(x)$; in fact $f_{1}(x) / f_{0}(x)=e^{2.25} \approx 9.5$, so this would be true even if $\operatorname{Pr}(Y=1)$ were somewhat smaller than 0.5 (you have to calculate the values to see this).
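The calculation can be sketched directly from the density formula. Because $\Sigma$ is diagonal, the quadratic form reduces to a sum of squared differences divided by the variances; the helper name `gaussian_density_diag` is hypothetical.

```python
import math

def gaussian_density_diag(x, mu, variances):
    """Multivariate Gaussian density for a diagonal covariance matrix."""
    p = len(x)
    det = math.prod(variances)
    quad = sum((xi - mi) ** 2 / v for xi, mi, v in zip(x, mu, variances))
    return math.exp(-0.5 * quad) / ((2 * math.pi) ** (p / 2) * math.sqrt(det))

x = (0.5, 0.5)
variances = (0.5, 0.25)          # diagonal of Sigma
prior_1, prior_0 = 0.75, 0.25

f1 = gaussian_density_diag(x, (1.0, 1.0), variances)
f0 = gaussian_density_diag(x, (-0.5, -0.5), variances)

# Bayes rule: posterior is proportional to prior times class-conditional density.
posterior_1 = prior_1 * f1 / (prior_1 * f1 + prior_0 * f0)
predicted_class = 1 if posterior_1 > 0.5 else 0

# Smallest prior on class 1 for which class 1 would still be chosen.
prior_threshold = f0 / (f0 + f1)

print(f"f1/f0 = {f1 / f0:.3f}, Pr(Y=1 | x) = {posterior_1:.4f}")
print(f"class 1 is chosen whenever Pr(Y=1) > {prior_threshold:.4f}")
```

With the stated prior of 0.75, the posterior probability of class 1 is about 0.966, and class 1 would still be chosen for any prior above roughly 0.095.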

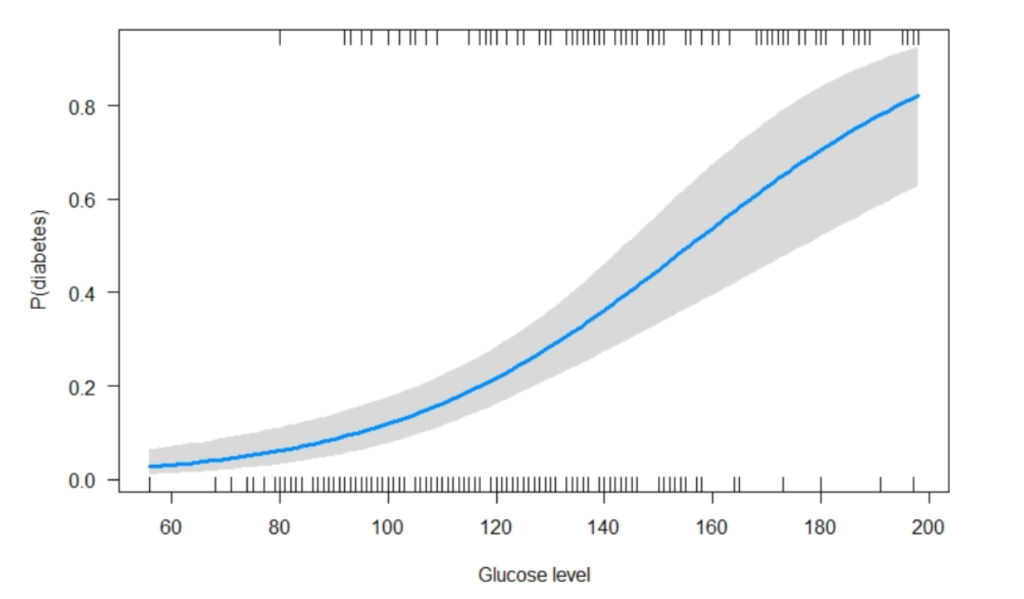

Which of the following are TRUE about the estimated coefficient vector $\hat{\beta}$ from logistic regression?

a. $P(Y=1 \mid X=x)$ is linear in $\hat{\beta}$.

b. the log-odds of $Y$ is linear in $\hat{\beta}$.

c. A 1-unit change in $x_{1}$ results in a $\hat{\beta}_{1}$ unit change in the $\log$ odds of $Y$.

d. The change in $P(Y=1 \mid X=x)$ for a 1-unit change in $x_{1}$ is given by the following formula

$$

\frac{1}{1+e^{-\hat{\beta}_{0}-\hat{\beta}_{1} x_{1}-\sum_{j=2}^{p} x_{j} \hat{\beta}_{j}}}-\frac{1}{1+e^{-\hat{\beta}_{0}-\hat{\beta}_{1}\left(x_{1}+1\right)-\sum_{j=2}^{p} x_{j} \hat{\beta}_{j}}}

$$

a. FALSE.

b. TRUE.

c. FALSE (True if we add “holding all other $x_{j}$, $j \neq 1$, constant”)

d. TRUE (see page 137 of the text, and note that this change depends on the value of $x_{1}$ as well as all the other variables. This behavior is challenging to interpret.)
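To see why the change in probability is hard to interpret, the sketch below evaluates a logistic model with two predictors at several values of $x_{1}$. The coefficients are hypothetical and chosen only for illustration. With the other predictor held fixed, the change in log-odds is always $\hat{\beta}_{1}$, while the size of the change in probability depends on where you start.

```python
import math

# Hypothetical fitted coefficients, for illustration only.
B0, B1, B2 = -1.0, 0.8, 0.5

def prob(x1, x2):
    """P(Y=1 | x) under the logistic model."""
    return 1.0 / (1.0 + math.exp(-(B0 + B1 * x1 + B2 * x2)))

def log_odds(p):
    return math.log(p / (1.0 - p))

x2 = 0.0  # hold the other predictor fixed
log_odds_changes = []
prob_changes = []
for x1 in (0.0, 2.0, 5.0):
    p_before, p_after = prob(x1, x2), prob(x1 + 1.0, x2)
    log_odds_changes.append(log_odds(p_after) - log_odds(p_before))
    prob_changes.append(p_after - p_before)
    print(f"x1={x1}: log-odds change={log_odds_changes[-1]:.3f}, "
          f"probability change={prob_changes[-1]:.4f}")
```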
