

12.2 Gibbs sampling

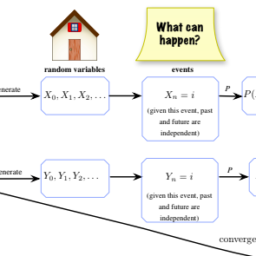

Gibbs sampling is an MCMC algorithm for obtaining approximate draws from a joint distribution by sampling from conditional distributions one at a time. At each stage, one variable is updated (keeping all the other variables fixed) by drawing from the conditional distribution of that variable given all the other variables. This approach is especially useful in problems where these conditional distributions are easy to work with, for example when each one is a familiar distribution that is easy to sample from.

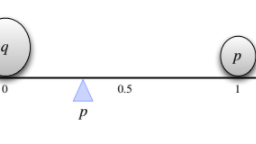

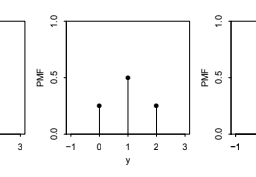

First we will run through how the Gibbs sampler works in the bivariate case, where the desired stationary distribution is the joint PMF of discrete r.v.s $X$ and $Y$. There are several forms of Gibbs samplers, depending on the order in which updates are done. We will introduce two major kinds of Gibbs sampler: systematic scan, in which the updates sweep through the components in a deterministic order, and random scan, in which a randomly chosen component is updated at each stage.

Algorithm 12.2.1 (Systematic scan Gibbs sampler). Let $X$ and $Y$ be discrete r.v.s with joint PMF $p_{X, Y}(x, y)=P(X=x, Y=y)$. We wish to construct a two-dimensional Markov chain $\left(X_{n}, Y_{n}\right)$ whose stationary distribution is $p_{X, Y}$. The systematic scan Gibbs sampler proceeds by updating the $X$-component and the $Y$-component in alternation. If the current state is $\left(X_{n}, Y_{n}\right)=\left(x_{n}, y_{n}\right)$, then we update the $X$-component while holding the $Y$-component fixed, and then update the $Y$-component while holding the $X$-component fixed:

1. Draw $x_{n+1}$ from the conditional distribution of $X$ given $Y=y_{n}$, and set $X_{n+1}=x_{n+1}$.

2. Draw $y_{n+1}$ from the conditional distribution of $Y$ given $X=x_{n+1}$, and set $Y_{n+1}=y_{n+1}$.
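The two steps above can be sketched in Python as follows. This is a minimal illustration, not code from the text, and it rests on several assumptions:

- NumPy is available.
- The joint PMF is stored as a hypothetical array `p_xy`, with `p_xy[x, y]` $= P(X=x, Y=y)$ on the finite support $\{0,\dots,m-1\}\times\{0,\dots,k-1\}$.
- The starting state has positive probability.

The function name `systematic_scan_gibbs` is made up for this sketch. Each conditional PMF is obtained by taking a column or row of the table and renormalizing it to sum to 1.

```python
import numpy as np


def systematic_scan_gibbs(p_xy, n_steps, x0=0, y0=0, seed=None):
    """Systematic scan Gibbs sampler for a discrete joint PMF (sketch).

    Assumes p_xy[x, y] = P(X = x, Y = y) on {0..m-1} x {0..k-1} and that the
    starting state (x0, y0) has positive probability.
    Returns an (n_steps + 1, 2) integer array whose rows are (X_n, Y_n).
    """
    p_xy = np.asarray(p_xy, dtype=float)
    rng = np.random.default_rng(seed)
    m, k = p_xy.shape
    chain = np.empty((n_steps + 1, 2), dtype=int)
    x, y = x0, y0
    chain[0] = (x, y)
    for n in range(n_steps):
        # Step 1: draw x_{n+1} from the conditional PMF of X given Y = y_n,
        # i.e. column y of the table, renormalized to sum to 1.
        col = p_xy[:, y]
        x = rng.choice(m, p=col / col.sum())
        # Step 2: draw y_{n+1} from the conditional PMF of Y given X = x_{n+1},
        # i.e. row x of the table, renormalized to sum to 1.
        row = p_xy[x, :]
        y = rng.choice(k, p=row / row.sum())
        chain[n + 1] = (x, y)
    return chain
```

For example, run this with `p = np.array([[0.1, 0.2], [0.3, 0.4]])` for many steps. The fraction of time the chain spends in each state $(x, y)$ should then be close to `p[x, y]`.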

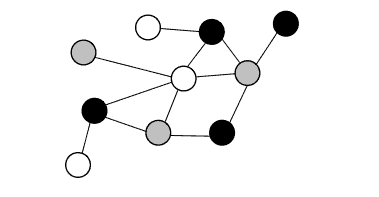

Repeating steps 1 and 2 over and over, the stationary distribution of the chain $\left(X_{0}, Y_{0}\right),\left(X_{1}, Y_{1}\right),\left(X_{2}, Y_{2}\right), \ldots$ is $p_{X, Y}$.

Algorithm 12.2.2 (Random scan Gibbs sampler). As above, let $X$ and $Y$ be discrete r.v.s with joint PMF $p_{X, Y}(x, y)$. We wish to construct a two-dimensional Markov chain $\left(X_{n}, Y_{n}\right)$ whose stationary distribution is $p_{X, Y}$. Each move of the random scan Gibbs sampler picks a uniformly random component and updates it according to the conditional distribution given the other component:

1. Choose which component to update, with equal probabilities.

2. If the $X$-component was chosen, draw a value $x_{n+1}$ from the conditional distribution of $X$ given $Y=y_{n}$, and set $X_{n+1}=x_{n+1}, Y_{n+1}=y_{n}$. Similarly, if the $Y$-component was chosen, draw a value $y_{n+1}$ from the conditional distribution of $Y$ given $X=x_{n}$, and set $X_{n+1}=x_{n}, Y_{n+1}=y_{n+1}$.

Repeating steps 1 and 2 over and over, the stationary distribution of the chain $\left(X_{0}, Y_{0}\right),\left(X_{1}, Y_{1}\right),\left(X_{2}, Y_{2}\right), \ldots$ is $p_{X, Y}$.

Gibbs sampling generalizes naturally to higher dimensions. If we want to sample from a $d$-dimensional joint distribution, the Markov chain we construct is a sequence of $d$-dimensional random vectors. At each stage, we choose one component of the vector to update. We then draw from the conditional distribution of that component given the most recent values of the other components. We can either cycle through the components of the vector in a systematic order, or choose a random component to update each time.
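Here is a sketch of the random scan version that also covers the $d$-dimensional case; Algorithm 12.2.2 is the special case $d = 2$. Again this is illustrative rather than taken from the text. It assumes:

- NumPy is available.
- The joint PMF is stored as a hypothetical $d$-dimensional array `p`, with `p[i_1, ..., i_d]` $= P(X_1=i_1,\dots,X_d=i_d)$.
- The starting state `start` has positive probability.

Fixing every coordinate except the $j$th picks out a one-dimensional slice of the table. After renormalization, that slice is the conditional PMF of component $j$ given the others. The name `random_scan_gibbs` is made up for this sketch.

```python
import numpy as np


def random_scan_gibbs(p, n_steps, start, seed=None):
    """Random scan Gibbs sampler for a discrete joint PMF on a finite grid (sketch).

    Assumes p is a d-dimensional array with p[i_1, ..., i_d] = P(X_1 = i_1, ..., X_d = i_d)
    and that `start` (a length-d sequence of indices) has positive probability.
    Returns an (n_steps + 1, d) integer array whose rows are the states of the chain.
    """
    p = np.asarray(p, dtype=float)
    rng = np.random.default_rng(seed)
    d = p.ndim
    state = list(start)
    chain = np.empty((n_steps + 1, d), dtype=int)
    chain[0] = state
    for n in range(n_steps):
        # Step 1: choose which component to update, uniformly at random.
        j = rng.integers(d)
        # Hold every other component at its current value; the remaining 1-D
        # slice is the unnormalized conditional PMF of component j.
        idx = tuple(slice(None) if i == j else state[i] for i in range(d))
        cond = p[idx]
        # Step 2: draw a new value for component j; the others stay fixed.
        state[j] = rng.choice(p.shape[j], p=cond / cond.sum())
        chain[n + 1] = state
    return chain
```

Replacing the random choice of `j` with `j = n % d` would instead give a scan that cycles through the components in a fixed order.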

The Gibbs sampler is less flexible than the Metropolis-Hastings algorithm in the sense that we don't get to choose a proposal distribution. For the same reason it is also simpler, since we don't have to choose one. The two algorithms also differ in flavor: Gibbs emphasizes conditional distributions, while Metropolis-Hastings emphasizes acceptance probabilities. But the algorithms are closely connected, as we show below.

Theorem 12.2.3 (Random scan Gibbs as Metropolis-Hastings). The random scan Gibbs sampler is a special case of the Metropolis-Hastings algorithm in which the proposal is always accepted. In particular, it follows that the stationary distribution of the random scan Gibbs sampler is the desired joint distribution.

Proof. We will show this in two dimensions, but the proof is similar in any dimension. Let $X$ and $Y$ be discrete r.v.s whose joint PMF is the desired stationary distribution. Let’s work out what the Metropolis-Hastings algorithm says to do, using the following proposal distribution: from $(x, y)$, randomly update one coordinate by running one move of the random scan Gibbs sampler.

To simplify notation, write

$P(X=x, Y=y)=p(x, y), \quad P(Y=y \mid X=x)=p(y \mid x), \quad P(X=x \mid Y=y)=p(x \mid y).$

More formally, we should write $p_{Y \mid X}(y \mid x)$ instead of $p(y \mid x)$, to avoid issues like wondering what $p(5 \mid 3)$ means. But writing $p(y \mid x)$ is more compact and does not create ambiguity in this proof.
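The claim of Theorem 12.2.3 can be checked numerically in this notation. The sketch below is not from the text, and it assumes:

- NumPy is available.
- The joint PMF is stored as a hypothetical array `p_xy` with all entries positive.
- The acceptance probability is the standard Metropolis-Hastings one, $\min\left(1, \frac{p(x',y)\,q((x',y)\to(x,y))}{p(x,y)\,q((x,y)\to(x',y))}\right)$, where $q$ denotes the proposal probability.

Under the random scan Gibbs proposal, a move from $(x, y)$ to $(x', y)$ with $x' \neq x$ requires choosing the $X$-component and then drawing $x'$. Its probability is therefore $q = \frac{1}{2}\, p(x' \mid y)$. The helper name `gibbs_mh_acceptance` is made up for this sketch.

```python
import numpy as np


def gibbs_mh_acceptance(p_xy, x, y, x_new):
    """MH acceptance probability for the random scan Gibbs proposal
    (x, y) -> (x_new, y), assuming x_new != x and p_xy[x, y] = p(x, y) > 0.
    """
    p_xy = np.asarray(p_xy, dtype=float)
    # Conditional PMF p(. | y): column y of the table, renormalized.
    cond_x_given_y = p_xy[:, y] / p_xy[:, y].sum()
    # Pick the X-component (prob 1/2), then draw the new x from p(. | y).
    q_forward = 0.5 * cond_x_given_y[x_new]   # q((x, y) -> (x_new, y))
    q_backward = 0.5 * cond_x_given_y[x]      # q((x_new, y) -> (x, y))
    ratio = (p_xy[x_new, y] * q_backward) / (p_xy[x, y] * q_forward)
    return min(1.0, ratio)
```

For any positive table, every such move should give an acceptance probability of 1, up to floating-point rounding.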

Let’s compute the Metropolis-Hastings acceptance probability for going from $(x, y)$


