统计代写| Metropolis-Hastings stat代写

统计代考

12.1 Metropolis-Hastings

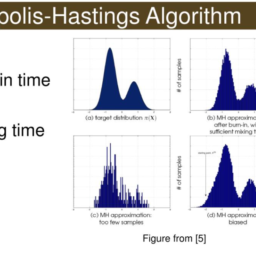

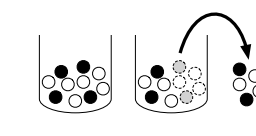

The Metropolis-Hastings algorithm is a general recipe that lets us start with any irreducible Markov chain on the state space of interest and then modify it into a new Markov chain that has the desired stationary distribution. This modification consists of introducing some selectiveness in the original chain: moves are proposed according to the original chain, but the proposal may or may not be accepted. For example, suppose the original chain is at a state called “Boston” and is about to and go to San Francisco, or we turn down the proposal and remain in Boston as

Markov chain Monte Carlo

537 the next step. With a careful choice of the probability of accepting the proposal, this simple modification guarantees that the new chain has the desired stationary distribution.

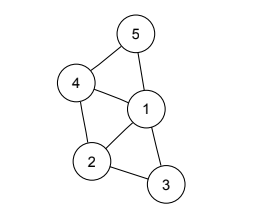

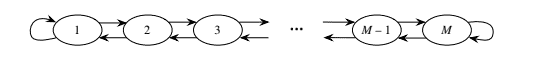

Algorithm 12.1.1 (Metropolis-Hastings). Let s=(s1,…,sM) be a desired stationary distribution on state space 1,…,M. Assume that si>0 for all i (if not, just delete any states i with si=0 from the state space). Suppose that P=(pij) is the transition matrix for a Markov chain on state space 1,…,M. Intuitively, P is a Markov chain that we know how to run but that doesn’t have the desired stationary distribution.

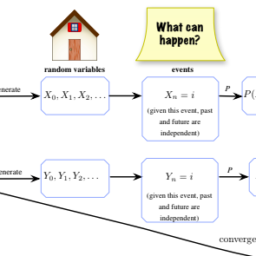

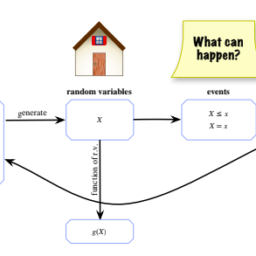

Our goal is to modify P to construct a Markov chain X0,X1,… with stationary distribution s. We will give a Metropolis-Hastings algorithm for this. Start at any state X0 (chosen randomly or deterministically), and suppose that the new chain is currently at Xn. To make one move of the new chain, do the following.

- If Xn=i, propose a new state j using the transition probabilities in the i th row of the original transition matrix P.

- Compute the acceptance probability

aij=min - Flip a coin that lands Heads with probability a_{i j}.

- If the coin lands Heads, accept the proposal (i.e., go to j ), setting X_{n+1}=j. Otherwise, reject the proposal (i.e., stay at i ), setting X_{n+1}=i.

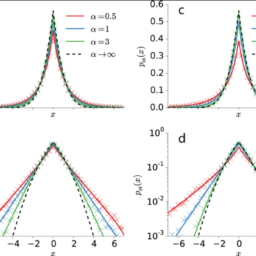

That is, the Metropolis-Hastings chain uses the original transition probabilities p_{i j} to propose where to go next, then accepts the proposal with probability a_{i j}, staying in its current state in the event of a rejection. An especially nice aspect of this algorithm is that the normalizing constant for \mathrm{s} does not need to be known, since it cancels out in s_{j} / s_{i} anyway. For example, in some problems we may want the sta- tionary distribution to be uniform over all states (i.e., s=(1 / M, 1 / M, \ldots, 1 / M)), but the number of states M is large and unknown, and it would be a very hard counting problem to find M. Fortunately, s_{j} / s_{i}=1 regardless of M, so we can simply say \mathbf{s} \propto(1,1, \ldots, 1), and we can calculate a_{i j} without having to know M.

The p_{i j} in the denominator in a_{i j} will never be 0 when the algorithm is run, since if p_{i j}=0 then the original chain will never propose going from i to j. Also, if p_{i i}>0 it is possible that the proposal j will equal the current state i; in that case, the chain stays at i regardless of whether the proposal is accepted. (Rejecting the proposal of “yes, I will stay in my room, but not because you told me to!”)

We will now show that the Metropolis-Hastings chain is reversible with stationary distribution \mathrm{s}.

538

Proof. Let Q be the transition matrix of the Metropolis-Hastings chain. We just need to check the reversibility condition s_{i} q_{i j}=s_{j} q_{j i} for all i and j. This is clear for i=j, so assume i \neq j. If q_{i j}>0, then p_{i j}>0 (the chain can’t get from i to j if it can’t even propose going from i to j ) and p_{j i}>0 (otherwise the acceptance probability would be 0 ). Conversely, if p_{i j}>0 and p_{j i}>0, then q_{j i}>0. So q_{i j} and q_{j i} are either both zero or both nonzero. We can assume they are both nonzero. Then

统计代考

12.1 大都会-黑斯廷斯

Metropolis-Hastings 算法是一个通用配方,它让我们从感兴趣的状态空间上的任何不可约马尔可夫链开始,然后将其修改为具有所需平稳分布的新马尔可夫链。这种修改包括在原始链中引入一些选择性:根据原始链提出移动,但该提议可能会或可能不会被接受。例如,假设原链在一个名为“波士顿”的州,即将前往旧金山,或者我们拒绝该提议并留在波士顿

马尔可夫链蒙特卡洛

537下一步。通过仔细选择接受提议的概率,这个简单的修改保证了新链具有所需的平稳分布。

算法 12.1.1(Metropolis-Hastings)。令 \mathrm{s}=\left(s_{1}, \ldots, s_{M}\right) 是状态空间 {1, \ldots, M} 上的期望平稳分布。 假设所有 i 的 s_{i}>0 (如果没有,只需从状态空间中删除 s_{i}=0 的任何状态 i)。假设 P=\left(p_{ij}\right) 是状态空间 {1, \ldots, M} 上马尔可夫链的转移矩阵。 直观地说,P 是一个马尔可夫链我们知道如何运行,但它没有所需的平稳分布。

我们的目标是修改 P 以构造一个具有平稳分布 s 的马尔可夫链 X_{0}, X_{1}, \ldots。我们将为此给出一个 Metropolis-Hastings 算法。从任何状态 X_{0}(随机或确定性选择)开始,并假设新链当前位于 X_{n}。要移动新链,请执行以下操作。

- 如果X_{n}=i,使用原始转移矩阵P的第i行的转移概率提出一个新的状态j。

2.计算接受概率

a_{i j}=\min \left(\frac{s_{j} p_{j i}}{s_{i} p_{i j}}, 1\right) 。 - 掷硬币,正面朝上的概率为 a_{i j}。

- 如果硬币正面朝上,接受提议(即转到 j ),设置 X_{n+1}=j。否则,拒绝该提议(即,保持在 i ),设置 X_{n+1}=i。

也就是说,Metropolis-Hastings 链使用原始转移概率 p_{ij} 来提议下一步要去哪里,然后以概率 a_{ij} 接受该提议,在被拒绝的情况下保持其当前状态.该算法的一个特别好的方面是不需要知道 \mathrm{s} 的归一化常数,因为它无论如何都会在 s_{j} / s_{i} 中抵消。例如,在某些问题中,我们可能希望平稳分布在所有状态上是均匀的(即 s=(1 / M, 1 / M, \ldots, 1 / M)),但是状态的数量M 很大且未知,要找到 M 将是一个非常困难的计数问题。幸运的是,s_{j} / s_{i}=1 与 M 无关,所以我们可以简单地说 \mathbf{s} \propto(1,1, \ldots, 1),我们可以计算a_{ij} 而不必知道 M。

当算法运行时,a_{ij} 中分母中的 p_{ij} 永远不会为 0,因为如果 p_{ij}=0 那么原始链将永远不会提议从 i 到j。此外,如果 p_{i i}>0,则提案 j 可能等于当前状态 i;在这种情况下,无论提案是否被接受,链都会保持在 i。 (拒绝“是的,我会留在我的房间,但不是因为你告诉我!”的提议)

我们现在将证明 Metropolis-Hastings 链在平稳分布 \mathrm{s} 下是可逆的。

538

证明。令 Q 为 Metropolis-Hastings 链的转移矩阵。我们只需要检查所有i 和j 的可逆性条件s_{i} q_{i j}=s_{j} q_{j i}。这对于 i=j 是很清楚的,所以假设 i \neq j。如果 q_{ij}>0,则 p_{ij}>0(如果链甚至不能提议从 i 到 j,链就不能从 i 到 j ) 和 p_{ji}>0 (否则接受概率将为 0 )。反之,如果p_{i j}>0且p_{j i}>0,则q_{j i}>0。所以 q_{i j} 和 q_{j i} 要么都是零,要么都是非零。我们可以假设它们都是非零的。然后

R语言代写

统计代写|SAMPLE SPACES AND PEBBLE WORLD stat 代写 请认准UprivateTA™. UprivateTA™为您的留学生涯保驾护航。