MY-ASSIGNMENTEXPERT™ offers ghostwriting, exam-taking, and tutoring services for the catalog.drexel.edu ECET602 information theory course!

ECET602 Course Introduction

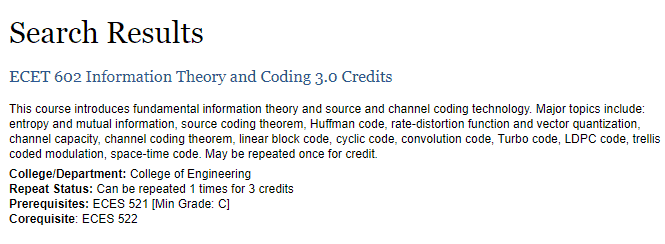

This course introduces fundamental information theory and source and channel coding technology. Major topics include: entropy and mutual information, source coding theorem, Huffman code, rate-distortion function and vector quantization, channel capacity, channel coding theorem, linear block code, cyclic code, convolution code, Turbo code, LDPC code, trellis coded modulation, space-time code. May be repeated once for credit.

Prerequisites

College/Department: College of Engineering

Repeat Status: Can be repeated 1 times for 3 credits

Prerequisites: ECES 521 Min Grade: C

Corequisite: ECES 522

ECET602 information theory course HELP (EXAM HELP, ONLINE TUTOR)

Problem 1 (Alternative definition of unique decodability) An $f: \mathcal{X} \rightarrow \mathcal{Y}^*$ code is called uniquely decodable if for any messages $\mathbf{u}=u_1 \cdots u_k$ and $\mathbf{v}=v_1 \cdots v_k$ (where $u_1, v_1, \ldots, u_k, v_k \in \mathcal{X}$) with

$$

f\left(u_1\right) f\left(u_2\right) \cdots f\left(u_k\right)=f\left(v_1\right) f\left(v_2\right) \cdots f\left(v_k\right)

$$

we have $u_i=v_i$ for all $i$. That is, as opposed to the definition given in class, we require only that any two distinct messages of the same length have different encodings. Prove that the two definitions are equivalent.

To prove the equivalence of the two definitions, we need to show that a code is uniquely decodable according to one definition if and only if it is uniquely decodable according to the other definition.

First, let’s assume that a code is uniquely decodable according to the definition given in class, i.e., for any pair of distinct messages $\mathbf{u}$ and $\mathbf{v}$, their encoded strings are distinct. We will show that the code is also uniquely decodable according to the alternative definition.

Suppose that there are two messages $\mathbf{u}=u_1\cdots u_k$ and $\mathbf{v}=v_1\cdots v_k$ with $f(\mathbf{u})=f(\mathbf{v})$, where $f(\mathbf{x})$ denotes the encoding of message $\mathbf{x}$ according to the code $f$. We need to show that $\mathbf{u}=\mathbf{v}$.

Since $f(\mathbf{u})=f(\mathbf{v})$, we have $f(u_1)\cdots f(u_k)=f(v_1)\cdots f(v_k)$. But since the code is uniquely decodable according to the original definition, we know that $u_1\cdots u_k$ and $v_1\cdots v_k$ must be the same message (otherwise their encoded strings would be distinct). Therefore, we have $\mathbf{u}=\mathbf{v}$, as desired.

Next, let's assume that a code is uniquely decodable according to the alternative definition, i.e., any two distinct messages of the same length have distinct encodings. We will show that the code is also uniquely decodable according to the original definition. Here we assume, as is standard, that every codeword $f(x)$ is a nonempty string.

Suppose, for contradiction, that there are two distinct messages $\mathbf{u}=u_1\cdots u_k$ and $\mathbf{v}=v_1\cdots v_m$, possibly of different lengths, with $f(\mathbf{u})=f(\mathbf{v})$. If $k=m$, this contradicts the alternative definition directly, so we can assume without loss of generality that $k<m$.

Consider the concatenated messages $\mathbf{u}\mathbf{v}$ and $\mathbf{v}\mathbf{u}$, which both have length $k+m$. Since $f(\mathbf{u})=f(\mathbf{v})$, we have:

$$

f(\mathbf{u}\mathbf{v})=f(\mathbf{u}) f(\mathbf{v})=f(\mathbf{v}) f(\mathbf{u})=f(\mathbf{v}\mathbf{u})

$$

By the alternative definition, $\mathbf{u}\mathbf{v}$ and $\mathbf{v}\mathbf{u}$ must be the same message. Comparing their first $k$ symbols gives $u_i=v_i$ for $i=1,\ldots,k$, so $\mathbf{u}$ is a prefix of $\mathbf{v}$. Write $\mathbf{v}=\mathbf{u}\mathbf{v}'$ with $\mathbf{v}'=v_{k+1}\cdots v_m$, which contains at least one symbol. Then:

$$

f(\mathbf{u})=f(\mathbf{v})=f(\mathbf{u}) f\left(\mathbf{v}'\right)

$$

This forces $f(\mathbf{v}')$ to be the empty string, which is impossible because $\mathbf{v}'$ is nonempty and every codeword is nonempty. Hence no two distinct messages have the same encoding, and the code is uniquely decodable according to the original definition.
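As a sanity check on the statement of Problem 1, the two definitions can be compared by brute force on small codes. The Python sketch below is illustrative only: the function name `find_collisions`, the two example codes, and the length bound are assumptions made here and are not part of the problem.

```python
from itertools import product


def encode(code, message):
    """Concatenate the codewords of the symbols in `message`."""
    return "".join(code[symbol] for symbol in message)


def find_collisions(code, max_len, same_length_only):
    """Return pairs of distinct messages (length <= max_len) with equal encodings.

    If same_length_only is True, only messages of equal length are compared
    (the alternative definition); otherwise all lengths are compared together.
    """
    collisions = []
    seen = {}
    for k in range(1, max_len + 1):
        if same_length_only:
            seen = {}  # forget the encodings of shorter messages
        for message in product(sorted(code), repeat=k):
            encoded = encode(code, message)
            if encoded in seen:
                collisions.append((seen[encoded], message))
            else:
                seen[encoded] = message
    return collisions


# Hypothetical example codes over the alphabet {a, b, c}.
ambiguous = {"a": "0", "b": "01", "c": "10"}    # f(a)f(c) = f(b)f(a) = 010
suffix_free = {"a": "0", "b": "01", "c": "11"}  # no codeword is a suffix of another

for name, code in [("ambiguous", ambiguous), ("suffix_free", suffix_free)]:
    same = find_collisions(code, 4, same_length_only=True)
    mixed = find_collisions(code, 4, same_length_only=False)
    print(name, "same-length:", len(same), "any-length:", len(mixed))
```

The search is bounded by `max_len`, so it can only exhibit collisions and cannot prove unique decodability. It should also be read with the caveat that a collision between messages of different lengths may only appear as a same-length collision at a larger length bound.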

Problem 2 (Average length of the optimal code) Show that the expected length of the codewords of the optimal binary code may be arbitrarily close to $H(X)+1$. More precisely, for any small $\epsilon>0$, construct a distribution on the source alphabet $\mathcal{X}$ such that the average codeword length of the optimal binary code satisfies

$$

\mathbf{E}|f(X)|>H(X)+1-\epsilon

$$

Take a two-symbol source alphabet $\mathcal{X}=\{x_1, x_2\}$ with probabilities $P(x_1)=1-\delta$ and $P(x_2)=\delta$, where $0<\delta \leq 1/2$ will be chosen later.

Any binary code for $\mathcal{X}$ must assign each symbol a codeword of length at least $1$ (assuming nonempty codewords), and the code $f(x_1)=0$, $f(x_2)=1$ achieves length exactly $1$ for both symbols. Hence the optimal binary code satisfies

$$

\mathbf{E}|f(X)|=(1-\delta) \cdot 1+\delta \cdot 1=1

$$

regardless of $\delta$.

The entropy of the source is the binary entropy function

$$

H(X)=-\delta \log _2 \delta-(1-\delta) \log _2(1-\delta)

$$

which tends to $0$ as $\delta \rightarrow 0$. Therefore, for any $\epsilon>0$ we can choose $\delta$ small enough that $H(X)<\epsilon$, and then:

$$

\mathbf{E}|f(X)|=1>H(X)+1-\epsilon

$$

Therefore, we have constructed a distribution on $\mathcal{X}$ such that the average codeword length of the optimal binary code is arbitrarily close to $H(X) + 1$.
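One concrete way to watch the gap $\mathbf{E}|f(X)|-H(X)$ approach $1$ is a two-symbol source with probabilities $(1-\delta, \delta)$ and small $\delta$. The Python sketch below computes the expected length of a binary Huffman code, which is an optimal code, and compares it with the entropy. The function names and the chosen values of $\delta$ are assumptions made for illustration.

```python
import heapq
from math import log2


def entropy(probs):
    """Shannon entropy in bits of a probability vector."""
    return -sum(p * log2(p) for p in probs if p > 0)


def huffman_expected_length(probs):
    """Expected codeword length of a binary Huffman code (needs >= 2 symbols).

    Each merge of the two least likely nodes adds one bit to every symbol
    beneath the merged node, so the expected length is the sum of the
    probabilities of all merged nodes.
    """
    heap = list(probs)
    heapq.heapify(heap)
    total = 0.0
    while len(heap) > 1:
        a = heapq.heappop(heap)
        b = heapq.heappop(heap)
        total += a + b
        heapq.heappush(heap, a + b)
    return total


# Illustrative values of delta; the gap should move toward 1 as delta shrinks.
for delta in (0.1, 0.01, 0.001):
    probs = [1 - delta, delta]
    gap = huffman_expected_length(probs) - entropy(probs)
    print(f"delta={delta}: E|f(X)| - H(X) = {gap:.4f}")
```

The same functions accept longer probability vectors, which makes it easy to try other candidate distributions.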
