MY-ASSIGNMENTEXPERT™ can provide assignment-writing, exam-taking, and tutoring services for the stat.osu.edu STAT6570 Bayesian Analysis course!

STAT6570 Course Description

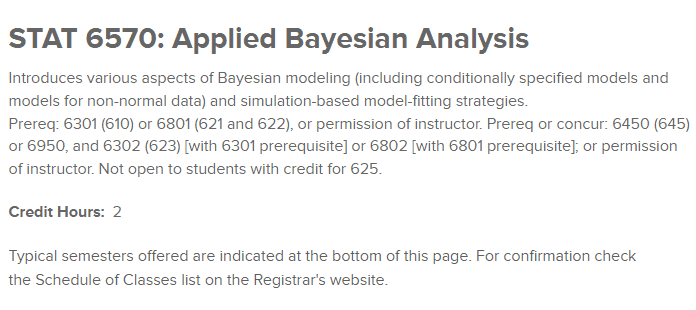

Introduces various aspects of Bayesian modeling including conditionally specified models and models for non-normal data and simulation-based model-fitting strategies.

Prereq: 6301 (610) or 6801 (621 and 622), or permission of instructor. Prereq or concur: 6450 (645) or 6950, and 6302 (623) with 6301 prerequisite or 6802 with 6801 prerequisite; or permission of instructor. Not open to students with credit for 625.

Credit Hours

2


Course Goals

This course aims to provide a practical introduction to Bayesian data analysis. Students will be exposed to a variety of Bayesian models, including the Bayesian linear model for normal and non-normal data. Bayesian hierarchical modeling will be discussed as a strategy for modeling complex processes and as a means of combining several sources of data. Simulation-based methods for model fitting will be introduced, and students will learn to use the JAGS software in addition to programming basic MCMC algorithms in R.
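To illustrate what "programming a basic MCMC algorithm" can look like, here is a minimal random-walk Metropolis sketch. It is written in Python, like the worked example later on this page. The model is an assumption chosen for illustration, not course material: the mean μ of normal data with known standard deviation σ and a normal prior on μ. The data values and the function names (`log_posterior`, `metropolis`, `conjugate_posterior`) are hypothetical. Because this model is conjugate, the exact posterior is known and serves as a check on the sampler.

```python
import math
import random


def log_posterior(mu, data, sigma, prior_mean, prior_sd):
    """Unnormalized log posterior of a normal mean (known sigma, normal prior)."""
    log_prior = -0.5 * ((mu - prior_mean) / prior_sd) ** 2
    log_lik = -0.5 * sum(((y - mu) / sigma) ** 2 for y in data)
    return log_prior + log_lik


def metropolis(data, sigma, prior_mean, prior_sd,
               n_iter=20000, step=0.5, init=0.0, seed=1):
    """Random-walk Metropolis sampler; returns every sampled value of mu."""
    rng = random.Random(seed)
    mu = init
    current = log_posterior(mu, data, sigma, prior_mean, prior_sd)
    draws = []
    for _ in range(n_iter):
        proposal = mu + rng.gauss(0.0, step)  # symmetric normal proposal
        candidate = log_posterior(proposal, data, sigma, prior_mean, prior_sd)
        # Accept with probability min(1, exp(candidate - current));
        # 1 - random() lies in (0, 1], so the log is always defined.
        if math.log(1.0 - rng.random()) < candidate - current:
            mu, current = proposal, candidate
        draws.append(mu)  # a rejected proposal repeats the current value
    return draws


def conjugate_posterior(data, sigma, prior_mean, prior_sd):
    """Exact posterior mean and sd for the same model, used as a check."""
    precision = 1.0 / prior_sd ** 2 + len(data) / sigma ** 2
    mean = (prior_mean / prior_sd ** 2 + sum(data) / sigma ** 2) / precision
    return mean, math.sqrt(1.0 / precision)


data = [4.8, 5.1, 5.6, 4.9, 5.3, 5.0, 5.4, 4.7]  # hypothetical observations
draws = metropolis(data, sigma=0.5, prior_mean=0.0, prior_sd=10.0)
kept = draws[2000:]  # discard burn-in from the deliberately poor start at 0
print(sum(kept) / len(kept), conjugate_posterior(data, 0.5, 0.0, 10.0))
```

The sample mean of the retained draws should land close to the exact posterior mean. Replacing the normal likelihood in `log_posterior` with a non-normal one is the only change needed to reuse the sampler for models without a closed-form posterior.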

STAT6570 Bayesian Analysis HELP (EXAM HELP, ONLINE TUTOR)

The tweet data is provided as “trump-tibble.csv”. After you read the data into R, you will see that there are only two columns: document and text. The document column contains the date and time of each tweet, and the text column contains the tweet's text. Before you begin, cast both columns to character rather than factor (in R versions before 4.0.0, `read.csv()` converts strings to factors by default). You can do this with the following code:

```r
# cast factors to characters
trump_tibble$document <- as.character(trump_tibble$document)
trump_tibble$text <- as.character(trump_tibble$text)
```

The following libraries will be of use for this problem:

```r
# load libraries
library(topicmodels)                  # topic modeling functions
library(stringr)                      # common string functions
library(tidytext)                     # tidy text analysis
suppressMessages(library(tidyverse))  # data manipulation and visualization
# tidyverse startup messages cause an R Markdown compile error, so suppress them

## Source topicmodels2LDAvis & optimal_k functions
invisible(lapply(
  file.path(
    "https://raw.githubusercontent.com/trinker/topicmodels_learning/master/functions",
    c("topicmodels2LDAvis.R", "optimal_k.R")
  ),
  devtools::source_url
))
```

Use the `unnest_tokens()` function to extract words from the tweet text

The `unnest_tokens()` function from the tidytext package (loaded above) splits a text column into tokens and returns one token per row. For this data, `trump_tibble %>% unnest_tokens(word, text)` produces a table with a `document` column and a `word` column. By default, the original `text` column is dropped.

“Unnesting” in data analysis refers to breaking a nested data structure down into individual elements. In text analysis, unnesting tokens means splitting each document's text into individual words (tokens). Each token goes into its own row, alongside the identifier of the document it came from.

The `unnest_tokens()` function from the R package tidytext does this by tokenizing a text column. Tokenization is the process of breaking text into smaller units, such as individual words or phrases, which can then be analyzed and processed further.

For example, take the tweet “Just had a delicious cup of coffee ☕️ #coffeeholic”. With its default word tokenizer, `unnest_tokens()` lowercases the text and strips punctuation, producing the words “just”, “had”, “a”, “delicious”, “cup”, “of”, “coffee”, and “coffeeholic” (the “#” is removed).
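The same idea can be sketched in Python. The sketch assumes a simplified word tokenizer: lowercase the text, keep runs of word characters, allow internal apostrophes, and drop punctuation and symbols. The real tidytext tokenizer uses more elaborate word-boundary rules. `split_words` and `unnest_words` are hypothetical helper names, and the document ids in `rows` are toy values.

```python
import re

# Simplified word pattern (an assumption, not tidytext's tokenizer): runs of
# Unicode word characters, optionally joined by apostrophes as in "don't".
# Characters outside the pattern, such as "#" or emoji, are simply skipped.
WORD_RE = re.compile(r"\w+(?:'\w+)*")


def split_words(text):
    """Split one string into lowercase word tokens."""
    return WORD_RE.findall(text.lower())


def unnest_words(rows):
    """Turn (document, text) rows into one (document, word) row per token,
    carrying each document id along with its words."""
    return [(document, word)
            for document, text in rows
            for word in split_words(text)]


tweet = "Just had a delicious cup of coffee ☕️ #coffeeholic"
print(split_words(tweet))
# ['just', 'had', 'a', 'delicious', 'cup', 'of', 'coffee', 'coffeeholic']

rows = [("doc1", "This is the first document."), ("doc2", "Don't panic!")]
for row in unnest_words(rows):
    print(row)
```

Keeping the document id on every token row is what makes the later per-document word counts possible.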

Once the text has been tokenized, you can perform various text analysis tasks such as sentiment analysis, topic modeling, or keyword extraction.


Create a dataframe consisting of the document-word counts

To create a dataframe consisting of the document-word counts, you will need to perform the following steps:

- Tokenize the text data: break the text into individual words. In R this is what `unnest_tokens()` does. In Python, libraries such as NLTK or spaCy provide tokenizers.

- Count the word occurrences: count how often each word appears in each document, for example with Python's `collections.Counter`.

- Convert the count data into a dataframe: create a pandas dataframe with documents as the rows, words as the columns, and the counts as the values.

Here is example Python code that builds such a dataframe of document-word counts:

```python
import pandas as pd
from collections import Counter
from nltk.tokenize import word_tokenize

# word_tokenize needs NLTK's Punkt tokenizer data; if it is missing, run
# nltk.download("punkt") once (newer NLTK releases use "punkt_tab").

# Define the text data
doc1 = "This is the first document."
doc2 = "This is the second document."
doc3 = "This is the third document."

# Tokenize the text data (lowercased)
tokenized_docs = [word_tokenize(doc.lower()) for doc in [doc1, doc2, doc3]]

# Count the word occurrences in each document
word_counts = [Counter(doc) for doc in tokenized_docs]

# Convert the count data into a dataframe: one row per document, one column per token
df = pd.DataFrame.from_records(word_counts)
df.index = ["doc1", "doc2", "doc3"]
df = df.fillna(0).astype(int)  # tokens absent from a document get a count of 0
print(df)
```
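The wide table above has one column per word, so for a large tweet corpus most of its cells are zero. A long ("tidy") layout is often more convenient: one row per document-word pair together with its count. In R, this is the shape that `count(document, word)` produces after `unnest_tokens()`, and tidytext's `cast_dtm()` can then turn it into the document-term matrix that topicmodels expects. Below is a minimal pure-Python sketch of that long-format count. The function name `count_document_words` and the toy rows are hypothetical.

```python
from collections import Counter


def count_document_words(long_rows):
    """Count (document, word) pairs and return sorted (document, word, n) rows."""
    counts = Counter(long_rows)
    return sorted((document, word, n) for (document, word), n in counts.items())


# Toy input in the one-token-per-row layout that unnest_tokens() produces
long_rows = [
    ("doc1", "this"), ("doc1", "is"), ("doc1", "the"), ("doc1", "first"),
    ("doc1", "document"), ("doc2", "the"), ("doc2", "the"), ("doc2", "end"),
]
for row in count_document_words(long_rows):
    print(row)
```

Only pairs that actually occur are stored, so the long layout never materializes the zero counts that `fillna(0)` fills in for the wide table.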
