MY-ASSIGNMENTEXPERT™可以为您提供torontomu PCS810 Complex Networks复杂网络课程的代写代考和辅导服务!

这是怀雅逊大学 复杂网络课程的代写成功案例。

PCS810课程简介

An introduction to the emerging science of networks, with applications to biology, social science, engineering, and other fields. Students will learn about the field’s origins in graph theory, and the surprising properties of real-world networks such as the small-world effect. They will also learn to analyze the rich structure present in networks through degree correlations, communities, and motifs. Finally, it will discuss how networks shape the spread of large-scale failures like power blackouts and epidemics.

Prerequisites

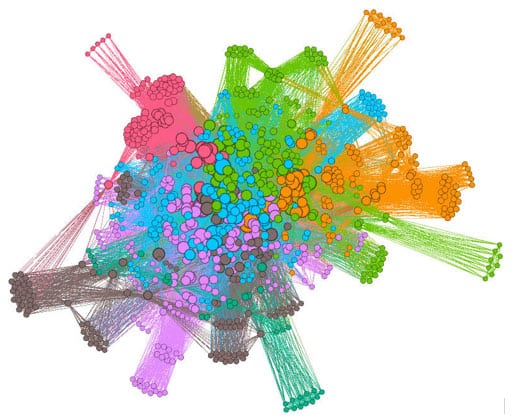

Most social, biological, and technological networks display substantial non-trivial topological features, with patterns of connection between their elements that are neither purely regular nor purely random. Such features include a heavy tail in the degree distribution, a high clustering coefficient, assortativity or disassortativity among vertices, community structure, and hierarchical structure.

PCS810 Complex Networks HELP(EXAM HELP, ONLINE TUTOR)

World Series. The World Series is a seven-game series that terminates as soon as either team wins four games. Let $X$ be the random variable that represents the outcome of a World Series between teams $A$ and B; possible values of $X$ are AAAA, BABABAB, and BBBAAAA. Let $Y$ be the number of games played, which ranges from 4 to 7 . Assuming that $\mathrm{A}$ and $\mathrm{B}$ are equally matched and that the games are independent, calculate $H(X), H(Y), H(Y \mid X)$, and $H(X \mid Y)$.

Solution:

World Series. Two teams play until one of them has won 4 games.

There are 2 (AAAA, BBBB) World Series with 4 games. Each happens with probability $(1 / 2)^4$.

There are $8=2\left(\begin{array}{l}4 \ 3\end{array}\right)$ World Series with 5 games. Each happens with probability $(1 / 2)^5$.

There are $20=2\left(\begin{array}{l}5 \ 3\end{array}\right)$ World Series with 6 games. Each happens with probability $(1 / 2)^6$.

There are $40=2\left(\begin{array}{l}6 \ 3\end{array}\right)$ World Series with 7 games. Each happens with probability $(1 / 2)^7$.

The probability of a 4 game series $(Y=4)$ is $2(1 / 2)^4=1 / 8$.

The probability of a 5 game series $(Y=5)$ is $8(1 / 2)^5=1 / 4$.

The probability of a 6 game series $(Y=6)$ is $20(1 / 2)^6=5 / 16$.

The probability of a 7 game series $(Y=7)$ is $40(1 / 2)^7=5 / 16$.

\begin{aligned}

H(X) & =\sum p(x) \log \frac{1}{p(x)} \

& =2(1 / 16) \log 16+8(1 / 32) \log 32+20(1 / 64) \log 64+40(1 / 128) \log 128 \

& =5.8125

\end{aligned}

$$

\begin{aligned}

H(Y) & =\sum p(y) \log \frac{1}{p(y)} \

& =1 / 8 \log 8+1 / 4 \log 4+5 / 16 \log (16 / 5)+5 / 16 \log (16 / 5) \

& =1.924

\end{aligned}

$$

$\mathrm{Y}$ is a deterministic function of $\mathrm{X}$, so if you know $\mathrm{X}$ there is no randomness in $\mathrm{Y}$. Or, $H(Y \mid X)=0$.

Since $H(X)+H(Y \mid X)=H(X, Y)=H(Y)+H(X \mid Y)$, it is easy to determine $H(X \mid Y)=H(X)+H(Y \mid X)-H(Y)=3.889$

Data processing. Let $X_1 \rightarrow X_2 \rightarrow X_3 \rightarrow \cdots \rightarrow X_n$ form a Markov chain in this order; i.e., let

$$

p\left(x_1, x_2, \ldots, x_n\right)=p\left(x_1\right) p\left(x_2 \mid x_1\right) \cdots p\left(x_n \mid x_{n-1}\right) .

$$

Reduce $I\left(X_1 ; X_2, \ldots, X_n\right)$ to its simplest form.

Solution: Data Processing. By the chain rule for mutual information,

$$

I\left(X_1 ; X_2, \ldots, X_n\right)=I\left(X_1 ; X_2\right)+I\left(X_1 ; X_3 \mid X_2\right)+\cdots+I\left(X_1 ; X_n \mid X_2, \ldots, X_{n-2}\right) \text {. }

$$

By the Markov property, the past and the future are conditionally independent given the present and hence all terms except the first are zero. Therefore

$$

I\left(X_1 ; X_2, \ldots, X_n\right)=I\left(X_1 ; X_2\right) .

$$

Bottleneck. Suppose a (non-stationary) Markov chain starts in one of $n$ states, necks down to $kk$ states. Thus $X_1 \rightarrow X_2 \rightarrow X_3$, $X_1 \in{1,2, \ldots, n}, X_2 \in{1,2, \ldots, k}, X_3 \in{1,2, \ldots, m}$.

(a) Show that the dependence of $X_1$ and $X_3$ is limited by the bottleneck by proving that $I\left(X_1 ; X_3\right) \leq \log k$.

(b) Evaluate $I\left(X_1 ; X_3\right)$ for $k=1$, and conclude that no dependence can survive such a bottleneck.

Solution:

Bottleneck.

(a) From the data processing inequality, and the fact that entropy is maximum for a uniform distribution, we get

$$

\begin{aligned}

I\left(X_1 ; X_3\right) & \leq I\left(X_1 ; X_2\right) \

& =H\left(X_2\right)-H\left(X_2 \mid X_1\right) \

& \leq H\left(X_2\right) \

& \leq \log k .

\end{aligned}

$$

Thus, the dependence between $X_1$ and $X_3$ is limited by the size of the bottleneck. That is $I\left(X_1 ; X_3\right) \leq \log k$.

(b) For $k=1, I\left(X_1 ; X_3\right) \leq \log 1=0$ and since $I\left(X_1, X_3\right) \geq 0, I\left(X_1, X_3\right)=0$. Thus, for $k=1, X_1$ and $X_3$ are independent.

Recall that,

$$

-\sum_{i=0}^{\infty} p_i \log p_i \leq-\sum_{i=0}^{\infty} p_i \log q_i .

$$

Let $q_i=\alpha(\beta)^i$. Then we have that,

$$

\begin{aligned}

-\sum_{i=0}^{\infty} p_i \log p_i & \leq-\sum_{i=0}^{\infty} p_i \log q_i \

& =-\left(\log (\alpha) \sum_{i=0}^{\infty} p_i+\log (\beta) \sum_{i=0}^{\infty} i p_i\right) \

& =-\log \alpha-A \log \beta

\end{aligned}

$$

Notice that the final right hand side expression is independent of $\left{p_1\right}$, and that the inequality,

$$

-\sum_{i=0}^{\infty} p_i \log p_i \leq-\log \alpha-A \log \beta

$$

holds for all $\alpha, \beta$ such that,

$$

\sum_{i=0}^{\infty} \alpha \beta^i=1=\alpha \frac{1}{1-\beta} .

$$

The constraint on the expected value also requires that,

$$

\sum_{i=0}^{\infty} i \alpha \beta^i=A=\alpha \frac{\beta}{(1-\beta)^2} .

$$

Combining the two constraints we have,

$$

\begin{aligned}

\alpha \frac{\beta}{(1-\beta)^2} & =\left(\frac{\alpha}{1-\beta}\right)\left(\frac{\beta}{1-\beta}\right) \

& =\frac{\beta}{1-\beta} \

& =A,

\end{aligned}

$$

which implies that,

$$

\begin{aligned}

& \beta=\frac{A}{A+1} \

& \alpha=\frac{1}{A+1} .

\end{aligned}

$$

So the entropy maximizing distribution is,

$$

p_i=\frac{1}{A+1}\left(\frac{A}{A+1}\right)^i .

$$

Plugging these values into the expression for the maximum entropy,

$$

-\log \alpha-A \log \beta=(A+1) \log (A+1)-A \log A .

$$

The general form of the distribution,

$$

p_i=\alpha \beta^i

$$

can be obtained either by guessing or by Lagrange multipliers where,

$$

F\left(p_i, \lambda_1, \lambda_2\right)=-\sum_{i=0}^{\infty} p_i \log p_i+\lambda_1\left(\sum_{i=0}^{\infty} p_i-1\right)+\lambda_2\left(\sum_{i=0}^{\infty} i p_i-A\right)

$$

is the function whose gradient we set to 0 .

Many of you used Lagrange multipliers, but failed to argue that the result obtained is a global maximum. An argument similar to the above should have been used. On the other hand one could simply argue that since $-H(p)$ is convex, it has only one local minima, no local maxima and therefore Lagrange multiplier actually gives the global maximum for $H(p)$.

MY-ASSIGNMENTEXPERT™可以为您提供torontomu PCS810 Complex Networks复杂网络课程的代写代考和辅导服务!