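Optimization (also called mathematical programming) is the selection of a best element from a set of available alternatives. Optimization problems of various kinds arise in all quantitative disciplines, from computer science and engineering to operations research and economics, and the development of solution methods has interested mathematicians for centuries.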

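In the simplest case, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. More generally, optimization means finding the "best available" values of some objective function over a given domain (or input), for many different types of objective functions and domains. The general problem of nonconvex global optimization is NP-complete, and acceptable deep local minima are sought with heuristics such as genetic algorithms (GA), particle swarm optimization (PSO), and simulated annealing (SA).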


Transformation Method: Differentiation by Using an Integral Transformation

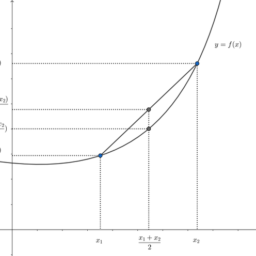

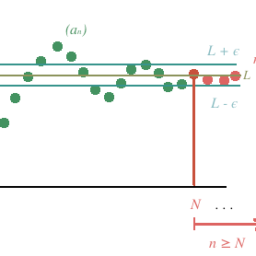

In many important cases, integral formulas for the first- and higher-order derivatives of probability functions and parameter-dependent integrals can be obtained by means of a certain integral transformation.

We now demonstrate the basic principle for the important probability functions

$$
\begin{aligned}
P(x) &:= \mathcal{P}\big(A(\omega)x \leq b(\omega)\big), \\
P_{i}(x) &:= \mathcal{P}\big(A_{i}(\omega)x \leq b_{i}(\omega)\big), \quad i=1,\ldots,m,
\end{aligned}
$$

occurring in the chance-constrained programming approach to linear programs with random data [51, 52, 69].
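As a baseline for the integral formulas that follow, $P(x)$ and $P_i(x)$ can be estimated by plain Monte Carlo sampling whenever draws of the random matrix $(A(\omega), b(\omega))$ are available. The sketch below assumes a hypothetical sampler `gaussian_sampler` with independent entries $a_{ik} \sim N(0,1)$, $b_i \sim N(\text{mean\_b}, 1)$; the function names and this distribution are illustrative choices, not taken from the text. For that distribution each $A_i x - b_i$ is normal, so the estimate can be compared against a closed form.

```python
import math
import random

def estimate_probabilities(x, sample_Ab, n_samples=100_000, seed=0):
    """Monte Carlo estimates of P(x) = P(A x <= b) and P_i(x) = P(A_i x <= b_i).

    sample_Ab(rng) must return one draw (A, b): A as a list of m rows of
    length r, b as a list of m numbers.
    """
    rng = random.Random(seed)
    joint_hits, row_hits = 0, None
    for _ in range(n_samples):
        A, b = sample_Ab(rng)
        # Check each row inequality A_i x <= b_i for this draw.
        ok = [sum(a_ik * x_k for a_ik, x_k in zip(row, x)) <= b_i
              for row, b_i in zip(A, b)]
        if row_hits is None:
            row_hits = [0] * len(ok)
        joint_hits += all(ok)          # P(x): all m rows hold simultaneously
        for i, flag in enumerate(ok):  # P_i(x): row i holds
            row_hits[i] += flag
    return joint_hits / n_samples, [h / n_samples for h in row_hits]

def gaussian_sampler(m, r, mean_b=1.0):
    """Hypothetical example distribution: all entries independent,
    a_ik ~ N(0, 1) and b_i ~ N(mean_b, 1)."""
    def sample(rng):
        A = [[rng.gauss(0.0, 1.0) for _ in range(r)] for _ in range(m)]
        b = [rng.gauss(mean_b, 1.0) for _ in range(m)]
        return A, b
    return sample

def normal_cdf(t):
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))

x = [1.0, 1.0]
P_joint, P_rows = estimate_probabilities(x, gaussian_sampler(m=2, r=2))

# For this sampler, A_i x - b_i ~ N(-mean_b, |x|^2 + 1) and the rows are independent,
# so P_i(x) = Phi(mean_b / sqrt(|x|^2 + 1)) and P(x) = P_i(x)^2.
P_row_exact = normal_cdf(1.0 / math.sqrt(sum(t * t for t in x) + 1.0))
print(P_joint, P_rows, P_row_exact, P_row_exact ** 2)
```

Sampling only gives function values; the integral representations developed below are what make derivatives with respect to $x$ accessible.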

Here, $(A, b)=(A(\omega), b(\omega))$ denotes a random $m \times(r+1)$-matrix with rows $\left(A_{i}, b_{i}\right), i=1, \ldots, m$, having a known probability distribution. Obviously, setting in (3.2)

$$
\begin{aligned}
y(a,x) &:= Ax - b \quad \text{with } a := (A,b), \\
y_{li} &:= -\infty \quad \text{with } < \text{ in the left inequality}, \\
y_{ui} &:= 0 \quad \text{with } \leq \text{ in the right inequality},
\end{aligned}
$$

$P(x)$ and $P_{i}(x)$, defined by (3.6a) and (3.6b), respectively, can be represented in the form (3.1a), (3.1b). Suppose that the random matrix $(A(\omega), b(\omega))$ has a probability density

$$
f = f(A,b) = f(a_{1},\ldots,a_{r},b),
$$

where $a_{k}$ denotes the $k$-th column of $A$. We find

$$
P(x) = \int_{\sum_{k=1}^{r} a_{k}x_{k} \leq b} f(a_{1},\ldots,a_{r},b) \prod_{k=1}^{r} da_{k}\, db.
$$

Furthermore, $P_{i}(x), i=1, \ldots, m$, can be represented by

$$
P_{i}(x) = \int_{\sum_{k=1}^{r} a_{ik}x_{k} \leq b_{i}} f_{i}(a_{i1},\ldots,a_{ir},b_{i}) \prod_{k=1}^{r} da_{ik}\, db_{i},
$$

where

$$
f_{i} = f_{i}(A_{i},b_{i}) = f_{i}(a_{i1},\ldots,a_{ir},b_{i})
$$

designates the (marginal) density of the random (row) vector $\left(A_{i}(\omega), b_{i}(\omega)\right)$. Assume that the integrals in (3.8a) and (3.8b) exist and are finite for each $x$ under consideration.
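To make the role of the integral transformation concrete, here is a sketch of one such transformation for $P_i$. It assumes $x_k \neq 0$, a continuously differentiable density $f_i$, and that differentiation under the integral sign is permitted; it rescales the $k$-th coordinate so that the integration domain no longer depends on $x_k$. This is an illustration of the principle, not necessarily the exact transformation used in the source.

$$
\begin{aligned}
P_i(x) &= \int_{\sum_{j=1}^{r} a_{ij}x_j \leq b_i} f_i(a_{i1},\ldots,a_{ir},b_i) \prod_{j=1}^{r} da_{ij}\, db_i \\
&= \int_{\sum_{j\neq k} a_{ij}x_j + u \leq b_i} f_i\Big(\ldots,\frac{u}{x_k},\ldots,b_i\Big)\,\frac{du}{|x_k|} \prod_{j\neq k} da_{ij}\, db_i \qquad \Big(a_{ik} = \frac{u}{x_k}\Big), \\
\frac{\partial}{\partial x_k}\left[\frac{1}{|x_k|}\, f_i\Big(\ldots,\frac{u}{x_k},\ldots,b_i\Big)\right]
&= -\frac{1}{x_k\,|x_k|}\left(f_i + a_{ik}\,\frac{\partial f_i}{\partial a_{ik}}\right)\bigg|_{a_{ik}=u/x_k}, \\
\frac{\partial P_i}{\partial x_k}(x) &= -\frac{1}{x_k}\int_{\sum_{j=1}^{r} a_{ij}x_j \leq b_i} \left(f_i + a_{ik}\,\frac{\partial f_i}{\partial a_{ik}}\right) \prod_{j=1}^{r} da_{ij}\, db_i .
\end{aligned}
$$

Because the transformed domain is independent of $x_k$, only the integrand is differentiated; substituting back $u = a_{ik}x_k$, $du = |x_k|\,da_{ik}$ gives the last line. Its integrand equals $\partial(a_{ik} f_i)/\partial a_{ik}$ and therefore still contains a derivative of the density $f_i$.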

Representation of the Derivatives by Surface Integrals

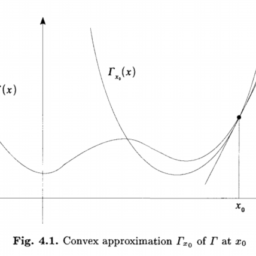

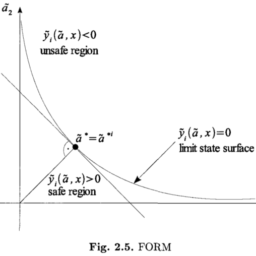

Using the Gauss divergence theorem [144], we obtain integral representations of $\frac{\partial P}{\partial x_{k}}$ and $\frac{\partial P_{i}}{\partial x_{k}}$ whose integrands do not involve derivatives of the densities $f$, $f_{i}$; in some cases this is advantageous (see Section 3.5).

We first consider the simpler case (3.13b). For a given $x$ fulfilling the assumptions of Corollary 3.1, $\frac{\partial P_{i}}{\partial x_{k}}$ can be represented by

$$
\frac{\partial P_{i}}{\partial x_{k}}(x) = -\frac{1}{x_{k}} \int_{B_{i}(x)} \operatorname{div} v^{(i,k)}(A_{i},b_{i})\, dA_{i}\, db_{i},
$$

where $B_{i}(x) := \{(A_{i},b_{i}) \in \mathbb{R}^{r+1} : (A_{i},b_{i})\hat{x} \leq 0\}$ with $\hat{x} := \begin{pmatrix} x \\ -1 \end{pmatrix}$, and the $r$-vector field $v^{(i,k)} = v^{(i,k)}(A_{i},b_{i})$ is defined by

$$
v_{j}^{(i,k)}(A_{i},b_{i}) := \begin{cases} a_{ik}\, f_{i}(A_{i},b_{i}), & j = k, \\ 0, & j \neq k. \end{cases}
$$
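The representation above can be checked numerically in the simplest case $r = 1$, where $A_i = a$ is a scalar and $\operatorname{div} v^{(i,k)} = \partial(a f_i)/\partial a$. The sketch assumes a hypothetical density that is not from the text: $a \sim N(1, 0.5^2)$ and $b_i \sim N(2, 1)$ independent, so $P_i(x)$ has a closed form. It compares three numbers: a central finite difference of $P_i$, the volume integral $-\frac{1}{x}\int_{B_i(x)} \operatorname{div} v^{(i,k)}$, and a surface integral obtained by applying the divergence theorem to the half-space $B_i(x)$. For the last one, the outward unit normal of $B_i(x)$ is $\hat{x}/\|\hat{x}\|$, so on the boundary hyperplane $A_i x = b_i$ one has $v^{(i,k)} \cdot n = a_{ik} f_i x_k / \|\hat{x}\|$, which suggests $\frac{\partial P_i}{\partial x_k}(x) = -\frac{1}{\|\hat{x}\|}\int_{A_i x = b_i} a_{ik} f_i \, dS$ (a sketch, assuming $f_i$ decays fast enough for the theorem to apply on this unbounded domain).

```python
import math

# Hypothetical example (not from the text): r = 1, A_i = a is a scalar, and
# a ~ N(MU_A, SIG_A^2), b_i ~ N(MU_B, SIG_B^2) are independent: f_i(a, b) = pdf_a(a) * pdf_b(b).
MU_A, SIG_A = 1.0, 0.5
MU_B, SIG_B = 2.0, 1.0
A_LO, A_HI = MU_A - 12 * SIG_A, MU_A + 12 * SIG_A  # truncation of the a-axis

def norm_pdf(t, mu, sig):
    return math.exp(-0.5 * ((t - mu) / sig) ** 2) / (sig * math.sqrt(2.0 * math.pi))

def norm_cdf(t, mu, sig):
    return 0.5 * (1.0 + math.erf((t - mu) / (sig * math.sqrt(2.0))))

def trapezoid(g, lo, hi, n=20_000):
    """Composite trapezoidal rule for the integral of g over [lo, hi]."""
    h = (hi - lo) / n
    return h * (0.5 * (g(lo) + g(hi)) + sum(g(lo + j * h) for j in range(1, n)))

def P_i(x):
    # a*x - b ~ N(MU_A*x - MU_B, SIG_A^2 x^2 + SIG_B^2); P_i(x) = P(a*x - b <= 0).
    s = math.sqrt(SIG_A ** 2 * x ** 2 + SIG_B ** 2)
    return norm_cdf(0.0, MU_A * x - MU_B, s)

def dP_finite_difference(x, h=1e-5):
    return (P_i(x + h) - P_i(x - h)) / (2.0 * h)

def dP_volume(x):
    # -(1/x) * integral over B_i(x) = {b >= a x} of div v, where div v = d(a f_i)/da
    # = f_i * (1 - a (a - MU_A) / SIG_A^2) for this Gaussian f_i.
    # The inner b-integral over {b >= a x} is P(b >= a x), taken in closed form.
    def g(a):
        div_factor = 1.0 - a * (a - MU_A) / SIG_A ** 2
        return norm_pdf(a, MU_A, SIG_A) * div_factor * (1.0 - norm_cdf(a * x, MU_B, SIG_B))
    return -trapezoid(g, A_LO, A_HI) / x

def dP_surface(x):
    # Boundary of B_i(x) is the line b = a x, parametrized by a with dS = |x_hat| da;
    # v . n = a f_i x / |x_hat|, so -(1/x) * (surface integral) = -integral of a f_i(a, a x) da.
    return -trapezoid(lambda a: a * norm_pdf(a, MU_A, SIG_A) * norm_pdf(a * x, MU_B, SIG_B),
                      A_LO, A_HI)

x = 1.5
print(dP_finite_difference(x), dP_volume(x), dP_surface(x))
```

All three values agree to quadrature accuracy here. The volume form needs $\partial f_i/\partial a_{ik}$ and divides by $x_k$, whereas the surface form uses only $f_i$ itself on the boundary hyperplane, which illustrates why avoiding density derivatives can be advantageous.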
