3.8 Independence of r.v.s

Just as we had the notion of independence of events, we can define independence of random variables. Intuitively, if two r.v.s $X$ and $Y$ are independent, then knowing the value of $X$ gives no information about the value of $Y$, and vice versa. The definition formalizes this idea.

Definition 3.8.1 (Independence of two r.v.s). Random variables $X$ and $Y$ are said to be independent if

$$

P(X \leq x, Y \leq y)=P(X \leq x) P(Y \leq y)

$$

for all $x, y \in \mathbb{R}$.

In the discrete case, this is equivalent to the condition

$$

P(X=x, Y=y)=P(X=x) P(Y=y),

$$

for all $x, y$ with $x$ in the support of $X$ and $y$ in the support of $Y$.
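The discrete condition can be checked mechanically when the joint PMF is small. The following is a minimal sketch, assuming the joint PMF is supplied as a dictionary mapping `(x, y)` to a probability; the helper names `marginals` and `is_independent` and the two coin-flip examples are illustrative and not part of the text.

```python
from fractions import Fraction
from itertools import product


def marginals(joint):
    """Return the marginal PMFs of X and Y from a joint PMF {(x, y): prob}."""
    px, py = {}, {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0) + p
        py[y] = py.get(y, 0) + p
    return px, py


def is_independent(joint):
    """Check P(X=x, Y=y) == P(X=x) P(Y=y) for every x, y in the supports."""
    px, py = marginals(joint)
    return all(joint.get((x, y), 0) == px[x] * py[y]
               for x, y in product(px, py))


# Hypothetical example: X and Y are two separate fair coin flips (0 or 1).
two_flips = {(x, y): Fraction(1, 4) for x, y in product([0, 1], repeat=2)}

# Hypothetical example: Y is a copy of X, so Y is completely determined by X.
copied_flip = {(0, 0): Fraction(1, 2), (1, 1): Fraction(1, 2)}

print(is_independent(two_flips))    # True
print(is_independent(copied_flip))  # False
```

Exact `Fraction` arithmetic is used so that the equality test is not affected by floating-point rounding.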

The definition for more than two r.v.s is analogous.

Definition 3.8.2 (Independence of many r.v.s). Random variables $X_{1}, \ldots, X_{n}$ are independent if

$$

P\left(X_{1} \leq x_{1}, \ldots, X_{n} \leq x_{n}\right)=P\left(X_{1} \leq x_{1}\right) \cdots P\left(X_{n} \leq x_{n}\right)

$$

for all $x_{1}, \ldots, x_{n} \in \mathbb{R}$. For infinitely many r.v.s, we say that they are independent if every finite subset of the r.v.s is independent.

Comparing this to the criteria for independence of $n$ events, it may seem strange that the independence of $X_{1}, \ldots, X_{n}$ requires just one equality, whereas for events we needed to verify pairwise independence for all $\binom{n}{2}$ pairs, three-way independence for all $\binom{n}{3}$ triplets, and so on. However, upon closer examination of the definition, we see that the equality must hold for all $x_{1}, \ldots, x_{n} \in \mathbb{R}$, which amounts to infinitely many conditions! If we can find even a single list of values $x_{1}, \ldots, x_{n}$ for which the above equality fails to hold, then $X_{1}, \ldots, X_{n}$ are not independent.

3.8.3. If $X_{1}, \ldots, X_{n}$ are independent, then they are pairwise independent, i.e., $X_{i}$ is independent of $X_{j}$ for $i \neq j$. The idea behind proving that $X_{i}$ and $X_{j}$ are independent is to let all the $x_{k}$ other than $x_{i}, x_{j}$ go to $\infty$ in the definition of independence, since we already know that $X_{k}<\infty$ is true (though it takes some work to give a complete justification for the limit). But pairwise independence does not imply independence in general, as we saw in Chapter 2 for events.
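A small enumeration may help make the last point concrete. The sketch below uses a standard construction that is assumed here and may differ from the Chapter 2 example: $X_1$ and $X_2$ are fair bits and $X_3$ is their XOR. It checks factorization of the joint PMF, which for discrete r.v.s plays the same role as the CDF condition; the helper names `pmf` and `factorizes` are illustrative.

```python
from fractions import Fraction
from itertools import combinations, product

# Assumed setup (not from the text): X1, X2 are fair bits, X3 = X1 XOR X2.
outcomes = [(a, b, a ^ b) for a, b in product([0, 1], repeat=2)]
prob = Fraction(1, len(outcomes))  # each of the 4 outcomes is equally likely


def pmf(indices, values):
    """P(X_i = v_i for each i in indices), computed by counting outcomes."""
    hits = sum(all(w[i] == v for i, v in zip(indices, values)) for w in outcomes)
    return hits * prob


def factorizes(indices):
    """Check whether the joint PMF of the chosen r.v.s is the product of marginals."""
    for values in product([0, 1], repeat=len(indices)):
        expected = Fraction(1)
        for i, v in zip(indices, values):
            expected *= pmf((i,), (v,))
        if pmf(indices, values) != expected:
            return False
    return True


pairwise = all(factorizes(pair) for pair in combinations(range(3), 2))
jointly = factorizes((0, 1, 2))
print(pairwise, jointly)  # True False
```

Every pair factorizes, yet the triple does not: for instance $P(X_1=0, X_2=0, X_3=1)=0$, while the product of the three marginals is $1/8$.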

Example 3.8.4. In a roll of two fair dice, if $X$ is the number on the first die and $Y$ is the number on the second die, then $X+Y$ is not independent of $X-Y$ since

$0=P(X+Y=12, X-Y=1) \neq P(X+Y=12) P(X-Y=1)=\frac{1}{36} \cdot \frac{5}{36}$

Knowing the total is 12 tells us the difference must be 0, so the r.v.s provide information about each other.
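The probabilities in this example can be reproduced by enumerating the 36 equally likely outcomes. This is a minimal sketch; the helper `prob` and the variable names are illustrative.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes (x, y) of two fair six-sided dice.
rolls = list(product(range(1, 7), repeat=2))


def prob(event):
    """Probability of an event given as a predicate on (x, y)."""
    return Fraction(sum(1 for x, y in rolls if event(x, y)), len(rolls))


p_joint = prob(lambda x, y: x + y == 12 and x - y == 1)
p_sum = prob(lambda x, y: x + y == 12)
p_diff = prob(lambda x, y: x - y == 1)

print(p_joint)         # 0
print(p_sum, p_diff)   # 1/36 5/36
print(p_sum * p_diff)  # 5/1296
```

The joint probability is 0 while the product of the marginals is positive, so the defining equality fails at this pair of values.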

If $X$ and $Y$ are independent then it is also true, e.g., that $X^{2}$ is independent of $Y^{4}$, since if $X^{2}$ provided information about $Y^{4}$, then $X$ would give information about $Y$ (using $X^{2}$ and $Y^{4}$ as intermediaries: $X$ determines $X^{2}$, which would give information about $Y^{4}$, which in turn would give information about $Y$). More generally, we have the following result (for which we omit a formal proof).

Theorem 3.8.5 (Functions of independent r.v.s). If $X$ and $Y$ are independent r.v.s, then any function of $X$ is independent of any function of $Y$.
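As a sanity check (not a proof), the theorem can be verified by brute force in a small case. The sketch below assumes $X$ and $Y$ are two independent fair dice and uses two arbitrarily chosen functions, `g` and `h`, which are illustrative and not from the text.

```python
from fractions import Fraction
from itertools import product

# X and Y: two independent fair dice, so each pair (x, y) has probability 1/36.
rolls = list(product(range(1, 7), repeat=2))


def g(x):
    return (x - 3) ** 2  # illustrative function of X (not one-to-one)


def h(y):
    return y % 2  # illustrative function of Y (parity)


def prob(event):
    """Probability of an event given as a predicate on (x, y)."""
    return Fraction(sum(1 for x, y in rolls if event(x, y)), len(rolls))


g_values = sorted({g(x) for x, _ in rolls})
h_values = sorted({h(y) for _, y in rolls})

# Compare the joint PMF of (g(X), h(Y)) with the product of its marginals.
functions_independent = all(
    prob(lambda x, y: g(x) == a and h(y) == b)
    == prob(lambda x, y: g(x) == a) * prob(lambda x, y: h(y) == b)
    for a, b in product(g_values, h_values)
)
print(functions_independent)  # True
```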

Definition 3.8.6 (i.i.d.). We will often work with random variables that are independent and have the same distribution. We call such r.v.s independent and identically distributed, or i.i.d. for short.

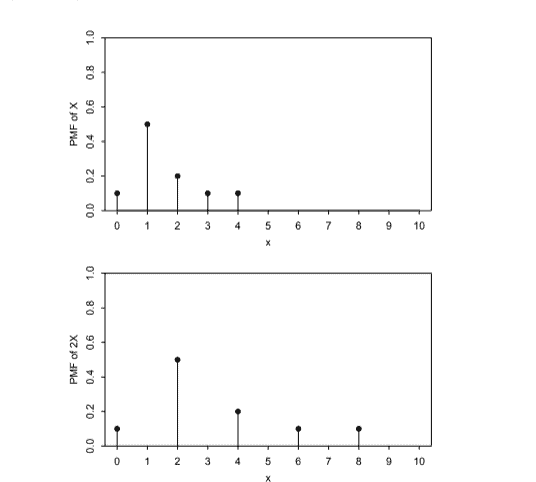

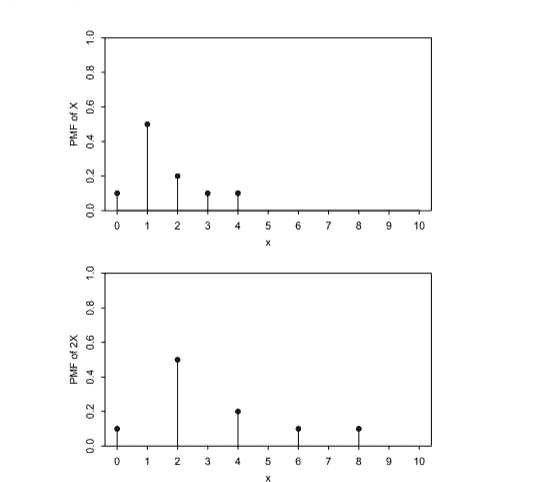

3.8.7 (i. vs. i.d.). “Independent” and “identically distributed” are two often-confused but completely different concepts. Random variables are independent if they provide no information about each other; they are identically distributed if they have the same PMF (or equivalently, the same CDF). Whether two r.v.s are independent has nothing to do with whether they have the same distribution. We can have r.v.s that are:

- independent and identically distributed. Let $X$ be the result of a die roll, and let $Y$ be the result of a second, independent die roll. Then $X$ and $Y$ are i.i.d.

- independent and not identically distributed. Let $X$ be the result of a die roll, and let $Y$ be the closing price of the Dow Jones (a stock market index) a month from now. Then $X$ and $Y$ provide no information about each other (one would fervently hope), and $X$ and $Y$ do not have the same distribution.
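The two properties can be tested separately on a small joint PMF. The sketch below is illustrative only: a stock index cannot be enumerated, so a fair coin stands in for the second r.v. in the non-identically-distributed case (an assumption, not the example from the text), and the helpers `pmf` and `describe` are hypothetical names.

```python
from fractions import Fraction
from itertools import product


def pmf(values_with_probs):
    """Collapse an iterable of (value, prob) pairs into a PMF dictionary."""
    out = {}
    for v, p in values_with_probs:
        out[v] = out.get(v, 0) + p
    return out


def describe(joint):
    """Return (independent?, identically distributed?) for a joint PMF {(x, y): prob}."""
    px = pmf((x, p) for (x, _), p in joint.items())
    py = pmf((y, p) for (_, y), p in joint.items())
    independent = all(joint.get((x, y), 0) == px[x] * py[y]
                      for x, y in product(px, py))
    return independent, px == py


die = range(1, 7)
# Two separate fair die rolls.
two_dice = {(x, y): Fraction(1, 36) for x, y in product(die, die)}
# A fair die and a fair coin (the coin is an assumed stand-in for the second r.v.).
die_and_coin = {(x, c): Fraction(1, 12) for x, c in product(die, [0, 1])}

print(describe(two_dice))      # (True, True)
print(describe(die_and_coin))  # (True, False)
```

Independence is a property of the joint PMF, whereas being identically distributed only compares the two marginal PMFs, which is why the two flags can vary separately.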

