
4.5 Law of the unconscious statistician (LOTUS)

As we saw in the St. Petersburg paradox, $E(g(X))$ does not equal $g(E(X))$ in general if $g$ is not linear. So how do we correctly calculate $E(g(X))$? Since $g(X)$ is an r.v., one way is to first find the distribution of $g(X)$ and then use the definition of expectation. Perhaps surprisingly, it turns out that it is possible to find $E(g(X))$ directly using the distribution of $X$, without first having to find the distribution of $g(X)$. This is done using the law of the unconscious statistician (LOTUS).

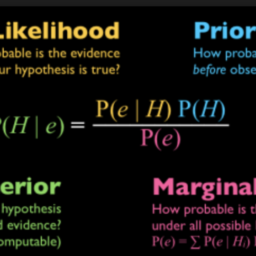

Theorem 4.5.1 (LOTUS). If $X$ is a discrete r.v. and $g$ is a function from $\mathbb{R}$ to $\mathbb{R}$, then

$$
E(g(X))=\sum_{x} g(x) P(X=x)
$$

where the sum is taken over all possible values of $X$.

This means that we can get the expected value of $g(X)$ knowing only $P(X=x)$, the PMF of $X$; we don’t need to know the PMF of $g(X)$. The name comes from the fact that in going from $E(X)$ to $E(g(X))$ it is tempting just to change $x$ to $g(x)$ in the definition, which can be done very easily and mechanically, perhaps in a state of unconsciousness. On second thought, it may sound too good to be true that finding the distribution of $g(X)$ is not needed for this calculation, but LOTUS says it is true.
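As a quick numerical check of the theorem, the sketch below computes $E(g(X))$ two ways: directly from the PMF of $X$ via LOTUS, and the long way, by first building the PMF of $g(X)$. The PMF on $\{-1, 0, 1, 2\}$, the choice $g(x)=x^2$ (which is not one-to-one), and the helper names `lotus` and `pmf_of_g` are illustrative assumptions, not taken from the text.

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical PMF of X (illustrative values only): P(X = x) for each x in the support.
pmf_X = {-1: Fraction(1, 4), 0: Fraction(1, 4), 1: Fraction(1, 4), 2: Fraction(1, 4)}

def g(x):
    """A non-one-to-one function: g(-1) == g(1)."""
    return x ** 2

def lotus(pmf, g):
    """E(g(X)) = sum over x of g(x) * P(X = x), using only the PMF of X."""
    return sum(g(x) * p for x, p in pmf.items())

def pmf_of_g(pmf, g):
    """Build the PMF of Y = g(X) by adding up P(X = x) over all x with g(x) = y."""
    out = defaultdict(Fraction)
    for x, p in pmf.items():
        out[g(x)] += p
    return dict(out)

e_lotus = lotus(pmf_X, g)                         # LOTUS: no distribution of g(X) needed
pmf_Y = pmf_of_g(pmf_X, g)                        # the long way: find the PMF of g(X) first
e_direct = sum(y * p for y, p in pmf_Y.items())   # then apply the definition of expectation
e_X = sum(x * p for x, p in pmf_X.items())        # E(X), for comparison with g(E(X))

print(e_lotus, e_direct, g(e_X))
```

Under these assumed values, both routes give $3/2$, while $g(E(X)) = (1/2)^2 = 1/4$, which illustrates that $E(g(X))$ and $g(E(X))$ differ for nonlinear $g$.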


Before proving LOTUS in general, let’s see why it is true in some special cases. Let $X$ have support $0,1,2, \ldots$ with probabilities $p_{0}, p_{1}, p_{2}, \ldots$, so the PMF is $P(X=n)=p_{n}$. Then $X^{3}$ has support $0^{3}, 1^{3}, 2^{3}, \ldots$ with probabilities $p_{0}, p_{1}, p_{2}, \ldots$, so

$$
\begin{aligned}
&E(X)=\sum_{n=0}^{\infty} n p_{n} \\
&E\left(X^{3}\right)=\sum_{n=0}^{\infty} n^{3} p_{n}
\end{aligned}
$$

As claimed by LOTUS, to edit the expression for $E(X)$ into an expression for $E\left(X^{3}\right)$, we can just change the $n$ in front of the $p_{n}$ to an $n^{3}$. This was an easy example since the function $g(x)=x^{3}$ is one-to-one. But LOTUS holds much more generally. The key insight needed for the proof of LOTUS for general $g$ is the same as the one we used for the proof of linearity: the expectation of $g(X)$ can be written in ungrouped form as

$$
E(g(X))=\sum_{s} g(X(s)) P(\{s\})
$$

where the sum is over all the pebbles in the sample space, but we can also group the pebbles into super-pebbles according to the value that $X$ assigns to them. Within the super-pebble $X=x$, $g(X)$ always takes on the value $g(x)$. Therefore,

$$
\begin{aligned}
E(g(X)) &=\sum_{s} g(X(s)) P(\{s\}) \\
&=\sum_{x} \sum_{s: X(s)=x} g(X(s)) P(\{s\}) \\
&=\sum_{x} g(x) \sum_{s: X(s)=x} P(\{s\}) \\
&=\sum_{x} g(x) P(X=x) .
\end{aligned}
$$

In the last step, we used the fact that $\sum_{s: X(s)=x} P(\{s\})$ is the weight of the super-pebble $X=x$.
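The grouping argument can be checked numerically on a small finite sample space. The sketch below assumes a hypothetical pebble world of 36 equally weighted outcomes of two fair dice, with $X$ the difference of the two rolls and $g(x)=x^2$; these choices and the variable names are illustrative only. It evaluates the ungrouped sum over pebbles and the grouped sum over super-pebbles and confirms that they agree.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Hypothetical pebble world: 36 equally weighted outcomes (pebbles) of two fair dice.
pebbles = list(product(range(1, 7), repeat=2))
P = {s: Fraction(1, 36) for s in pebbles}   # P({s}), the mass of each pebble

def X(s):
    """The r.v. X assigns to each pebble the difference of the two rolls."""
    return s[0] - s[1]

def g(x):
    return x * x

# Ungrouped form: sum over every pebble s of g(X(s)) * P({s}).
ungrouped = sum(g(X(s)) * P[s] for s in pebbles)

# Group pebbles into super-pebbles by the value of X; each weight is P(X = x).
super_pebbles = defaultdict(Fraction)
for s in pebbles:
    super_pebbles[X(s)] += P[s]

# Grouped form (LOTUS): sum over x of g(x) * P(X = x).
grouped = sum(g(x) * w for x, w in super_pebbles.items())

print(ungrouped, grouped)
```

Exact arithmetic with `Fraction` is used here so that the two sums can be compared for equality without floating-point rounding.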
