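Statistical computing is the bond between statistics and computer science. It refers to statistical methods that are enabled by computational methods. It is the area of computational science (or scientific computing) specific to the mathematical science of statistics. The area is also developing rapidly, which has led to calls for a broader concept of computing to be taught as part of general statistical education. As in traditional statistics, the goal is to transform raw data into knowledge,[2] but the focus lies on computer-intensive statistical methods, for example in settings with very large sample sizes and non-homogeneous data sets.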

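Many statistical modelling and data analysis techniques can be difficult to master and apply, so computer software is often needed to handle large data sets and obtain useful results. S-Plus is widely regarded as one of the most powerful and flexible statistical software packages. It lets users apply many statistical methods, from simple regression to time series and multivariate analysis. The text covers a wide range of basic and more advanced statistical methods, concentrates on graphical inspection, and gives step-by-step instructions to help non-statisticians fully understand the methods.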


Statistical Computing | Bootstrap estimates

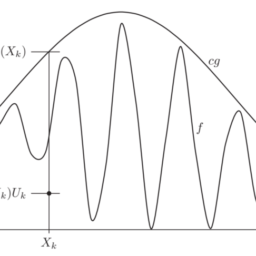

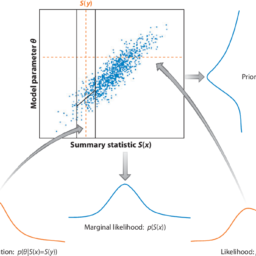

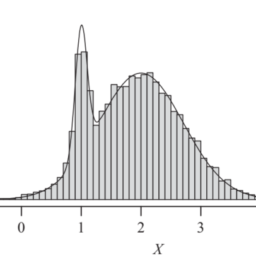

The basis of all resampling methods is to replace the distribution given by a model with the ‘empirical distribution’ of the given data, as described in the following definition.

Definition 5.7 Given a sequence $x=\left(x_{1}, x_{2}, \ldots, x_{M}\right)$, the distribution of $X^{*}=x_{K}$, where the index $K$ is random and uniformly distributed on the set $\{1,2, \ldots, M\}$, is called the empirical distribution of the $x_{i}$. In this chapter we denote the empirical distribution of $x$ by $P_{x}^{*}$.
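Definition 5.7 translates directly into a sampling procedure: pick an index $K$ uniformly at random and return $x_K$. The following is a minimal sketch using only the Python standard library. The function name `sample_empirical` and the data `x` are hypothetical, and indices are 0-based as is usual in Python.

```python
import random
from collections import Counter

def sample_empirical(x, size, rng=random):
    """Draw `size` values X* = x_K, with K uniform on the indices of x."""
    m = len(x)
    # Choose a uniformly random index K for each draw, then return x_K.
    return [x[rng.randrange(m)] for _ in range(size)]

x = [2, 3, 3, 5]            # hypothetical data; the value 3 occurs twice
rng = random.Random(0)      # fixed seed for reproducibility
draws = sample_empirical(x, 10_000, rng)
freq = Counter(draws)
# Relative frequencies of the drawn values approximate P(X* = a).
print({a: freq[a] / len(draws) for a in sorted(freq)})
```

Because the value 3 occurs twice in `x`, its relative frequency among the draws should be close to 1/2. The values 2 and 5 should each appear about a quarter of the time.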

In the definition, the vector $x$ is assumed to be fixed. The randomness in $X^{*}$ stems from the choice of a random element, with index $K \sim \mathcal{U}\{1, 2, \ldots, M\}$, of this fixed sequence. Computational methods which are based on the idea of approximating an unknown ‘true’ distribution by an empirical distribution are called bootstrap methods. Assume that $X^{*}$ is distributed according to the empirical distribution $P_{x}^{*}$. Then we have

$$
P\left(X^{*}=a\right)=\frac{1}{M} \sum_{i=1}^{M} \mathbb{1}_{\{a\}}\left(x_{i}\right),
$$

that is, under the empirical distribution, the probability that $X^{*}$ equals $a$ is given by the relative frequency of occurrences of $a$ in the given data. Similarly, we have the relations

$$
P\left(X^{*} \in A\right)=\frac{1}{M} \sum_{i=1}^{M} \mathbb{1}_{A}\left(x_{i}\right)
$$

and

$$
\begin{aligned}
\mathbb{E}\left(f\left(X^{*}\right)\right) &=\sum_{a \in\left\{x_{1}, \ldots, x_{M}\right\}} f(a) P\left(X^{*}=a\right) \\
&=\sum_{a \in\left\{x_{1}, \ldots, x_{M}\right\}} f(a) \frac{1}{M} \sum_{i=1}^{M} \mathbb{1}_{\{a\}}\left(x_{i}\right)=\frac{1}{M} \sum_{i=1}^{M} f\left(x_{i}\right).
\end{aligned}
$$

Some care is needed when verifying this relation: the sums where the index $a$ runs over the set $\{x_{1}, \ldots, x_{M}\}$ have only one term for each element of the set, even if the corresponding value occurs repeatedly in the given data.
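The identities for $P(X^*=a)$, $P(X^* \in A)$ and $\mathbb{E}(f(X^*))$, together with the caveat about summing over distinct values, can be checked exactly with rational arithmetic. This sketch uses hypothetical helper names and made-up data containing a repeated value. `empirical_expectation` sums over the set of distinct values, as in the first line of the derivation. `wrong` instead sums over the data with repetitions, so it counts the repeated value twice.

```python
from fractions import Fraction

def empirical_prob(x, a):
    """P(X* = a): relative frequency of the value a in x."""
    return Fraction(sum(1 for xi in x if xi == a), len(x))

def empirical_prob_set(x, A):
    """P(X* in A): relative frequency of data points lying in the set A."""
    return Fraction(sum(1 for xi in x if xi in A), len(x))

def empirical_expectation(x, f):
    """E f(X*) as a sum over the *distinct* values a in {x_1, ..., x_M}."""
    return sum(f(a) * empirical_prob(x, a) for a in set(x))

def f(t):
    return t * t                                      # arbitrary test function

x = [2, 3, 3, 5]                                      # hypothetical data; 3 is repeated
lhs = empirical_expectation(x, f)                     # sum over distinct values
rhs = Fraction(sum(f(xi) for xi in x), len(x))        # (1/M) * sum_i f(x_i)
wrong = sum(f(a) * empirical_prob(x, a) for a in x)   # counts a = 3 twice
print(lhs, rhs, wrong)                                # 47/4 47/4 65/4
print(empirical_prob_set(x, {3, 5}))                  # 3/4
```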

Statistical Computing | Applications to statistical inference

The main application of the bootstrap method in statistical inference is to quantify the accuracy of parameter estimates.

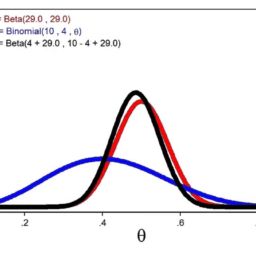

In this section, we consider parameters as functions of the corresponding distribution: if $\theta$ is a parameter, for example the mean or the variance, then we write $\theta(P)$ for the value of this parameter under the distribution $P$. In statistics, there are many ways of constructing estimators for a parameter $\theta$. One general method for constructing parameter estimators, the plug-in principle, is given in the following definition.

Definition 5.12 Consider an estimator $\hat{\theta}_{n}=\hat{\theta}_{n}\left(X_{1}, \ldots, X_{n}\right)$ for a parameter $\theta(P)$. The estimator $\hat{\theta}_{n}$ satisfies the plug-in principle if it satisfies the relation

$$
\hat{\theta}_{n}\left(x_{1}, \ldots, x_{n}\right)=\theta\left(P_{x}^{*}\right)
$$

for all $x=\left(x_{1}, \ldots, x_{n}\right)$, where $P_{x}^{*}$ is the empirical distribution of $x$. In this case, $\hat{\theta}_{n}$ is called the plug-in estimator for $\theta$.
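As an illustration of Definition 5.12, the plug-in estimators of the mean and the variance are obtained by evaluating $\theta$ at $P_x^*$. This sketch assumes a finite distribution is represented as a Python dict mapping values to probabilities. The helper names and the data are hypothetical. For the variance, the plug-in principle yields the divisor $n$ rather than $n-1$. The plug-in estimate therefore agrees with `statistics.pvariance`, not with the unbiased `statistics.variance`.

```python
import statistics
from fractions import Fraction

def empirical_distribution(x):
    """P_x^*: map each distinct value to its relative frequency in x."""
    n = len(x)
    return {a: Fraction(x.count(a), n) for a in set(x)}

def expectation(dist, f):
    """E f(X) for a finite distribution given as {value: probability}."""
    return sum(f(a) * p for a, p in dist.items())

def mean_of(dist):
    """theta(P) = E X."""
    return expectation(dist, lambda a: a)

def variance_of(dist):
    """theta(P) = E (X - E X)^2."""
    mu = mean_of(dist)
    return expectation(dist, lambda a: (a - mu) ** 2)

x = [2, 3, 3, 5]                        # hypothetical data
P_star = empirical_distribution(x)
theta_mean = mean_of(P_star)            # plug-in estimate of the mean
theta_var = variance_of(P_star)         # plug-in estimate of the variance
print(theta_mean, theta_var)            # 13/4 19/16
print(theta_var == statistics.pvariance(x))   # True: divisor n
print(theta_var == statistics.variance(x))    # False: divisor n - 1
```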

Since the idea of bootstrap methods is to approximate the distribution $P$ by the empirical distribution $P_{x}^{*}$, plug-in estimators are particularly useful in conjunction with bootstrap methods.
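To connect plug-in estimators with the accuracy question raised at the start of this section, here is a sketch of the standard nonparametric bootstrap estimate of a standard error. It draws many samples of size $n$ from $P_x^*$ (that is, it samples the data with replacement), recomputes the estimator on each sample, and takes the standard deviation of the replicates. This is the generic textbook technique, not necessarily the exact procedure the source develops later. `bootstrap_se`, `n_boot=2000`, the seeds, and the simulated data are all illustrative assumptions.

```python
import random
import statistics

def bootstrap_se(x, estimator, n_boot=2000, seed=0):
    """Bootstrap standard error of `estimator` applied to the data x.

    Each bootstrap sample consists of len(x) independent draws from the
    empirical distribution P_x^* (i.e. sampling x with replacement).
    """
    rng = random.Random(seed)
    n = len(x)
    replicates = []
    for _ in range(n_boot):
        sample = [x[rng.randrange(n)] for _ in range(n)]
        replicates.append(estimator(sample))
    # The spread of the replicates approximates the estimator's sampling variability.
    return statistics.stdev(replicates)

data_rng = random.Random(1)
x = [data_rng.gauss(10.0, 2.0) for _ in range(50)]   # simulated data
se_mean = bootstrap_se(x, statistics.fmean)
print(se_mean)
```

For the mean, the exact bootstrap standard error is the plug-in standard deviation divided by $\sqrt{n}$. The expression `statistics.pstdev(x) / len(x) ** 0.5` therefore gives a check on the Monte Carlo result.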
