MY-ASSIGNMENTEXPERT™ offers ghostwriting, exam-taking, and tutoring services for the Stanford course EE276/Stats376a: Information Theory.

This is a successful ghostwriting case for Stanford University's Information Theory course.

EE276/Stats376a Course Overview

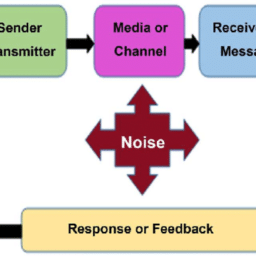

Information theory was invented by Claude E. Shannon in 1948 as a mathematical theory for communication but has subsequently found a broad range of applications. The first two-thirds of the course cover the core concepts of information theory, including entropy and mutual information, and how they emerge as fundamental limits of data compression and communication. The last one-third of the course focuses on applications of information theory.

Prerequisites

The course will follow this outline:

0. Overview

1. Basic Concepts (1 week)

- Entropy, relative entropy, mutual information.

2. Entropy and data compression (2 weeks)

- Source entropy rate. Typical sequences and asymptotic equipartition property.

- Source coding theorem. Huffman and arithmetic codes.

- Universal compression and distribution estimation.

3. Mutual information, capacity and communication (4 weeks)

- Channel capacity. Fano’s inequality and data processing theorem.

- Jointly typical sequences. Noisy channel coding theorem.

- Achieving capacity efficiently: polar codes.

- Gaussian channels, continuous random variables and differential entropies.

- Optimality of source-channel separation.

4. Topics in Information Theory (2 weeks)

- Slepian-Wolf distributed compression

- Information theoretic cryptography and common randomness

- Network coding

EE276/Stats376a: Information Theory HELP(EXAM HELP, ONLINE TUTOR)

Homework must be turned in online via Gradescope no later than 5 pm on Friday, April 14. No late homework will be accepted.

The first four problems correspond to Problems 2.1, 2.3, 2.8, and 2.12 in Cover & Thomas.

Coin flips. A fair coin is flipped until the first head occurs. Let $X$ denote the number of flips required.

(a) Find the entropy $H(X)$ in bits. The following expressions may be useful:

$$
\sum_{n=0}^{\infty} r^n=\frac{1}{1-r}, \quad \sum_{n=0}^{\infty} n r^n=\frac{r}{(1-r)^2} .
$$

(b) A random variable $X$ is drawn according to this distribution. Find an “efficient” sequence of yes-no questions of the form, “Is $X$ contained in the set $S$?” Compare $H(X)$ to the expected number of questions required to determine $X$.
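A hand derivation for this problem can be sanity-checked numerically. The sketch below is only a check under stated assumptions, not a solution method: it truncates the infinite sums at a hypothetical cutoff `terms`, and it assumes one particular question scheme ("Is $X = 1$?", "Is $X = 2$?", and so on), which is a choice made here rather than something the problem prescribes. The function names are also made up for illustration.

```python
import math

def geometric_entropy_bits(p=0.5, terms=200):
    """Truncated-sum entropy (in bits) of the number of flips until the first head.

    p is the probability of heads; terms is an assumed truncation point.
    """
    total = 0.0
    for n in range(1, terms + 1):
        prob = (1 - p) ** (n - 1) * p  # P(X = n)
        if prob > 0:
            total -= prob * math.log2(prob)
    return total

def expected_questions(p=0.5, terms=200):
    """Expected number of questions when asking "Is X = 1?", "Is X = 2?", ... in order.

    Under this assumed scheme, the outcome X = n needs exactly n questions.
    """
    return sum(n * (1 - p) ** (n - 1) * p for n in range(1, terms + 1))

entropy = geometric_entropy_bits()
questions = expected_questions()
print(f"H(X) ~ {entropy:.6f} bits, expected questions ~ {questions:.6f}")
```

Changing `p` away from 0.5 shows how the two quantities drift apart for a biased coin under the same question scheme.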

Minimum entropy. What is the minimum value of $H\left(p_1, \ldots, p_n\right)=H(\mathbf{p})$ as $\mathbf{p}$ ranges over the set of $n$-dimensional probability vectors? Find all $\mathbf{p}$’s that achieve this minimum.

Drawing with and without replacement. An urn contains $r$ red, $w$ white, and $b$ black balls. Which has higher entropy, drawing $k \geq 2$ balls from the urn with replacement or without replacement? Set it up and show why. (There is both a hard way and a relatively simple way to do this.)
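Before setting up the argument, it can help to compare the two schemes on a small concrete urn. The following is a brute-force sketch, not a proof: it enumerates every ordered color sequence of length $k$ and computes the entropy of that sequence under each scheme. The urn contents (`red: 2, white: 1, black: 1`), the choice $k = 2$, and the function name are all assumptions picked for illustration.

```python
import math
from itertools import product

def sequence_entropy_bits(counts, k, replace):
    """Entropy (in bits) of the ordered color sequence produced by k draws.

    counts maps color -> number of balls; requires k <= total balls
    when drawing without replacement.
    """
    total_balls = sum(counts.values())
    entropy = 0.0
    for seq in product(list(counts), repeat=k):
        remaining = dict(counts)
        left = total_balls
        prob = 1.0
        for color in seq:
            prob *= remaining[color] / left
            if prob == 0:
                break  # this sequence is impossible
            if not replace:
                remaining[color] -= 1
                left -= 1
        if prob > 0:
            entropy -= prob * math.log2(prob)
    return entropy

urn = {"red": 2, "white": 1, "black": 1}  # hypothetical urn
h_with = sequence_entropy_bits(urn, k=2, replace=True)
h_without = sequence_entropy_bits(urn, k=2, replace=False)
print(f"with replacement: {h_with:.4f} bits, without: {h_without:.4f} bits")
```

A single example like this only suggests the direction of the inequality; the general claim still needs the argument the problem asks for.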

Example of joint entropy. Let $p(x, y)$ be given by

Find

(a) $H(X), H(Y)$.

(b) $H(X \mid Y), H(Y \mid X)$.

(c) $H(X, Y)$.

(d) $H(Y)-H(Y \mid X)$.

(e) $I(X ; Y)$.

(f) Draw a Venn diagram for the quantities in (a) through (e).
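The quantities in (a) through (e) can all be computed mechanically from a joint pmf. The sketch below assumes a hypothetical 2×2 joint distribution (the values in `joint` are chosen here for illustration and are not necessarily the ones intended by the problem) and uses the chain rule $H(X \mid Y)=H(X, Y)-H(Y)$. Helper names are illustrative.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits, with the convention 0 * log 0 = 0."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def joint_quantities(joint):
    """Compute entropies and mutual information from a dict {(x, y): p(x, y)}."""
    px, py = {}, {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0.0) + p  # marginal of X
        py[y] = py.get(y, 0.0) + p  # marginal of Y
    h_x = entropy_bits(px.values())
    h_y = entropy_bits(py.values())
    h_xy = entropy_bits(joint.values())
    return {
        "H(X)": h_x,
        "H(Y)": h_y,
        "H(X,Y)": h_xy,
        "H(X|Y)": h_xy - h_y,  # chain rule
        "H(Y|X)": h_xy - h_x,  # chain rule
        "I(X;Y)": h_x + h_y - h_xy,
    }

# Hypothetical joint pmf, assumed here purely for illustration.
joint = {(0, 0): 1 / 3, (0, 1): 1 / 3, (1, 0): 0.0, (1, 1): 1 / 3}
q = joint_quantities(joint)
for name, value in q.items():
    print(f"{name} = {value:.4f} bits")
```

Comparing `q["H(Y)"] - q["H(Y|X)"]` with `q["I(X;Y)"]` shows numerically why parts (d) and (e) are asked side by side.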

(a) Are quantities like $E(X)$ and $H(X)$ functions of the random variable $X$, functions of $X$’s probability mass function, or functions of something else? Explain. Are the notations used to denote these quantities ideal? If not, suggest alternative notations.

(b) Define what it means for $H(X)$ to be label invariant. State your definition in terms of the original notation $H(X)$ and also in terms of your alternative notation from part (a), if you gave one.

(c) Suppose $X$ takes on three values $0,1,2$ with probabilities $p_0, p_1, p_2$ respectively. Derive all the symmetries in the entropy as a function of these three probabilities using the label invariance of entropy.

(d) Generalize part (c) to the case when $X$ takes on $n$ values.
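One way to build intuition for parts (c) and (d) is to check numerically that relabeling the outcomes leaves the entropy unchanged. The sketch below assumes a hypothetical probability vector `(0.5, 0.3, 0.2)` and treats entropy as a function of the probability vector; it evaluates that function on every reordering of the vector. It illustrates the symmetry on one example and does not replace the derivation.

```python
import math
from itertools import permutations

def entropy_bits(probs):
    """Shannon entropy in bits of a probability vector."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

probs = (0.5, 0.3, 0.2)  # hypothetical (p_0, p_1, p_2)

# Entropy for each of the 3! = 6 ways of assigning the probabilities to labels.
values = [entropy_bits(perm) for perm in permutations(probs)]
spread = max(values) - min(values)  # zero up to floating-point rounding

print(f"{len(values)} relabelings, entropy ~ {values[0]:.6f} bits, spread = {spread:.2e}")
```

For a vector of length $n$, `permutations` yields $n!$ reorderings, so this brute-force check is only practical for small $n$.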
