MY-ASSIGNMENTEXPERT™ can provide assignment writing, exam assistance, and tutoring services for Sydney STAT3023 Statistical Inference!

This is a successful case of assignment help for the University of Sydney's Statistical Inference course.

STAT3023 Course Overview

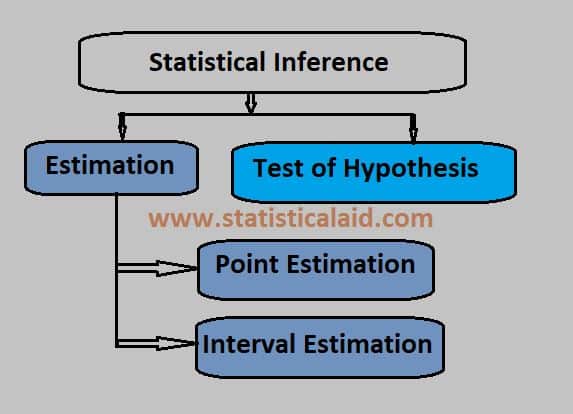

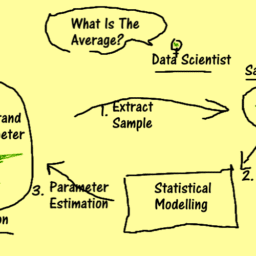

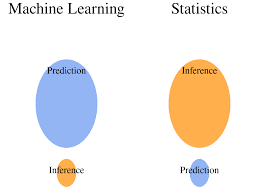

In today’s data-rich world, more and more people from diverse fields need to perform statistical analyses, and more and more tools for doing so are becoming available; it is relatively easy to point and click and obtain some statistical analysis of your data. But how do you know whether a particular analysis is appropriate? Is there another procedure or workflow that would be more suitable? Is there such a thing as the best possible approach in a given situation? All of these questions (and more) are addressed in this unit. You will study the foundational core of modern statistical inference, including classical and cutting-edge theory and methods of mathematical statistics, with a particular focus on various notions of optimality. The first part of the unit covers the aspects of distribution theory needed for the second part, which deals with optimal procedures in estimation and testing. The framework of statistical decision theory is used to unify many of the concepts. You will apply the methods learnt to real-world problems in laboratory sessions. By completing this unit you will develop the skills to confidently choose the best statistical analysis to use in many situations.

Learning outcomes

At the completion of this unit, you should be able to:

- LO1. deduce the (limiting) distribution of sums of random variables using moment-generating functions

- LO2. derive the distribution of a transformation of two (or more) continuous random variables

- LO3. derive marginal and conditional distributions associated with certain multivariate distributions

- LO4. classify many common distributions as belonging to an exponential family

- LO5. derive and implement maximum likelihood methods in various estimation and testing problems

- LO6. formulate and solve various inferential problems in a decision theory framework

- LO7. derive and apply optimal procedures in various problems, including Bayes rules, minimax rules, minimum variance unbiased estimators and most powerful tests.

STAT3023 Statistical Inference HELP (EXAM HELP, ONLINE TUTOR)

Consider a linear regression model

$$
y_i=\beta_0+\sum_{j=1}^K \beta_j x_{i j}+\epsilon_i, \quad i=1, \ldots, n, \tag{*}
$$

where the $\epsilon_i$'s are independent and identically distributed (i.i.d.) with mean 0 and variance $\sigma^2$. Let $\boldsymbol{\beta}=\left(\beta_0, \beta_1, \ldots, \beta_K\right)^{\prime}$. We are interested in estimating $\boldsymbol{\beta}^{\prime} \boldsymbol{\beta} / \sigma^2$ (which can be regarded as a signal-to-noise ratio), and a natural way to estimate it is

$$
\frac{\hat{\boldsymbol{\beta}}^{\prime} \hat{\boldsymbol{\beta}}}{\hat{\sigma}^2},
$$

where $\hat{\boldsymbol{\beta}}=\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1} \mathbf{X}^{\prime} \mathbf{y}$,

$$
\mathbf{X}=\left(\begin{array}{cccc}
1 & x_{11} & \cdots & x_{1 K} \\
\vdots & \vdots & & \vdots \\
1 & x_{n 1} & \cdots & x_{n K}
\end{array}\right)_{n \times(K+1)},
$$

$\mathbf{y}=\left(y_1, \ldots, y_n\right)^{\prime}$, $\hat{\sigma}^2=(n-K-1)^{-1} \mathbf{y}^{\prime}\left(\mathbf{I}_n-\mathbf{M}\right) \mathbf{y}$, $\mathbf{I}_n$ is the $n \times n$ identity matrix, and $\mathbf{M}=\mathbf{X}\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1} \mathbf{X}^{\prime}$ is the orthogonal projection matrix onto $C(\mathbf{X})$, the column space of $\mathbf{X}$.

(1) Assuming $\epsilon_1$ follows a normal distribution, compute $E\left(\hat{\boldsymbol{\beta}}^{\prime} \hat{\boldsymbol{\beta}} / \hat{\sigma}^2\right)$. Is $\hat{\boldsymbol{\beta}}^{\prime} \hat{\boldsymbol{\beta}} / \hat{\sigma}^2$ an unbiased estimator of $\boldsymbol{\beta}^{\prime} \boldsymbol{\beta} / \sigma^2$? If not, find an unbiased estimator of $\boldsymbol{\beta}^{\prime} \boldsymbol{\beta} / \sigma^2$.

(2) Does $\hat{\boldsymbol{\beta}}^{\prime} \hat{\boldsymbol{\beta}} / \hat{\sigma}^2$ converge in probability to $\boldsymbol{\beta}^{\prime} \boldsymbol{\beta} / \sigma^2$ when no normality assumption is made on $\epsilon_1$? Why?
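For part (1), the calculation can be sketched as follows, assuming $\nu=n-K-1>2$ (the symbol $\nu$ is introduced here for brevity and does not appear in the original problem). Under normality, $\hat{\boldsymbol{\beta}} \sim \mathrm{N}\left(\boldsymbol{\beta}, \sigma^2\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1}\right)$ is independent of $\hat{\sigma}^2$, and $\nu \hat{\sigma}^2 / \sigma^2 \sim \chi^2(\nu)$, so $E\left(1 / \hat{\sigma}^2\right)=\nu /\left\{(\nu-2) \sigma^2\right\}$ and $E\left(\hat{\boldsymbol{\beta}}^{\prime} \hat{\boldsymbol{\beta}}\right)=\boldsymbol{\beta}^{\prime} \boldsymbol{\beta}+\sigma^2 \operatorname{tr}\left\{\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1}\right\}$. Hence

$$
E\left(\frac{\hat{\boldsymbol{\beta}}^{\prime} \hat{\boldsymbol{\beta}}}{\hat{\sigma}^2}\right)=E\left(\hat{\boldsymbol{\beta}}^{\prime} \hat{\boldsymbol{\beta}}\right) E\left(\frac{1}{\hat{\sigma}^2}\right)=\frac{\nu}{\nu-2}\left[\frac{\boldsymbol{\beta}^{\prime} \boldsymbol{\beta}}{\sigma^2}+\operatorname{tr}\left\{\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1}\right\}\right] .
$$

Under these assumptions the natural estimator is biased, and one unbiased alternative is

$$
\frac{\nu-2}{\nu} \cdot \frac{\hat{\boldsymbol{\beta}}^{\prime} \hat{\boldsymbol{\beta}}}{\hat{\sigma}^2}-\operatorname{tr}\left\{\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1}\right\} .
$$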

Consider model $(*)$ with $K=1$ and

$$
x_{i 1}= \begin{cases}1, & \text{if } i=1, \\ i^{-1 / 2}, & \text{if } i=2, \ldots, n .\end{cases}
$$

Does $\hat{\boldsymbol{\beta}}$ converge in probability to $\boldsymbol{\beta}$? Why?
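One way to get a feel for this question numerically: $\hat{\boldsymbol{\beta}}$ is unbiased with covariance $\sigma^2\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1}$, so by Chebyshev's inequality it is sufficient for consistency that the diagonal of $\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1}$ tends to zero. The sketch below, in plain Python, evaluates those two diagonal entries for the design above using the closed-form $2 \times 2$ inverse. The function name `inverse_gram_diagonal` is a hypothetical helper, not part of the original problem, and a numerical check like this suggests the answer but does not replace the proof.

```python
import math

def inverse_gram_diagonal(n):
    """Return the diagonal of (X'X)^{-1} for the K=1 design x_i = i^(-1/2).

    Note x_1 = 1 = 1^(-1/2), so a single formula covers every i.
    """
    sum_x = math.fsum(i ** -0.5 for i in range(1, n + 1))   # sum of x_i
    sum_x2 = math.fsum(1.0 / i for i in range(1, n + 1))    # sum of x_i^2
    # X'X = [[n, sum_x], [sum_x, sum_x2]]; invert it in closed form.
    det = n * sum_x2 - sum_x ** 2
    return sum_x2 / det, n / det   # (Var(b0)/sigma^2, Var(b1)/sigma^2)

if __name__ == "__main__":
    for n in (10**2, 10**4, 10**6):
        v0, v1 = inverse_gram_diagonal(n)
        print(f"n={n:>8}  [(X'X)^-1]_00={v0:.5f}  [(X'X)^-1]_11={v1:.5f}")
```

Both entries shrink as $n$ grows, but the slope entry does so only at a logarithmic rate, because $\sum_i x_{i1}^2=\sum_i 1/i$ grows like $\log n$.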

Consider model $(*)$ without a normality assumption on the noise.

(1) Find an asymptotic level $5 \%$ test of $H_0: \beta_0 \beta_1+\beta_1^2=d$ versus $H_a: \text{not } H_0$.

(2) Let $\mathbf{x}^*$ be a known $(K+1)$-dimensional vector. Assuming $\epsilon_1$ follows a normal distribution, find a $95 \%$ confidence interval for $\mathbf{x}^{* \prime} \boldsymbol{\beta}$.

(3) Construct a $95 \%$ confidence interval for $\left(\mathbf{x}^{* \prime} \boldsymbol{\beta}\right)^2$ when the normality assumption on the noise holds. If you cannot do that, find an asymptotic $95 \%$ confidence interval for $\left(\mathbf{x}^{* \prime} \boldsymbol{\beta}\right)^2$.
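For part (2), the standard $t$-interval applies under normality. As a sketch, write $t_{0.975}(n-K-1)$ for the $0.975$ quantile of the $t$ distribution with $n-K-1$ degrees of freedom (notation introduced here, not in the original problem) and assume $\mathbf{x}^* \neq \mathbf{0}$. Because $\left(\mathbf{x}^{* \prime} \hat{\boldsymbol{\beta}}-\mathbf{x}^{* \prime} \boldsymbol{\beta}\right) /\left\{\hat{\sigma} \sqrt{\mathbf{x}^{* \prime}\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1} \mathbf{x}^*}\right\} \sim t(n-K-1)$, a $95 \%$ confidence interval $[L, U]$ for $\mathbf{x}^{* \prime} \boldsymbol{\beta}$ is

$$
\mathbf{x}^{* \prime} \hat{\boldsymbol{\beta}} \pm t_{0.975}(n-K-1)\, \hat{\sigma} \sqrt{\mathbf{x}^{* \prime}\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1} \mathbf{x}^*} .
$$

For part (3), one possible construction (conservative, and not necessarily the intended answer) maps $[L, U]$ through the square:

$$
\left[\, \max \{0, L,-U\}^2, \ \max \left\{L^2, U^2\right\} \,\right] .
$$

This interval contains $\left(\mathbf{x}^{* \prime} \boldsymbol{\beta}\right)^2$ whenever $[L, U]$ contains $\mathbf{x}^{* \prime} \boldsymbol{\beta}$, so its coverage is at least $95 \%$.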

Assume $\mathbf{X}_n \stackrel{d}{\rightarrow} \mathbf{Z}$ and $\mathbf{A}_n \rightarrow \mathbf{A}$, where the $\mathbf{A}_n$'s and $\mathbf{A}$ are non-random positive definite matrices. Show that

$$
\mathbf{X}_n^{\prime} \mathbf{A}_n \mathbf{X}_n \stackrel{d}{\rightarrow} \mathbf{Z}^{\prime} \mathbf{A} \mathbf{Z} .
$$

If $X_n \sim \chi^2(\sqrt{n})$ and $y_n \sim \chi^2(n)$, prove that

$$
n^{1 / 4}\left(\frac{X_n / \sqrt{n}}{y_n / n}-1\right) \stackrel{d}{\rightarrow} \mathrm{N}(0,2) .
$$
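The limit can be checked by simulation before attempting the proof. The sketch below assumes, for the purposes of simulation only, that $X_n$ and $y_n$ are independent (the problem statement does not say so), and it samples $\chi^2(k)$ as a Gamma distribution with shape $k/2$ and scale $2$. The function name `simulate_scaled_ratio` and the choices of $n$, replication count, and seed are illustrative.

```python
import random
import statistics

def simulate_scaled_ratio(n, reps, seed=0):
    """Draw `reps` values of n^(1/4) * ((X_n/sqrt(n)) / (y_n/n) - 1).

    X_n ~ chi^2(sqrt(n)) and y_n ~ chi^2(n) are drawn independently here;
    chi^2(k) is sampled as Gamma(shape=k/2, scale=2).
    """
    rng = random.Random(seed)
    root_n = n ** 0.5
    draws = []
    for _ in range(reps):
        x = rng.gammavariate(root_n / 2, 2.0)   # chi^2 with sqrt(n) degrees of freedom
        y = rng.gammavariate(n / 2, 2.0)        # chi^2 with n degrees of freedom
        draws.append(n ** 0.25 * ((x / root_n) / (y / n) - 1.0))
    return draws

if __name__ == "__main__":
    draws = simulate_scaled_ratio(n=10**8, reps=20000)
    print("sample mean    :", statistics.fmean(draws))
    print("sample variance:", statistics.variance(draws))
```

If the claimed limit holds, the sample mean should be close to $0$ and the sample variance close to $2$ for large $n$.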
