Read each problem carefully and show all your work. Discussion with your peers is allowed (and encouraged); however, each person must hand in their own written solutions. At the top of the first page, write the names of everyone you worked with.

$$
\text{Minimize } f(\vec{x}) := \left\|\vec{a}^{T} \vec{x}-b\right\|^{2}
$$

where $\vec{a}=(1,2)^{T}$ and $b=3$.

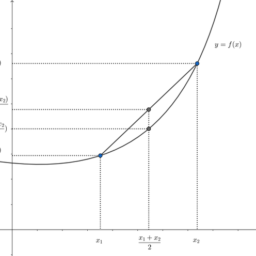

The function $f(\vec{x})$ is convex, but its minimizers are not unique: they form a degenerate (affine) subspace. This problem investigates several ways of selecting a unique minimizer of $f(\vec{x})$. Problems where $f(\vec{x})$ has multiple minimizers, or a large number of nearly optimal points, often occur in practice (for instance, when you introduce more fitting parameters than you have data points).
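To see the degeneracy concretely, the following Python sketch evaluates $f$ at a few points. The helper name `f` and the sample points are illustrative choices, not part of the assignment.

```python
# Illustrative sketch: evaluate f(x) = (a^T x - b)^2 for a = (1, 2), b = 3.
A_VEC = (1.0, 2.0)
B = 3.0

def f(x):
    """Return (a^T x - b)^2 for a 2-vector x."""
    residual = A_VEC[0] * x[0] + A_VEC[1] * x[1] - B
    return residual ** 2

# Sample points chosen by hand for illustration only.
samples = [(1.0, 1.0), (3.0, 0.0), (-1.0, 2.0), (0.0, 0.0)]
values = [f(x) for x in samples]
for x, v in zip(samples, values):
    print(x, v)
```

The first three sample points all give $f=0$, so the minimizer cannot be unique.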

1. First, characterize all minimizers of $f(\vec{x})$. Call this set $S$.

2. Find the minimizer $\vec{x}_{PI}$ given by the pseudoinverse. That is, think of the vector $\vec{a}^{T}$ as a matrix $\boldsymbol{A}=\left[\vec{a}^{T}\right]$ with one row. Then $\vec{x}_{PI}=\boldsymbol{A}^{+} b$, where $\boldsymbol{A}^{+}$ is the pseudoinverse of $\boldsymbol{A}$ and $b$ is treated as a $1 \times 1$ vector.
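As a numerical check on a hand computation, here is a minimal Python sketch. It assumes the standard closed form for a matrix with full row rank, $\boldsymbol{A}^{+}=\boldsymbol{A}^{T}\left(\boldsymbol{A} \boldsymbol{A}^{T}\right)^{-1}$, which for a single nonzero row reduces to $\vec{a} /\|\vec{a}\|^{2}$. The function name `pinv_row_solution` is hypothetical.

```python
def pinv_row_solution(a, b):
    """Return A^+ b for the one-row matrix A = [a^T], assuming a != 0.

    Uses the full-row-rank closed form A^+ = A^T (A A^T)^{-1} = a / ||a||^2.
    """
    norm_sq = sum(ai * ai for ai in a)
    if norm_sq == 0.0:
        raise ValueError("a must be nonzero for this closed form")
    return tuple(ai * b / norm_sq for ai in a)

x_pi = pinv_row_solution((1.0, 2.0), 3.0)
print(x_pi)
```

In Matlab, the built-in `pinv` can be used to cross-check the same quantity.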

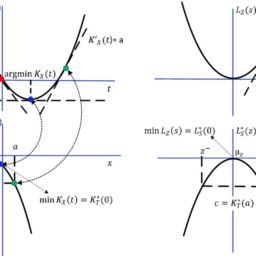

3. Now consider a different regularization. Find $\vec{x}^{*}$ that solves the following minimization:

$$
\text{Minimize } \|\vec{x}\|^{2} \quad \text{subject to } \vec{x} \in S
$$

Does this $\vec{x}^{*}$ agree with the pseudoinverse?
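One way to check an answer numerically is to parameterize the constraint set and minimize along it. The sketch below assumes that $S$ is the line $\vec{a}^{T} \vec{x}=b$, written as $\vec{x}_{0}+t \vec{d}$ with the hand-picked point $\vec{x}_{0}=(3,0)$ and direction $\vec{d}=(-2,1)$. These choices and the function name `min_norm_on_line` are assumptions for illustration.

```python
def min_norm_on_line(x0, d):
    """Minimize ||x0 + t*d||^2 over real t; return (t_star, x_star).

    Setting the derivative in t to zero gives t_star = -(x0 . d) / (d . d).
    """
    dd = sum(di * di for di in d)
    t_star = -sum(xi * di for xi, di in zip(x0, d)) / dd
    x_star = tuple(xi + t_star * di for xi, di in zip(x0, d))
    return t_star, x_star

# Assumed parameterization of S: x0 satisfies a^T x0 = 3 and a^T d = 0.
t_star, x_star = min_norm_on_line((3.0, 0.0), (-2.0, 1.0))
print(t_star, x_star)
```

Comparing `x_star` with the pseudoinverse solution from part 2 answers the question numerically; the written solution should still justify it analytically.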

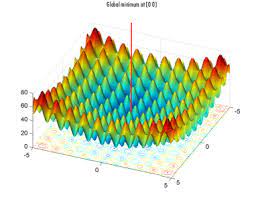

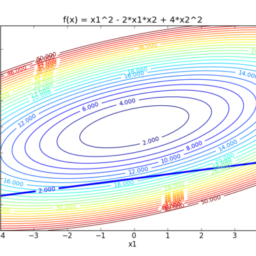

4. A popular approach to regularize a problem is to add a penalty term to $f(\vec{x})$. Consider

$$
\left(P_{\lambda}\right) \quad \text{Minimize } f(\vec{x})+\lambda\|\vec{x}\|_{4}^{4}
$$

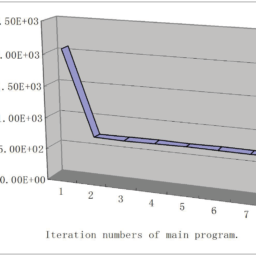

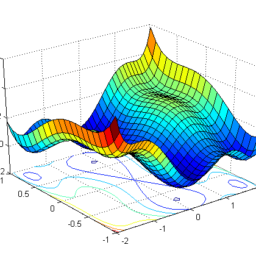

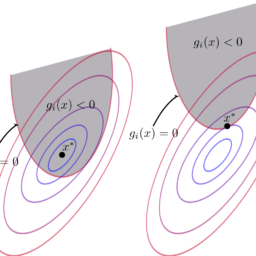

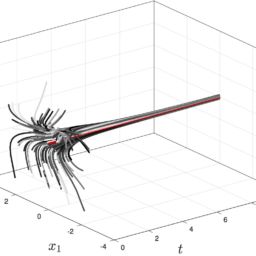

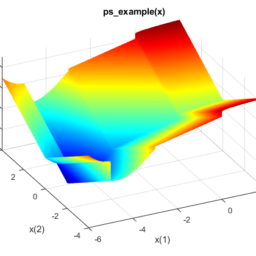

Here $0 \leq \lambda<\infty$ is the penalty parameter, while $\|\vec{x}\|_{4}^{4}=x_{1}^{4}+x_{2}^{4}$ is the 4th power of the $\ell_{4}$-norm (which can easily be verified to be strictly convex on any convex set not containing the origin $(0,0)$). The total objective is then the sum of two convex functions (hence convex). Denote the solution to $\left(P_{\lambda}\right)$ by $\vec{x}_{\lambda}$. Write Matlab code that solves for $\vec{x}_{\lambda}$. Provide a plot that contains the following key aspects:

i. The locus of optimum points $\vec{x}_{\lambda}$, for different $\lambda$.

ii. The set of points $S$ (the minimizers of $f(\vec{x})$).

iii. The optimal point $\vec{x}_{PI}$.

iv. The boundary of the unit ball $x_{1}^{4}+x_{2}^{4}=1$ in the $\ell_{4}$ norm (which gives some intuition on which directions are promoted and penalized by the regularization term).
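The assignment asks for Matlab; the following is only a Python sketch of the computation, without plotting, that can be used to cross-check a Matlab solution. It rests on an assumption you should verify yourself: for $\lambda>0$, setting the gradient $2\left(\vec{a}^{T} \vec{x}-b\right) \vec{a}+4 \lambda\left(x_{1}^{3}, x_{2}^{3}\right)$ to zero forces $\vec{x}=u\left(1,2^{1 / 3}\right)$, where $u$ is the unique root of $2 \lambda u^{3}+c u-b=0$ in $(0, b / c]$ with $c=1+2^{4 / 3}$. All function and variable names are hypothetical.

```python
import math

A_VEC = (1.0, 2.0)
B = 3.0
CBRT2 = 2.0 ** (1.0 / 3.0)
C = A_VEC[0] + A_VEC[1] * CBRT2  # a^T (1, 2^(1/3)) = 1 + 2^(4/3)

def solve_p_lambda(lam, iterations=200):
    """Return x_lambda = u * (1, 2^(1/3)) for lam >= 0.

    Assumed reduction: u solves g(u) = 2*lam*u^3 + C*u - B = 0.
    g is increasing with g(0) < 0 <= g(B/C), so bisection applies.
    For lam = 0 this returns the limit point as lam -> 0+; the
    minimizer of (P_0) itself is not unique.
    """
    lo, hi = 0.0, B / C
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if 2.0 * lam * mid ** 3 + C * mid - B > 0.0:
            hi = mid
        else:
            lo = mid
    u = 0.5 * (lo + hi)
    return (u, CBRT2 * u)

def l4_unit_ball_point(theta):
    """Return a point on x1^4 + x2^4 = 1, since x_i^4 = cos^2 or sin^2."""
    c, s = math.cos(theta), math.sin(theta)
    return (math.copysign(math.sqrt(abs(c)), c),
            math.copysign(math.sqrt(abs(s)), s))

# Locus of optima over a logarithmic grid of penalty parameters.
lambdas = [10.0 ** k for k in range(-3, 4)]
locus = [solve_p_lambda(lam) for lam in lambdas]
for lam, x in zip(lambdas, locus):
    print(lam, x)
```

The lists `lambdas` and `locus` give the curve for item i, and sweeping `theta` over $[0,2 \pi]$ in `l4_unit_ball_point` traces the boundary for item iv.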

Problem 2: Normal cones. This problem asks you to work out the normal cone for a couple of different convex sets.

1. A solid pyramid is written as the convex hull of its corners:

$$
V=\operatorname{conv}\{(0,0,0),(1,0,0),(0,1,0),(0,0,1)\} .
$$

Parameterize the normal cone $\mathcal{N}_{V}(\bar{x})$ where $\bar{x}=(0,0,1)$.
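A candidate parameterization can be tested numerically against the definition $\mathcal{N}_{V}(\bar{x})=\left\{g: g^{T}(v-\bar{x}) \leq 0 \text { for all } v \in V\right\}$. Because $g^{T} v$ is linear in $v$, it is enough to check the inequality at the corners of $V$. The Python sketch below does exactly that; the function name `in_normal_cone` and the tolerance are illustrative assumptions.

```python
VERTICES = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0),
]

def in_normal_cone(g, x_bar, vertices=VERTICES, tol=1e-12):
    """Return True if g^T (v - x_bar) <= tol for every vertex v.

    Checking vertices suffices because a linear function attains its
    maximum over a convex hull at one of the generating points.
    """
    return all(
        sum(gi * (vi - xi) for gi, vi, xi in zip(g, v, x_bar)) <= tol
        for v in vertices
    )

x_bar = (0.0, 0.0, 1.0)
print(in_normal_cone((0.0, 0.0, 1.0), x_bar))
print(in_normal_cone((1.0, 0.0, 0.0), x_bar))
```

Sampling many directions $g$ and comparing the result with your parameterization is a quick sanity check, not a proof.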
