3.2 Basis Vectors

First we describe an $n$-dimensional flat space. Any point in this space can be specified by $n$ real numbers called its coordinates, denoted by $x^{i}$, $i=1,2, \ldots, n$, where the superscript labels the coordinate and is not an exponent. Strictly speaking, a curvilinear coordinate system that is not Cartesian need not cover the entire manifold, whereas a Cartesian system does. For now, we call a space flat if it can be covered fully by coordinates $x^{i}$ such that the distance between adjacent points labelled by $x^{i}$ and $x^{i}+d x^{i}$ is given by:

$$
d s^{2}=\eta_{i k} d x^{i} d x^{k}
$$

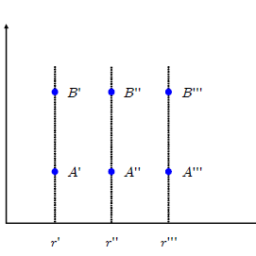

where $\eta_{i k}$ is the metric tensor, which is symmetric, namely $\eta_{i k}=\eta_{k i}$, and whose components are constants. As we will see, this is fully equivalent to the description of a flat space given in Chapter 4, in which the Riemann tensor vanishes identically throughout the space (see Exercise 4.3 in Chapter 4). By a principal axis transformation and rescaling, we can make $\eta_{i k}$ diagonal, with each diagonal entry equal to $\pm 1$. The spacetime of special relativity (SR) is an example of a 4-dimensional flat space. If the diagonal entries are all $+1$, then $d s^{2}$ is a sum of squares, which is just a repeated application of the Pythagoras theorem. Such a space is called Euclidean and the metric is called positive definite. The SR spacetime has an indefinite metric because, in the convention we have adopted, the diagonal entries of $\eta_{i k}$ carry one positive sign and three negative signs.

We will in general consider any curvilinear coordinate system, which need not span the full space. This does not hamper us in any way because we will only be doing local analysis. As remarked before, in flat space it is possible to specify a point uniquely by its position vector $\mathbf{x}$, using $n$ coordinates $x^{i}, i=1, \ldots, n$. One can construct "imaginary grid lines" to visualise the coordinates. For a given $k$, along the $x^{k}$ grid line only the coordinate $x^{k}$ varies, while the others are kept constant. One can also imagine $(n-1)$-dimensional hypersurfaces that slice the space, such that on each slice exactly one coordinate is held constant. Two distinct surfaces on which the same coordinate is held constant must not intersect, since a point of intersection would carry two values of that coordinate and the coordinate system would not be valid.
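As a minimal sketch of the line element $d s^{2}=\eta_{i k} d x^{i} d x^{k}$, the Python snippet below evaluates the double sum for a Euclidean metric and for the SR metric with one positive and three negative signs. The function name `interval_squared`, the sample displacements, and the choice of units with $c=1$ are illustrative assumptions, not part of the text.

```python
def interval_squared(eta, dx):
    """Return ds^2 = eta_ik dx^i dx^k, summing over both indices."""
    n = len(dx)
    return sum(eta[i][k] * dx[i] * dx[k] for i in range(n) for k in range(n))

# Euclidean 3-space: all diagonal entries +1 (positive definite).
euclid = [[1, 0, 0],
          [0, 1, 0],
          [0, 0, 1]]

# SR spacetime in the (+, -, -, -) convention; units with c = 1 are assumed.
minkowski = [[1, 0, 0, 0],
             [0, -1, 0, 0],
             [0, 0, -1, 0],
             [0, 0, 0, -1]]

ds2_euclid = interval_squared(euclid, [3, 4, 12])         # 9 + 16 + 144
ds2_timelike = interval_squared(minkowski, [5, 3, 0, 0])  # 25 - 9
ds2_null = interval_squared(minkowski, [1, 1, 0, 0])      # 1 - 1

print(ds2_euclid, ds2_timelike, ds2_null)  # prints: 169 16 0
```

With the positive definite metric, $d s^{2}$ vanishes only for a zero displacement. With the indefinite metric, a nonzero displacement can give $d s^{2}=0$, as the last case shows.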

To keep the discussion simple and familiar, we will consider a positive definite metric. The results are essentially the same and are easily generalised to an indefinite metric. To represent a vector field $\mathbf{V}(\mathbf{x})$ in this space in terms of its components, we need to define $n$ basis vectors at each point in space. Let $\mathbf{x}$ be the position vector depending on the $n$ curvilinear coordinates $x^{i}$, that is, $\mathbf{x}\left(x^{i}\right)$. The $x^{i}$ form a grid of coordinate curves covering the space. The basis vectors can be chosen in two natural ways:

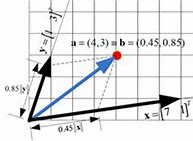

- Along the grid lines: if the $i^{\text{th}}$ coordinate is changed from $x^{i}$ to $x^{i}+\mathrm{d} x^{i}$, the direction in which the position vector $\mathbf{x}$ changes (which is along the grid line) can be taken as the direction of the $i^{\text{th}}$ basis vector $\mathbf{e}_{i}$ at $\mathbf{x}$. That is,

  $$
  \mathbf{e}_{i}:=\frac{\partial \mathbf{x}}{\partial x^{i}}
  $$

  Geometrically, therefore, each $\mathbf{e}_{i}$ is tangent to the coordinate curve $x^{i}$.

- Normal to the slicing surfaces: the gradient of $x^{i}$ at $\mathbf{x}$ is normal to the surface of constant $x^{i}$ through $\mathbf{x}$. Here the basis vectors would be

  $$
  \mathbf{e}^{i}:=\nabla x^{i}
  $$

A vector $\mathbf{V}$ at a given point $\mathbf{x}_{\mathbf{0}}$ can be expanded in either set of basis vectors:

$$
\mathbf{V}=\sum_{i=1}^{n} V^{i}\, \mathbf{e}_{i}=\sum_{i=1}^{n} V_{i}\, \mathbf{e}^{i}
$$

Since we are at a given point $\mathbf{x}_{\mathbf{0}}$, we leave out the position dependence, but the above equation holds at each point of the region in which the coordinates are defined. Note also the superscript and subscript on the components of the vector $\mathbf{V}$; we will discuss this point in the next section.

In an orthogonal curvilinear coordinate system, the slicing surfaces meet at right angles and one can show that the above two sets of basis vectors are co-aligned $\left(\mathbf{e}_{i} \propto \mathbf{e}^{i}\right)$. In general, however, this is not true: in a general curvilinear coordinate system the basis vectors are not orthogonal. Nevertheless, the basis vectors $\mathbf{e}^{i}$ and $\mathbf{e}_{i}$ defined in the above manner form a reciprocal system of vectors, such that

$$
\mathbf{e}^{i} \cdot \mathbf{e}_{j}=\delta_{j}^{i}
$$

where

$$
\delta_{j}^{i}:=\begin{cases}1 & \text{if } i=j \\ 0 & \text{otherwise}\end{cases}
$$

is the Kronecker delta. The concept of a non-orthogonal curvilinear coordinate system, and how its basis vectors form a reciprocal system of vectors, is made clear with the specific example of a two-dimensional oblique coordinate system on a plane in Sect. 3.7. Note, however, that this example uses a coordinate system that covers the whole manifold, which is simpler to understand. In general, a single coordinate system may not suffice to cover the whole space.
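The reciprocal relation can be checked numerically on a simple non-orthogonal system. The sketch below is only an illustration and is not the worked example of Sect. 3.7: it assumes oblique coordinates $(u, v)$ on a plane whose axes meet at an angle `ALPHA`, builds $\mathbf{e}_{i}$ as the derivative of the position vector and $\mathbf{e}^{i}$ as the gradient of each coordinate (both by central differences), and forms the dot products $\mathbf{e}^{i} \cdot \mathbf{e}_{j}$. All function names, the angle, and the sample point are hypothetical choices.

```python
import math

ALPHA = math.radians(60)  # angle between the oblique axes (illustrative choice)

def position(u, v):
    """Cartesian position (x, y) of the point with oblique coordinates (u, v)."""
    return (u + v * math.cos(ALPHA), v * math.sin(ALPHA))

def oblique_coords(x, y):
    """Inverse map: oblique coordinates (u, v) of the Cartesian point (x, y)."""
    v = y / math.sin(ALPHA)
    return (x - v * math.cos(ALPHA), v)

def tangent_basis(u, v, h=1e-6):
    """e_i = d(position)/d(x^i), estimated by central differences."""
    plus = [position(u + h, v), position(u, v + h)]
    minus = [position(u - h, v), position(u, v - h)]
    return [tuple((p[k] - m[k]) / (2 * h) for k in range(2))
            for p, m in zip(plus, minus)]

def gradient_basis(x, y, h=1e-6):
    """e^i = grad(x^i), estimated by central differences in x and y."""
    d_dx = [(a - b) / (2 * h)
            for a, b in zip(oblique_coords(x + h, y), oblique_coords(x - h, y))]
    d_dy = [(a - b) / (2 * h)
            for a, b in zip(oblique_coords(x, y + h), oblique_coords(x, y - h))]
    return [(d_dx[i], d_dy[i]) for i in range(2)]

def dot(a, b):
    """Euclidean dot product of two Cartesian component tuples."""
    return sum(p * q for p, q in zip(a, b))

u0, v0 = 1.0, 2.0                            # sample point (arbitrary)
e_lower = tangent_basis(u0, v0)              # e_1, e_2
e_upper = gradient_basis(*position(u0, v0))  # e^1, e^2

# gram[i][j] = e^i . e_j, which should reproduce the Kronecker delta
gram = [[dot(e_upper[i], e_lower[j]) for j in range(2)] for i in range(2)]
for row in gram:
    print([round(entry, 6) for entry in row])

# The tangent vectors themselves are not orthogonal: e_1 . e_2 = cos(ALPHA)
print(round(dot(e_lower[0], e_lower[1]), 6))
```

Finite differences are used so that the code mirrors the two definitions directly. For this linear coordinate map the derivatives could equally be written in closed form.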


The above equations can be used to extract the components of a vector along a given basis vector. Note that we can talk of unit vectors and dot products because we are in Euclidean space, on which the usual metric is defined. The $i^{\text{th}}$ component of $\mathbf{V}$ along the basis vector $\mathbf{e}_{i}$ or $\mathbf{e}^{i}$ can thus be obtained by projecting the vector onto the corresponding reciprocal vector:

$$
V^{i}=\mathbf{V} \cdot \mathbf{e}^{i}, \qquad V_{i}=\mathbf{V} \cdot \mathbf{e}_{i}
$$

Again, this is very different from the special case of orthogonal coordinates.

Derivation 1 Show that the two sets of basis vectors $\mathbf{e}_{i}$ and $\mathbf{e}^{i}$ form a reciprocal system of vectors (Spiegel (1959)).

Using the chain rule of partial differentiation one can write

$$
\mathrm{d} \mathbf{x}=\sum_{i=1}^{n} \frac{\partial \mathbf{x}}{\partial x^{i}} \mathrm{~d} x^{i}=\sum_{i=1}^{n} \mathbf{e}_{i} \mathrm{~d} x^{i}
$$

Taking the dot product of each side with $\mathbf{e}^{j}:=\nabla x^{j}$, one gets

$$
\nabla x^{j} \cdot \mathrm{d} \mathbf{x}:=\mathrm{d} x^{j}=\sum_{i=1}^{n}\left(\mathbf{e}_{i} \cdot \mathbf{e}^{j}\right) \mathrm{d} x^{i}
$$

Since the above identity has to hold for arbitrary $\mathrm{d} \mathbf{x}$, comparing the coefficients of $\mathrm{d} x^{i}$ on both sides we obtain $\mathbf{e}_{i} \cdot \mathbf{e}^{j}=\delta_{i}^{j}$.
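As a rough numerical illustration of how the reciprocity relation is used to extract components, the sketch below works in plane polar coordinates $(r, \theta)$, an orthogonal curvilinear system chosen here purely for illustration. It projects a sample vector onto $\mathbf{e}^{i}$ and $\mathbf{e}_{i}$ and then rebuilds the vector from each set of components. The helper names `polar_bases` and `dot`, the sample point, and the sample vector are assumptions, and the closed-form basis vectors are the standard ones for $x=r \cos \theta$, $y=r \sin \theta$.

```python
import math

def polar_bases(r, theta):
    """Tangent basis e_i and gradient basis e^i for plane polar coordinates."""
    c, s = math.cos(theta), math.sin(theta)
    e_lower = [(c, s), (-r * s, r * c)]  # e_r, e_theta = d(x, y)/d(r), d(x, y)/d(theta)
    e_upper = [(c, s), (-s / r, c / r)]  # e^r, e^theta = grad r, grad theta
    return e_lower, e_upper

def dot(a, b):
    """Euclidean dot product of two Cartesian component tuples."""
    return a[0] * b[0] + a[1] * b[1]

r, theta = 2.0, math.pi / 6      # sample point (arbitrary)
e_lower, e_upper = polar_bases(r, theta)
V = (1.0, 3.0)                   # Cartesian components of a sample vector

# Components obtained by projecting onto the reciprocal vectors
V_upper = [dot(V, e_upper[i]) for i in range(2)]  # V^i = V . e^i
V_lower = [dot(V, e_lower[i]) for i in range(2)]  # V_i = V . e_i

# Rebuild V from each expansion: sum_i V^i e_i and sum_i V_i e^i
from_upper = tuple(sum(V_upper[i] * e_lower[i][k] for i in range(2)) for k in range(2))
from_lower = tuple(sum(V_lower[i] * e_upper[i][k] for i in range(2)) for k in range(2))

print(from_upper, from_lower)    # both should agree with V up to rounding
print(V_upper[1], V_lower[1])    # theta components differ by a factor r**2
```

Because polar coordinates are orthogonal, $\mathbf{e}_{\theta}$ and $\mathbf{e}^{\theta}$ point in the same direction, yet they differ in length by a factor $r^{2}$. The two kinds of component therefore differ even in this co-aligned case.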

物理代考Gravity and Curvature of Space-Time 代写 请认准UprivateTA™. UprivateTA™为您的留学生涯保驾护航。

电磁学代考

物理代考服务:

物理Physics考试代考、留学生物理online exam代考、电磁学代考、热力学代考、相对论代考、电动力学代考、电磁学代考、分析力学代考、澳洲物理代考、北美物理考试代考、美国留学生物理final exam代考、加拿大物理midterm代考、澳洲物理online exam代考、英国物理online quiz代考等。

光学代考

光学(Optics),是物理学的分支,主要是研究光的现象、性质与应用,包括光与物质之间的相互作用、光学仪器的制作。光学通常研究红外线、紫外线及可见光的物理行为。因为光是电磁波,其它形式的电磁辐射,例如X射线、微波、电磁辐射及无线电波等等也具有类似光的特性。

大多数常见的光学现象都可以用经典电动力学理论来说明。但是,通常这全套理论很难实际应用,必需先假定简单模型。几何光学的模型最为容易使用。

相对论代考

上至高压线,下至发电机,只要用到电的地方就有相对论效应存在!相对论是关于时空和引力的理论,主要由爱因斯坦创立,相对论的提出给物理学带来了革命性的变化,被誉为现代物理性最伟大的基础理论。

流体力学代考

流体力学是力学的一个分支。 主要研究在各种力的作用下流体本身的状态,以及流体和固体壁面、流体和流体之间、流体与其他运动形态之间的相互作用的力学分支。

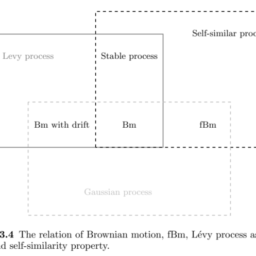

随机过程代写

随机过程,是依赖于参数的一组随机变量的全体,参数通常是时间。 随机变量是随机现象的数量表现,其取值随着偶然因素的影响而改变。 例如,某商店在从时间t0到时间tK这段时间内接待顾客的人数,就是依赖于时间t的一组随机变量,即随机过程