Math assignment help | Vector optimization, convex analysis exam help


4.7 Vector optimization

4.7.1 General and convex vector optimization problems

In $\S 4.6$ we extended the standard form problem (4.1) to include vector-valued constraint functions. In this section we investigate the meaning of a vector-valued objective function. We denote a general vector optimization problem as

$$
\begin{array}{ll}
\text{minimize (with respect to } K\text{)} & f_{0}(x) \\
\text{subject to} & f_{i}(x) \leq 0, \quad i=1, \ldots, m \\
& h_{i}(x)=0, \quad i=1, \ldots, p .
\end{array}
\tag{4.56}
$$

Here $x \in \mathbf{R}^{n}$ is the optimization variable and $K \subseteq \mathbf{R}^{q}$ is a proper cone. The function $f_{0}: \mathbf{R}^{n} \rightarrow \mathbf{R}^{q}$ is the objective function, the $f_{i}: \mathbf{R}^{n} \rightarrow \mathbf{R}$ are the inequality constraint functions, and the $h_{i}: \mathbf{R}^{n} \rightarrow \mathbf{R}$ are the equality constraint functions. This problem differs from the standard optimization problem (4.1) in only two ways. Here the objective function takes values in $\mathbf{R}^{q}$, and the problem specification includes a proper cone $K$, which is used to compare objective values. In the context of vector optimization, the standard optimization problem (4.1) is sometimes called a scalar optimization problem.
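The following sketch shows the ingredients of problem (4.56) as plain Python callables. It is one possible representation, not a standard API. The instance ($n = q = 2$, $m = p = 1$) and the helper name `is_feasible` are hypothetical, chosen only for illustration. Equality is checked up to a small tolerance because the values are floating point.

```python
import numpy as np

def is_feasible(x, ineqs, eqs, tol=1e-9):
    """True if f_i(x) <= 0 for every f_i in ineqs and h_i(x) = 0 for every h_i in eqs (up to tol)."""
    return all(f(x) <= tol for f in ineqs) and all(abs(h(x)) <= tol for h in eqs)

# Hypothetical instance: n = 2 variables, q = 2 objectives, m = 1, p = 1.
f0 = lambda x: np.array([x[0] ** 2, (x[1] - 1.0) ** 2])  # vector objective R^2 -> R^2
ineqs = [lambda x: x[0] - 1.0]                            # f_1(x) = x_1 - 1 <= 0
eqs = [lambda x: x[0] + x[1] - 1.0]                       # h_1(x) = x_1 + x_2 - 1 = 0

x = np.array([0.5, 0.5])
print(is_feasible(x, ineqs, eqs), f0(x))  # feasibility flag and the objective value in R^q
```

The constraints stay scalar-valued exactly as in (4.1). Only `f0` returns a vector.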

We say the vector optimization problem (4.56) is a convex vector optimization problem if the objective function $f_{0}$ is $K$-convex, the inequality constraint functions $f_{1}, \ldots, f_{m}$ are convex, and the equality constraint functions $h_{1}, \ldots, h_{p}$ are affine.

(As in the scalar case, we usually express the equality constraints as $Ax=b$, where $A \in \mathbf{R}^{p \times n}$.)
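$K$-convexity means that $f_{0}(\theta x+(1-\theta) y) \preceq_{K} \theta f_{0}(x)+(1-\theta) f_{0}(y)$ for all $x$, $y$ and all $\theta \in [0,1]$. The sketch below assumes the specific cone $K = \mathbf{R}^{q}_{+}$, for which this reduces to componentwise convexity, and tests the inequality at random points. This is only a spot check, not a proof. A `False` result exhibits a counterexample, while `True` means only that none was found. The function names and sample objectives are hypothetical.

```python
import numpy as np

def in_orthant(v, tol=1e-12):
    """Membership test for K = R^q_+ (nonnegative orthant), allowing a small rounding tolerance."""
    return bool(np.all(v >= -tol))

def k_convex_spot_check(f0, n, trials=1000, seed=0):
    """Randomly test f0(t x + (1 - t) y) <=_K t f0(x) + (1 - t) f0(y) with K = R^q_+."""
    rng = np.random.default_rng(seed)
    for _ in range(trials):
        x, y = rng.normal(size=n), rng.normal(size=n)
        t = rng.uniform()
        # The inequality holds iff this gap vector lies in K.
        gap = t * f0(x) + (1 - t) * f0(y) - f0(t * x + (1 - t) * y)
        if not in_orthant(gap, tol=1e-9):
            return False  # counterexample found: f0 is not K-convex
    return True

convex_f0 = lambda x: np.array([x[0] ** 2, abs(x[1])])      # each component convex
nonconvex_f0 = lambda x: np.array([x[0] ** 2, -x[1] ** 2])  # second component concave

print(k_convex_spot_check(convex_f0, 2), k_convex_spot_check(nonconvex_f0, 2))
```

For other proper cones, only `in_orthant` would need replacing by a membership test for that cone.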

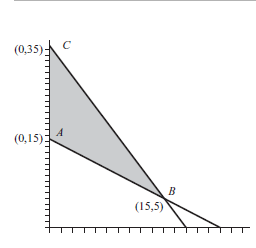

What meaning can we give to the vector optimization problem (4.56)? Suppose $x$ and $y$ are two feasible points (i.e., they satisfy the constraints). Their associated objective values, $f_{0}(x)$ and $f_{0}(y)$, are to be compared using the generalized inequality $\preceq_{K}$. We interpret $f_{0}(x) \preceq_{K} f_{0}(y)$ as meaning that $x$ is ‘better than or equal’ in value to $y$ (as judged by the objective $f_{0}$, with respect to $K$). The confusing aspect of vector optimization is that the two objective values $f_{0}(x)$ and $f_{0}(y)$ need not be comparable. We can have neither $f_{0}(x) \preceq_{K} f_{0}(y)$ nor $f_{0}(y) \preceq_{K} f_{0}(x)$, i.e., neither is better than the other. This cannot happen in a scalar objective optimization problem.
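To make incomparability concrete, the sketch below assumes $K = \mathbf{R}^{q}_{+}$. With this cone, $u \preceq_{K} v$ means $v - u \in K$, i.e., $u \le v$ componentwise. The helper names `preceq_K` and `compare` are hypothetical.

```python
import numpy as np

def preceq_K(u, v):
    """Generalized inequality u <=_K v for K = R^q_+: true iff v - u lies in K."""
    return bool(np.all(np.asarray(v, dtype=float) - np.asarray(u, dtype=float) >= 0))

def compare(fx, fy):
    """Classify two objective values f0(x), f0(y) under <=_K."""
    x_le_y, y_le_x = preceq_K(fx, fy), preceq_K(fy, fx)
    if x_le_y and y_le_x:
        return "equal"         # K is pointed, so mutual <=_K forces equality
    if x_le_y:
        return "x better"
    if y_le_x:
        return "y better"
    return "incomparable"      # neither objective value is better than the other

print(compare([1.0, 2.0], [2.0, 3.0]))  # → x better
print(compare([1.0, 3.0], [2.0, 2.0]))  # → incomparable
print(compare([4.0], [5.0]))            # → x better (q = 1: scalar values are always comparable)
```

The second pair is better in the first component and worse in the second, so neither value dominates. When $q = 1$ and $K = \mathbf{R}_{+}$, the last branch can never be reached.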

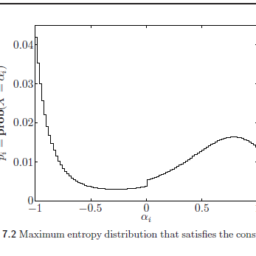

4.7.2 Optimal points and values

We first consider a special case, in which the meaning of the vector optimization problem is clear. Consider the set of objective values of feasible points,

$$

\mathcal{O}=\left\{f_{0}(x) \mid \exists x \in \mathcal{D},\ f_{i}(x) \leq 0,\ i=1, \ldots, m,\ h_{i}(x)=0,\ i=1, \ldots, p\right\} \subseteq \mathbf{R}^{q},

$$

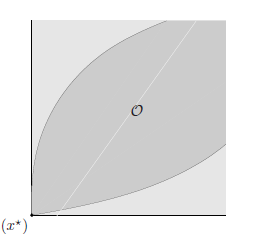

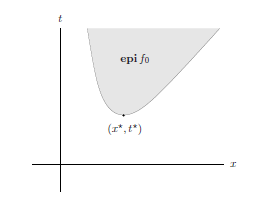

which is called the set of achievable objective values. If this set has a minimum element (see §2.4.2), i.e., there is a feasible $x$ such that $f_{0}(x) \preceq_{K} f_{0}(y)$ for all feasible $y$, then we say $x$ is optimal for the problem (4.56), and refer to $f_{0}(x)$ as the optimal value of the problem. (When a vector optimization problem has an optimal value, it is unique.) If $x^{\star}$ is an optimal point, then $f_{0}\left(x^{\star}\right)$, the objective at $x^{\star}$, can be compared to the objective at every other feasible point, and is better than or equal to it. Roughly speaking, $x^{\star}$ is unambiguously a best choice for $x$, among feasible points.
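On a finite sample, the definition of an optimal point becomes a direct search for a minimum element. The sketch below assumes $K = \mathbf{R}^{q}_{+}$ and stands in for $\mathcal{O}$ with a short list of objective values of feasible points. The true $\mathcal{O}$ is usually infinite, so this illustrates the definition and is not a solver. The data and the name `optimal_index` are hypothetical.

```python
import numpy as np

def optimal_index(values):
    """Return i with values[i] <=_K values[j] for every j (K = R^q_+),
    i.e. every values[j] - values[i] lies in K; return None if no minimum element exists."""
    V = np.asarray(values, dtype=float)
    for i in range(len(V)):
        if np.all(V - V[i] >= 0):  # broadcasting subtracts row i from every row
            return i
    return None

# Objective values f0(x) of a few feasible points (hypothetical data).
O_with_min = [[1.0, 1.0], [2.0, 1.0], [1.0, 3.0]]
O_without_min = [[1.0, 3.0], [2.0, 2.0], [3.0, 3.0]]
print(optimal_index(O_with_min))     # → 0
print(optimal_index(O_without_min))  # → None
```

In the second list, $(1,3)$ and $(2,2)$ are incomparable, so no single value is better than or equal to all the others. The problem therefore has no optimal point, even though every point is feasible.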

A point $x^{\star}$ is optimal if and only if it is feasible and

$$

\mathcal{O} \subseteq f_{0}\left(x^{\star}\right)+K .

$$
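The set inclusion is a restatement of the definition of optimality. To see this, unfold it using $u \preceq_{K} v \iff v-u \in K$ and the fact that $\mathcal{O}$ is exactly the set of values $f_{0}(y)$ over feasible $y$:

$$
\begin{aligned}
\mathcal{O} \subseteq f_{0}\left(x^{\star}\right)+K
&\iff z-f_{0}\left(x^{\star}\right) \in K \quad \text{for all } z \in \mathcal{O} \\
&\iff f_{0}(y)-f_{0}\left(x^{\star}\right) \in K \quad \text{for all feasible } y \\
&\iff f_{0}\left(x^{\star}\right) \preceq_{K} f_{0}(y) \quad \text{for all feasible } y .
\end{aligned}
$$

Together with feasibility of $x^{\star}$, the last line says that $f_{0}(x^{\star})$ is the minimum element of $\mathcal{O}$. For example, with $K=\mathbf{R}^{2}_{+}$ and $\mathcal{O}=\{(1,1),(2,1),(1,3)\}$, every point of $\mathcal{O}$ lies in $(1,1)+\mathbf{R}^{2}_{+}$.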


数学代写| Vector optimization 凸分析代考 请认准UprivateTA™. UprivateTA™为您的留学生涯保驾护航。

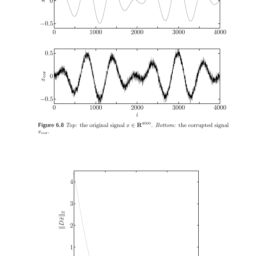

时间序列分析代写

统计作业代写

随机过程代写

随机过程,是依赖于参数的一组随机变量的全体,参数通常是时间。 随机变量是随机现象的数量表现,其取值随着偶然因素的影响而改变。 例如,某商店在从时间t0到时间tK这段时间内接待顾客的人数,就是依赖于时间t的一组随机变量,即随机过程