7.1.1 Maximum likelihood estimation

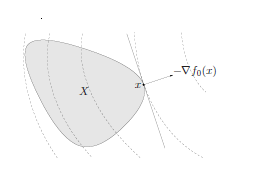

We consider a family of probability distributions on $\mathbf{R}^{m}$, indexed by a vector $x \in \mathbf{R}^{n}$, with densities $p_{x}(\cdot)$. When considered as a function of $x$, for fixed $y \in \mathbf{R}^{m}$, the function $p_{x}(y)$ is called the likelihood function. It is more convenient to work with its logarithm, which is called the log-likelihood function, and denoted $l$:

$$

l(x)=\log p_{x}(y)

$$

There are often constraints on the values of the parameter $x$, which can represent prior knowledge about $x$, or the domain of the likelihood function. These constraints can be explicitly given, or incorporated into the likelihood function by assigning $p_{x}(y)=0$ (for all $y$) whenever $x$ does not satisfy the prior information constraints. (Thus, the log-likelihood function can be assigned the value $-\infty$ for parameters $x$ that violate the prior information constraints.)

Now consider the problem of estimating the value of the parameter $x$, based on observing one sample $y$ from the distribution. A widely used method, called maximum likelihood (ML) estimation, is to estimate $x$ as

$$

\hat{x}_{\mathrm{ml}}=\operatorname{argmax}_{x} p_{x}(y)=\operatorname{argmax}_{x} l(x)

$$

i.e., to choose as our estimate a value of the parameter that maximizes the likelihood (or log-likelihood) function for the observed value of $y$. If we have prior information about $x$, such as $x \in C \subseteq \mathbf{R}^{n}$, we can add the constraint $x \in C$ explicitly, or impose it implicitly, by redefining $p_{x}(y)$ to be zero for $x \notin C$.

The problem of finding a maximum likelihood estimate of the parameter vector $x$ can be expressed as

$$

\begin{array}{ll}
\text { maximize } & l(x)=\log p_{x}(y) \\
\text { subject to } & x \in C
\end{array} \tag{7.1}

$$

where $x \in C$ gives the prior information or other constraints on the parameter vector $x$. In this optimization problem, the vector $x \in \mathbf{R}^{n}$ (which is the parameter in the probability density) is the variable, and the vector $y \in \mathbf{R}^{m}$ (which is the observed sample) is a problem parameter.

The maximum likelihood estimation problem (7.1) is a convex optimization problem if the log-likelihood function $l$ is concave for each value of $y$, and the set $C$ can be described by a set of linear equality and convex inequality constraints, a situation which occurs in many estimation problems. For these problems we can compute an ML estimate using convex optimization.

Linear measurements with IID noise

We consider a linear measurement model,

$$

y_{i}=a_{i}^{T} x+v_{i}, \quad i=1, \ldots, m,

$$

where $x \in \mathbf{R}^{n}$ is a vector of parameters to be estimated, $y_{i} \in \mathbf{R}$ are the measured or observed quantities, and $v_{i}$ are the measurement errors or noise. We assume that $v_{i}$ are independent, identically distributed (IID), with density $p$ on $\mathbf{R}$. The likelihood function is then

$$

p_{x}(y)=\prod_{i=1}^{m} p\left(y_{i}-a_{i}^{T} x\right)

$$

so the log-likelihood function is

$$

l(x)=\log p_{x}(y)=\sum_{i=1}^{m} \log p\left(y_{i}-a_{i}^{T} x\right) .

$$

The ML estimate is any optimal point for the problem

$$

\text { maximize } \sum_{i=1}^{m} \log p\left(y_{i}-a_{i}^{T} x\right),

$$

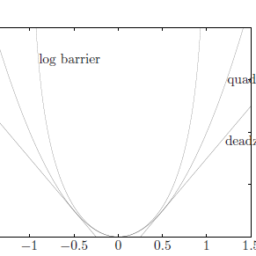

with variable $x$. If the density $p$ is log-concave, this problem is convex, and has the form of a penalty approximation problem ((6.2), page 294), with penalty function $-\log p$.
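As a concrete instance (a standard special case, added here as an illustration under a stated assumption), suppose the noise is Gaussian with zero mean and variance $\sigma^{2}$, so that $p(z)=\left(2 \pi \sigma^{2}\right)^{-1 / 2} e^{-z^{2} /\left(2 \sigma^{2}\right)}$. Substituting this density into the log-likelihood above gives

$$

l(x)=-\frac{m}{2} \log \left(2 \pi \sigma^{2}\right)-\frac{1}{2 \sigma^{2}} \sum_{i=1}^{m}\left(y_{i}-a_{i}^{T} x\right)^{2},

$$

so under this assumption maximizing $l$ is the same as minimizing the sum of squared residuals, and the ML estimate is a least-squares solution.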

Conversely, we can interpret any penalty function approximation problem

$$

\text { minimize } \sum_{i=1}^{m} \phi\left(b_{i}-a_{i}^{T} x\right)

$$

as a maximum likelihood estimation problem, with noise density

$$

p(z)=\frac{e^{-\phi(z)}}{\int e^{-\phi(u)} d u}

$$

and measurements $b$. This observation gives a statistical interpretation of the penalty function approximation problem. Suppose, for example, that the penalty function $\phi$ grows very rapidly for large values, which means that we attach a very large cost or penalty to large residuals. The corresponding noise density function $p$ will have very small tails, and the ML estimator will avoid (if possible) estimates with any large residuals because these correspond to very unlikely events.
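The normalization in the density above can be checked numerically. The sketch below is only an illustration: the helper names `normalizing_constant` and `noise_density`, the integration range, and the step count are all assumptions made for this example. It approximates $\int e^{-\phi(u)} d u$ with the trapezoid rule for the quadratic penalty $\phi(u)=u^{2}$ (analytically $\sqrt{\pi}$) and the absolute-value penalty $\phi(u)=|u|$ (analytically $2$), then compares the two resulting densities far from zero.

```python
import math

def normalizing_constant(phi, lo=-50.0, hi=50.0, steps=20_000):
    """Trapezoid-rule approximation of the integral of exp(-phi(u)) over [lo, hi].

    The finite range is an assumption: it is adequate here because both
    integrands are negligible outside [-50, 50].
    """
    h = (hi - lo) / steps
    total = 0.5 * (math.exp(-phi(lo)) + math.exp(-phi(hi)))
    for k in range(1, steps):
        total += math.exp(-phi(lo + k * h))
    return total * h

def noise_density(phi, z, const):
    """Noise density p(z) = exp(-phi(z)) / const implied by the penalty phi."""
    return math.exp(-phi(z)) / const

def quadratic(u):
    return u * u

const_quad = normalizing_constant(quadratic)  # analytic value: sqrt(pi)
const_abs = normalizing_constant(abs)         # analytic value: 2

# Density of a residual of size 5 under each implied noise model.
tail_quad = noise_density(quadratic, 5.0, const_quad)
tail_abs = noise_density(abs, 5.0, const_abs)
print(const_quad, const_abs, tail_quad, tail_abs)
```

The faster-growing quadratic penalty yields a far smaller density at $z=5$ than the absolute-value penalty, which is the "small tails" effect described above.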

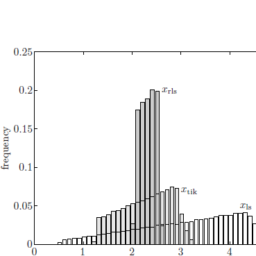

We can also understand the robustness of $\ell_{1}$-norm approximation to large errors in terms of maximum likelihood estimation. We interpret $\ell_{1}$-norm approximation as maximum likelihood estimation with a noise density that is Laplacian; $\ell_{2}$-norm approximation is maximum likelihood estimation with a Gaussian noise density. The Laplacian density has larger tails than the Gaussian, i.e., the probability of a very large $v_{i}$ is far larger with a Laplacian than a Gaussian density. As a result, the associated maximum likelihood method expects to see greater numbers of large residuals.
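A small numerical sketch can make this robustness visible. It assumes the simplest possible model, a scalar $x$ with every $a_{i}=1$, so that $y_{i}=x+v_{i}$. In that case the $\ell_{2}$ (Gaussian) estimate is the sample mean and an $\ell_{1}$ (Laplacian) estimate is the sample median. The data values and function names are hypothetical, chosen only for illustration.

```python
import statistics

def l2_estimate(y):
    """Minimizer of sum (y_i - x)^2: the sample mean (Gaussian-noise ML estimate)."""
    return statistics.fmean(y)

def l1_estimate(y):
    """A minimizer of sum |y_i - x|: the sample median (Laplacian-noise ML estimate)."""
    return statistics.median(y)

# Hypothetical measurements of a scalar x near 1.0.
clean = [0.9, 1.0, 1.1, 1.0, 0.95]
# Same data, with the last measurement replaced by one large error.
corrupted = clean[:-1] + [25.0]

for name, y in [("clean", clean), ("corrupted", corrupted)]:
    print(name, "l2 estimate:", l2_estimate(y), "l1 estimate:", l1_estimate(y))
```

With the single large error, the mean is pulled far from 1 while the median barely moves: the Laplacian model treats one large residual as plausible and does not distort the estimate to shrink it.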

Counting problems with Poisson distribution

In a wide variety of problems the random variable $y$ is nonnegative integer valued, with a Poisson distribution with mean $\mu>0$:

$$

\operatorname{prob}(y=k)=\frac{e^{-\mu} \mu^{k}}{k !}

$$

Often $y$ represents the count or number of events (such as photon arrivals, traffic accidents, etc.) of a Poisson process over some period of time.

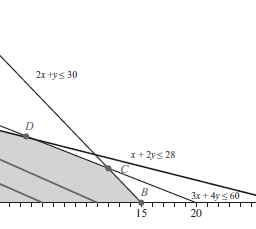

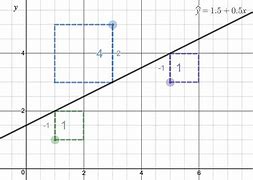

In a simple statistical model, the mean $\mu$ is modeled as an affine function of a vector $u \in \mathbf{R}^{n}$:

$$

\mu=a^{T} u+b .

$$

Here $u$ is called the vector of explanatory variables, and the vector $a \in \mathbf{R}^{n}$ and number $b \in \mathbf{R}$ are called the model parameters. For example, if $y$ is the number of traffic accidents in some region over some period, $u_{1}$ might be the total traffic flow through the region during the period, $u_{2}$ the rainfall in the region during the period, and so on.

We are given a number of observations which consist of pairs $\left(u_{i}, y_{i}\right)$, $i=1, \ldots, m$, where $y_{i}$ is the observed value of $y$ for which the value of the explanatory variable is $u_{i} \in \mathbf{R}^{n}$. Our job is to find a maximum likelihood estimate of the model parameters $a \in \mathbf{R}^{n}$ and $b \in \mathbf{R}$ from these data.
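If the observations are assumed independent (an assumption made for this sketch), the Poisson formula above gives the log-likelihood $l(a, b)=\sum_{i=1}^{m}\left(y_{i} \log \left(a^{T} u_{i}+b\right)-\left(a^{T} u_{i}+b\right)-\log \left(y_{i} !\right)\right)$. The minimal Python sketch below evaluates this expression; the function name `poisson_log_likelihood` and the data are hypothetical. It returns $-\infty$ when some mean $a^{T} u_{i}+b$ is not positive, matching the convention of assigning $-\infty$ to parameter values outside the domain.

```python
import math

def poisson_log_likelihood(a, b, us, ys):
    """Log-likelihood of (a, b) for counts ys with means mu_i = a^T u_i + b.

    Assumes independent observations; returns -inf if any mean is not positive.
    """
    total = 0.0
    for u, y in zip(us, ys):
        mu = sum(aj * uj for aj, uj in zip(a, u)) + b
        if mu <= 0:
            return -math.inf
        # log(y!) is computed as lgamma(y + 1) to avoid large factorials.
        total += y * math.log(mu) - mu - math.lgamma(y + 1)
    return total

# Hypothetical data: one explanatory variable (n = 1), three observations.
us = [[1.0], [2.0], [3.0]]
ys = [2, 4, 6]

print(poisson_log_likelihood([2.0], 0.0, us, ys))  # means equal the counts
print(poisson_log_likelihood([1.0], 0.0, us, ys))  # means too small
print(poisson_log_likelihood([-1.0], 0.0, us, ys)) # infeasible: negative means
```

Each term is concave in $(a, b)$, being the logarithm of an affine function minus an affine function, so maximizing this expression is a convex problem of the kind discussed earlier in the section.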
